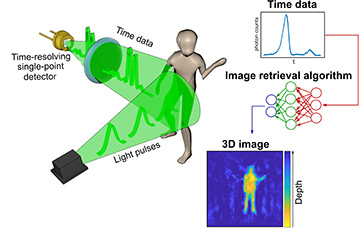

In imaging with time measurements, the scene is flash-illuminated with a pulsed laser (left). A single-point detector records the arrival time of photons from all over the scene in the form of a time histogram (top right), which is processed by an image retrieval algorithm that renders an image in 3D (bottom right).

The conventional way of obtaining an image of a scene is intuitive: the light reflected from every object in the scene is collected with lenses and projected (imaged) onto an array of pixels (a sensor) that records the light intensity. This basic operating principle underlies everyday cameras, such as those in mobile phones.

A more recently developed alternative, single-pixel imaging [1], places the array of pixels not in the detector but in the illumination. By spatially scanning the scene in some form, for example with a sequence of illumination patterns, and measuring the total intensity back-reflected from the scene with a single-pixel sensor, it is possible to form an image. These approaches can be extended to 3D via, for example, stereovision [2] and time-of-flight techniques [3].
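
The single-pixel principle can be illustrated with a minimal Python (NumPy) sketch under idealized assumptions that are not taken from the original work: the illumination patterns are the rows of a Hadamard matrix, the detector is noise-free, and patterns may take values of +1 and -1 (physical systems typically display each one as a pair of complementary on/off patterns and subtract the two readings). The function names are hypothetical.

```python
import numpy as np


def sylvester_hadamard(n: int) -> np.ndarray:
    """Return an n x n Hadamard matrix (+1/-1 entries) via Sylvester's construction.

    n must be a power of 2.
    """
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of 2")
    h = np.ones((1, 1))
    while h.shape[0] < n:
        # Each doubling step: H_2k = [[H_k, H_k], [H_k, -H_k]]
        h = np.block([[h, h], [h, -h]])
    return h


def measure(scene: np.ndarray, patterns: np.ndarray) -> np.ndarray:
    """Simulate a single-pixel ("bucket") detector.

    Row i of `patterns` is one illumination pattern flattened to a vector;
    the detector records only the total back-reflected intensity for it.
    """
    return patterns @ scene.ravel()


def reconstruct(measurements: np.ndarray, patterns: np.ndarray, shape) -> np.ndarray:
    """Invert the measurements, using the fact that H @ H.T == n * I for Hadamard rows."""
    n = patterns.shape[0]
    return (patterns.T @ measurements / n).reshape(shape)


# Example: an 8 x 8 scene containing a bright square, probed with 64 patterns.
scene = np.zeros((8, 8))
scene[2:5, 3:6] = 1.0
H = sylvester_hadamard(scene.size)
y = measure(scene, H)                    # 64 single-pixel readings, no spatial sensor
image = reconstruct(y, H, scene.shape)
print(np.allclose(image, scene))         # prints True
```

Because the Hadamard rows are mutually orthogonal, reconstruction here reduces to a single transpose. With fewer patterns than pixels, or with measurement noise, practical single-pixel systems typically turn to compressed-sensing or other regularized reconstructions instead.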

Forming an image without spatial sensing of the scene, either in the detector or in the illumination, would seem an impossible task. In our recent work, however, we asked precisely how this might be done. To tackle the problem, we used a single-point sensor with time-resolving capabilities, that is, a sensor that has no spatial resolution but measures the arrival time of photons from the scene [4].

In our approach, the scene is flood-illuminated with a pulsed laser, and the return light is focused and collected with a single-point, single-photon avalanche diode (SPAD) detector. The detector provides the arrival time of the return photons from the whole scene in the form of a temporal histogram, which is then used by an AI algorithm to render an estimate of the scene in 3D. By using this approach, we demonstrated 3D imaging of different scenes, including multiple humans, up to a depth of 4 m.
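
The essential point is that the histogram records *when* photons return, not *where* they came from. A minimal forward-model sketch in Python (NumPy) makes this concrete under simplifying assumptions that are not from the original work: the laser and detector are co-located, each scene point is described only by its distance and a reflectivity weight, and noise, laser pulse width, detector timing jitter and intensity falloff with distance are all ignored. The function name `time_histogram`, the 250 ps bin width and the 5 m maximum range are illustrative choices, not the authors' parameters.

```python
import numpy as np

C = 299_792_458.0  # speed of light in vacuum (m/s)


def time_histogram(distance_m, reflectivity, bin_width_s=250e-12, max_range_m=5.0):
    """Bin the round-trip arrival times of photons from every scene point.

    distance_m   : distance of each scene point from the co-located laser/detector (m)
    reflectivity : relative number of photons returned by each point
    Returns (counts, bin_edges); all spatial information is discarded.
    """
    t = 2.0 * np.asarray(distance_m, dtype=float).ravel() / C  # round-trip time of flight
    w = np.asarray(reflectivity, dtype=float).ravel()
    n_bins = int(np.ceil(2.0 * max_range_m / C / bin_width_s))
    return np.histogram(t, bins=n_bins, range=(0.0, n_bins * bin_width_s), weights=w)


# Example: a wall at 4 m with a person-sized patch at 2 m on the left-hand side.
depth = np.full((32, 32), 4.0)
depth[8:24, 4:10] = 2.0
refl = np.ones_like(depth)
refl[8:24, 4:10] = 0.5

counts, edges = time_histogram(depth, refl)
# Mirror the scene left-right: the patch now sits on the right-hand side.
mirrored, _ = time_histogram(np.fliplr(depth), np.fliplr(refl))
print(np.array_equal(counts, mirrored))  # prints True
```

The two mirrored scenes produce identical histograms, so the histogram cannot simply be inverted back into a unique scene. Resolving such ambiguities requires prior knowledge about the kind of scene being imaged, which is one way to see why a trained algorithm, rather than a direct inversion, is used to render the 3D estimate.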

The fact that a single time-resolving, single-pixel sensor is enough for spatial imaging broadens what is traditionally considered to constitute image information. The same concept is transferable to any device capable of probing a scene with short pulses and precisely measuring the return “echo” (for example, radar and acoustic distance sensors). This opens multiple possibilities for imaging and sensing with existing technology, of particular interest for applications in autonomous vehicles, smart devices and wearable technology.
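
How precise the timing must be depends on the propagation speed $v$ of the probing pulse, through the echo-ranging relation $t = 2d/v$ for a target at distance $d$. The worked example below assumes $v \approx 3.0\times10^{8}$ m/s for light and radar and $v \approx 343$ m/s for sound in air at room temperature, and uses the 4 m depth range quoted above:

$$
\begin{aligned}
t_{\text{light}} &= \frac{2 \times 4\ \text{m}}{3.0\times10^{8}\ \text{m/s}} \approx 26.7\ \text{ns},
&\qquad \Delta t_{\text{light}}(\Delta d = 1\ \text{cm}) &\approx 66.7\ \text{ps},\\
t_{\text{sound}} &= \frac{2 \times 4\ \text{m}}{343\ \text{m/s}} \approx 23.3\ \text{ms},
&\qquad \Delta t_{\text{sound}}(\Delta d = 1\ \text{cm}) &\approx 58.3\ \mu\text{s}.
\end{aligned}
$$

For the same depth resolution, an optical system therefore needs timing roughly six orders of magnitude finer than an acoustic one.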

Researchers

Alex Turpin, Gabriella Musarra, Valentin Kapitany, Francesco Tonolini, Ashley Lyons, Ilya Starshynov, Roderick Murray-Smith and Daniele Faccio, University of Glasgow, Glasgow, U.K.

Federica Villa and Enrico Conca, Politecnico di Milano, Milan, Italy

Francesco Fioranelli, Technische Universiteit Delft, Delft, Netherlands

References

1. M.P. Edgar et al. Nat. Photon. 13, 13 (2019).

2. S.T. Barnard and M.A. Fischler. ACM Comput. Surv. 14, 553 (1982).

3. P. Dong and Q. Chen. LiDAR Remote Sensing and Applications (CRC Press, 2017).

4. A. Turpin et al. Optica 7, 900 (2020).