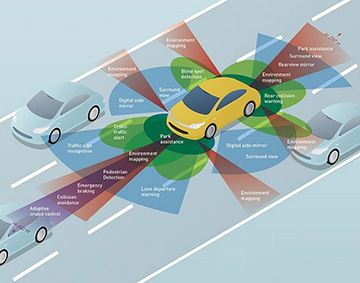

Autonomous vehicles encompass a variety of sensor types for different purposes, including long-range radar (purple), short- and medium-range radar (light green), ultrasound (dark green), optical cameras (blue) and lidar (red). [Image: OPN, January 2018/Infographic by A. Kirkland and J. Hecht]

Lidar, particularly for the autonomous-vehicle revolution, is one of the hottest of hot topics today in optics and photonics. But what kind of lidar? The technology’s deceptively simple five-letter name packs in a complex array of choices, from spinning car-top sensors to tiny solid-state lidar scanners largely still on the drawing boards.

In an invited talk at a session on optical solutions for autonomous driving at CLEO 2019 in San Jose, Calif., USA, Jake Li—the business development manager for automotive lidar with the Japan-based photonics component supplier Hamamatsu—walked the audience through the optical concepts and challenges bubbling beneath the surface in lidar for self-driving cars. The picture that he sketched out was very much one of a technology in flux, with the winners and losers for each technology component unlikely to emerge for some time.

One of several sensor systems

Li stressed that Hamamatsu itself doesn’t make lidar systems—only the core optical elements (photodetectors, light sources and others) that go into them. That, presumably, gives the company a fairly broad, high-level view of the dizzying array of technology options and subsystems that go into an automotive-lidar installation, as well as the other parts of autonomous-vehicle sensing such as radar and camera modules.

Jake Li. [Image: Courtesy of J. Li]

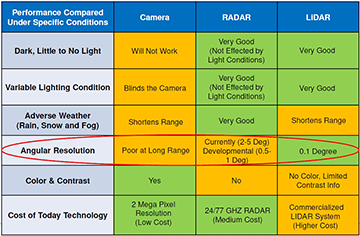

For lidar, Li pointed out, angular resolution is the big thing that separates it from other onboard sensors. “If you want object classification, you need to know that you’re seeing what the object is, at close range or long range.” Lidar’s 0.1 degree angular resolution “basically allows that to happen,” in contrast to systems like radar. Lidar also has some advantages over camera systems, which have trouble in low-lighting and variable-lighting conditions that can temporarily “blind” the camera. Some camera vendors, Li said, are trying to overcome these limitations with (potentially cost-raising) innovations such as near-IR imaging and high dynamic range.
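
To make the 0.1-degree figure concrete, the short sketch below converts an angular resolution into the lateral spacing between neighboring samples at a given distance. The function names, the 0.5-m object width and the coarser 1-degree comparison value are illustrative assumptions, not figures from Li's talk or the specification of any particular sensor.

```python
import math


def spot_spacing_m(range_m: float, angular_resolution_deg: float) -> float:
    """Lateral distance between two adjacent angular samples at a given range."""
    half_angle_rad = math.radians(angular_resolution_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle_rad)


def samples_across(object_width_m: float, range_m: float,
                   angular_resolution_deg: float) -> int:
    """Number of whole sample spacings that fit across an object of the given width."""
    return int(object_width_m // spot_spacing_m(range_m, angular_resolution_deg))


if __name__ == "__main__":
    # Hypothetical scene: a 0.5-m-wide object seen from 100 m away.
    for resolution_deg in (0.1, 1.0):  # 1.0 degree is an assumed coarser sensor
        spacing = spot_spacing_m(100.0, resolution_deg)
        count = samples_across(0.5, 100.0, resolution_deg)
        print(f"{resolution_deg} deg: spacing {spacing:.3f} m, {count} samples across")
```

At 100 m, a 0.1-degree step corresponds to samples about 17 cm apart, so a narrow object still collects more than one return, which is the kind of detail classification depends on.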

Lidar does share one key disadvantage of cameras, however: Both suffer in adverse weather conditions. Component vendors are working on improvements both in hardware and in image-cleaning algorithms. Those can help, Li said, but at a cost—and while “you would definitely see some improvement in the future, 100 percent working under snow, fog and rain won’t be easily done.”

Cost containment

One of the biggest issues with lidar at present, though, is cost, which can amount to tens of thousands of dollars for some units. But the numbers have been dropping, according to Li, and should continue to do so, owing to efforts by component suppliers to cut costs, to increases in volume, and to improvements in manufacturability with increases in automation.

Li listed some of the strengths and weaknesses of three core sensing technologies in autonomous vehicles, with a particular focus on lidar’s key relative strength, angular resolution. [Image: Courtesy of J. Li]

A more rational design approach could also make a difference. “In the past, lidar design has been basically, you try to get a functional lidar out there and show that it works, and then you try to get funding and improve the design afterward,” Li said. “That probably is all changing now, too,” with new lidar systems being developed with design and simplicity in mind, which should help reduce the cost of lidar in the future.

Whatever the cost, Li noted that there are now 70 to 80 companies trying to build lidar systems, including a mix of dedicated lidar designers and units of Tier 1 automotive OEMs. On the flip side, VC and other funding appears to be hitting a plateau and, according to Li, “funding activity will start to dry up” and trend down in the future. In light of that environment, “some of the companies probably have to start showing that they’re able to produce a system that functions in a real environment to get the funding,” he said.

Time-of-flight versus FMCW

Li next dug into some of the numerous systems and component choices confronting lidar makers. The highest-level division, he said, is between time-of-flight (ToF) lidar, which pulls a distance measurement from the flight time of the laser pulse, and frequency-modulated continuous-wave (FMCW) systems, which extract time and velocity information from the frequency shift of returning light. ToF lidar is “a very simple concept,” according to Li, “but it’s very complex to make a high-resolution lidar out of this.” FMCW’s more information-rich data stream is potentially attractive, but has complexities of its own.
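
The two measurement principles can be sketched in a few lines. The example below is a textbook-style illustration, not a description of any vendor's system: it assumes an idealized ToF measurement (distance is half the round-trip time multiplied by the speed of light) and an FMCW system using a linear triangular chirp, with the sign convention that a positive velocity means an approaching target. All function and parameter names, and the numbers in the demo, are hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def tof_distance_m(round_trip_time_s: float) -> float:
    """ToF: the pulse travels out and back, so distance is half the path."""
    return C_M_PER_S * round_trip_time_s / 2.0


def fmcw_beat_frequencies(range_m: float, velocity_m_s: float,
                          chirp_slope_hz_per_s: float, wavelength_m: float):
    """Forward model for an assumed triangular chirp.

    Returns (up-chirp beat, down-chirp beat) in Hz. Assumes the range term
    is larger than the Doppler term, so both beat frequencies are positive.
    """
    f_range = 2.0 * range_m * chirp_slope_hz_per_s / C_M_PER_S
    f_doppler = 2.0 * velocity_m_s / wavelength_m
    return f_range - f_doppler, f_range + f_doppler


def fmcw_range_and_velocity(f_up_hz: float, f_down_hz: float,
                            chirp_slope_hz_per_s: float, wavelength_m: float):
    """Invert the forward model: the sum gives range, the difference gives velocity."""
    range_m = C_M_PER_S * (f_up_hz + f_down_hz) / (4.0 * chirp_slope_hz_per_s)
    velocity_m_s = wavelength_m * (f_down_hz - f_up_hz) / 4.0
    return range_m, velocity_m_s


if __name__ == "__main__":
    print(f"ToF, 1 microsecond round trip: {tof_distance_m(1e-6):.1f} m")

    slope = 1e9 / 10e-6    # assumed chirp: 1 GHz sweep in 10 microseconds
    wavelength = 1550e-9   # assumed 1550-nm source
    f_up, f_down = fmcw_beat_frequencies(150.0, 20.0, slope, wavelength)
    print(fmcw_range_and_velocity(f_up, f_down, slope, wavelength))
```

The FMCW half shows why the data stream is richer: a single up/down chirp pair yields both range and radial velocity, whereas a single ToF pulse yields range alone.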

Even today, Li suggested, mechanically spinning car-top sensors, such as this one from Velodyne, remain an industry benchmark. [Image: Steve Jurvetson/Wikimedia Commons]

For ToF lidar, beam steering is a major area of development. Curiously, rotational beam steering—the familiar car-top spinning scanners popularized by Velodyne, and used in “many cars you see on the road”—is still, according to Li, “the benchmark, even today.” Such systems, said Li, “work great for everything except consumer vehicles,” though it’s likely they’ll eventually be replaced by solid-state beam-steering solutions.

Other mechanical beam steerers include smaller units using polygonal or galvo mirrors, and very compact systems using MEMS mirrors. The latter, according to Li, still need to prove that they can survive in harsh automotive environments, amid vibrations and temperature swings from -40 °C to 105 °C. But he said that they “may give the best balance of cost and performance until solid-state concepts mature.”

Solid-state lidar

Those solid-state solutions include so-called flash lidar, which does not rely on beam scanning at all. For InGaAs-based flash lidar systems, Li noted, costs are higher, but for short-range applications, silicon prices remain manageable. For longer-range applications, though, there’s a continued need to develop better light sources and detectors while driving costs down.

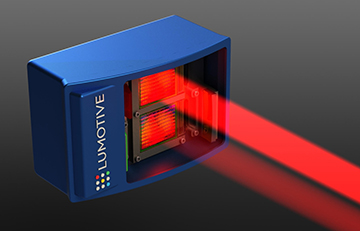

Other solid-state concepts for ToF lidar include optical phased arrays, which at this point are also limited to short ranges, and liquid-crystal metasurfaces. In the latter—a “fairly new concept” by the start-up firm Lumotive—“by tuning the voltage you can basically change the direction of the beam,” Li explained. “In theory it should work, but once again, it still has to be proven in the real world.”

A start-up company, Lumotive, has developed a solid-state lidar unit that uses voltage tuning on a liquid-crystal metasurface to steer the beam. [Image: Lumotive]

Li said that the other approach to lidar, FMCW, has a “ton of benefits” relative to ToF, particularly FMCW’s tolerance to ambient noise and its ability to get out to long distances. But, as with ToF lidar, FMCW is currently grappling with the transition from mechanical to solid-state beam steering, and component costs for light sources are high. Still, Li noted, this could conceivably be an “excellent concept” for fleet vehicles.

Summing up, Li said that “there’s really not a final winner yet” between ToF and FMCW. “At least today, companies will need to use one or two or three lidar concepts,” to address different operating ranges. “We’re still deciding which is the perfect lidar system down the line.”

Optical challenges

Whether ToF or FMCW, lidar systems have a number of technical challenges that fall squarely in the realm of optics. Li focused the end of his talk on two optical challenges in particular. One is the need for compact, economical, high-peak-power light sources capable of producing nanosecond pulses. And the other, equally demanding requirement is for effective collection optics and detectors that can deal with extremely low photon budgets. “Only a small fraction of emitted photons will return to the detector” even in the best case, Li said.
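
A rough feel for how small the photon budget can be comes from a simplified link-budget estimate. The sketch below is not from Li's talk; it assumes a diffuse (Lambertian) target that is larger than the beam and faces the sensor, ignores atmospheric loss, and uses hypothetical values for pulse energy, reflectivity, aperture size and optics efficiency.

```python
import math

PLANCK_J_S = 6.62607015e-34   # Planck constant
C_M_PER_S = 299_792_458.0     # speed of light in vacuum


def photons_per_pulse(pulse_energy_j: float, wavelength_m: float) -> float:
    """Number of photons in one pulse: pulse energy divided by photon energy."""
    return pulse_energy_j * wavelength_m / (PLANCK_J_S * C_M_PER_S)


def return_fraction(range_m: float, reflectivity: float,
                    aperture_diameter_m: float,
                    optics_efficiency: float = 1.0) -> float:
    """Fraction of emitted photons collected by the receiver aperture.

    Simplified model (an assumption): the target scatters as a Lambertian
    surface at normal incidence, so the collected fraction is
    reflectivity * aperture_area / (pi * range^2), times optics efficiency.
    """
    aperture_area_m2 = math.pi * (aperture_diameter_m / 2.0) ** 2
    return reflectivity * aperture_area_m2 / (math.pi * range_m ** 2) * optics_efficiency


if __name__ == "__main__":
    # Hypothetical numbers: 1 microjoule pulse at 905 nm, 10% reflective
    # target at 200 m, 25-mm receiver aperture, 70% optics efficiency.
    emitted = photons_per_pulse(1e-6, 905e-9)
    fraction = return_fraction(200.0, 0.10, 0.025, 0.7)
    print(f"emitted photons:  {emitted:.3e}")
    print(f"return fraction:  {fraction:.3e}")
    print(f"returned photons: {emitted * fraction:.0f}")
```

With these illustrative inputs, trillions of photons leave the sensor and only on the order of a thousand come back, and the collected fraction falls with the square of the distance.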

On the light-source side, the most familiar distinction is between 1550-nm sources, which can operate at higher power (and, thus, longer ranges) without compromising eye safety, and more economical 905-nm systems, which “have been there forever” but must operate at lower power and ranges for eye safety.

Interestingly, while there’s much focus on the maximum range of a given lidar system, which depends on a host of factors including ambient noise, photon budget, filtering and eye safety, the minimum measurable distance is also relevant. In practice, Li explained, for reasons related to detector saturation, “there’s a blind range where the system can’t see anything.” Other factors, such as optical alignment, electronic and photodetector jitter, photodetector excess noise, and pulse duration, can also affect performance.
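
The link between timing and distance makes both effects easy to estimate for a ToF system. The sketch below rests on assumptions that are not from the talk: the receiver is treated as unusable for the pulse duration plus a detector recovery time, and the individual jitter sources are treated as independent so that they add in quadrature. The function names and numbers are hypothetical.

```python
import math

C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def blind_range_m(pulse_duration_s: float, detector_recovery_s: float) -> float:
    """Closest measurable distance if returns arriving during the outgoing
    pulse and the detector's recovery period are lost (assumed model)."""
    return C_M_PER_S * (pulse_duration_s + detector_recovery_s) / 2.0


def range_jitter_m(timing_jitters_s) -> float:
    """Range uncertainty from independent timing-jitter contributions,
    combined in quadrature and converted to distance (round trip halved)."""
    total_jitter_s = math.sqrt(sum(j * j for j in timing_jitters_s))
    return C_M_PER_S * total_jitter_s / 2.0


if __name__ == "__main__":
    # Hypothetical values: 5-ns pulse, 15-ns detector recovery,
    # 100 ps of electronic jitter and 50 ps of photodetector jitter.
    print(f"blind range:  {blind_range_m(5e-9, 15e-9):.2f} m")
    print(f"range jitter: {range_jitter_m([100e-12, 50e-12]) * 100:.2f} cm")
```

Under these assumed values, 20 ns of unusable receiver time hides roughly the first 3 m in front of the sensor, while about 0.1 ns of timing jitter corresponds to range noise on the centimeter scale.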

Perfect choices elusive

As with lasers, Li noted, each detector type—from avalanche photodiodes to silicon-based single-photon avalanche detectors—has its weaknesses and strengths. “There’s not a ‘perfect’ detector,” he said. “There are a lot of choices out there, and we’re still making choices today.”

Those, Li said, are only some of the optical challenges and, he added, “there are tons of other challenges beyond optics.” Component suppliers need to meet the increasingly customized requirements of their automotive clients, and the exacting qualification and reliability standards of the automotive industry. For optics suppliers trying to stake out a position in the lidar game, it all makes for an interesting state of play.