
Rice University grad student Yicheng Wu, a coauthor on the study, with the experimental setup. [Image: Jeff Fitlow/Rice University]

Synthetic-aperture radar (SAR) has been a staple of radio imaging and surveillance for more than half a century. But translating the power of synthetic apertures to long-range optical imaging has proved a tough proposition.

Scientists at Rice University and Northwestern University, USA, now report that they’ve developed a method to create synthetic apertures for imaging in the optical domain—by borrowing a technique from cutting-edge computational microscopy (Sci. Adv., doi: 10.1126/sciadv.1602564). The research team believes that the technique, which currently works only with a coherent light source, “paves the way” toward sub-diffraction-limited optical imaging at standoff distances.

Bigger aperture, higher resolution

In SAR, a moving radar platform, such as a satellite or airplane, sends repeated radar pings to the target area, capturing complete complex-field information (both amplitude and phase) from the radar echoes, at picosecond time resolution. The multiple radar returns are then computationally combined into a single radar image.

By covering a wide distance (on the order of tens of kilometers), the moving platform effectively increases the size of the imaging aperture to many orders of magnitude larger than the physical aperture. And, since any imaging system’s resolution depends in part on the aperture size, this huge “synthetic aperture” dramatically increases the resolution of the resulting radar images.
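
As a rough illustration of why aperture size matters, the smallest resolvable detail of a diffraction-limited imager is often estimated with the Rayleigh criterion. The choice of this particular criterion is an assumption made here for illustration and is not taken from the study:

$$
\Delta x \approx 1.22\,\frac{\lambda z}{D}
$$

Here $\lambda$ is the wavelength, $z$ the distance to the target, $D$ the aperture diameter and $\Delta x$ the smallest resolvable feature on the target. At a fixed wavelength and distance, making the aperture (real or synthetic) $k$ times larger shrinks $\Delta x$ by the same factor $k$.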

But creating an analogous synthetic aperture for long-range optical imaging runs into some fundamental technical problems. While picosecond time resolution is fine for radar, much-shorter-wavelength optical signals would require continuous recording with sub-femtosecond resolution to pull off the same trick. And digital camera sensors for visible light currently record only the intensity part of the complex field, losing the phase information that’s also crucial to synthetic-aperture imaging. As a result, getting higher resolution in long-range optical imaging and surveillance usually involves just fashioning a bigger lens or series of lenses—a technique that quickly adds weight, size and complexity.
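
The timing gap can be made concrete with a back-of-the-envelope calculation of how long one oscillation of the wave lasts. The sketch below is only illustrative: the 3-cm radar wavelength is an assumed, typical X-band value that does not come from the study, while 532 nm is the laser wavelength used in the team's experiment.

```python
# Back-of-the-envelope comparison of carrier periods for radar and green light.
C = 299_792_458.0  # speed of light in vacuum, m/s


def carrier_period_s(wavelength_m: float) -> float:
    """Return the duration of one oscillation of a wave with this wavelength."""
    return wavelength_m / C


radar_wavelength_m = 0.03      # ASSUMPTION: ~3 cm, a typical X-band radar wavelength
optical_wavelength_m = 532e-9  # green laser wavelength used in the experiment

radar_period_ps = carrier_period_s(radar_wavelength_m) * 1e12
optical_period_fs = carrier_period_s(optical_wavelength_m) * 1e15

print(f"radar carrier period:   {radar_period_ps:.0f} ps")
print(f"optical carrier period: {optical_period_fs:.2f} fs")
```

Under these assumptions a radar oscillation lasts on the order of 100 ps, while a green-light oscillation lasts under 2 fs, so sampling the optical field directly would indeed call for sub-femtosecond timing.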

Tapping computational microscopy

The Rice–Northwestern team sought to get around those limitations by creating an approach for synthetic apertures for visible imaging (SAVI). To do so, they drew on research from the field of computational microscopy—and, in particular, on the technique of Fourier ptychography (FP).

Developed and extended by Changhuei Yang of the California Institute of Technology, Laura Waller of the University of California, Berkeley, and others, FP involves capturing multiple micrographic images illuminated by banks of LED lights, and then computationally combining the redundant images, recovering the phase information algorithmically rather than through direct measurement. In principle, the technique allows an ordinary lab microscope to be converted into a gigapixel imaging platform.
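
To give a feel for how phase can be recovered "algorithmically," here is a toy simulation of one common FP variant, an alternating-projection update. It is a minimal sketch under stated assumptions, not the algorithm used in the study: the test object, grid sizes, scan step, circular pupil and all function names are hypothetical choices made for illustration. Each simulated capture sees only a small, shifted window of the object's spectrum and records intensity alone; the loop then stitches the windows together while forcing the estimate to agree with each recorded intensity.

```python
import numpy as np


def circular_pupil(n):
    """Binary circular aperture on an n x n frequency grid."""
    y, x = np.mgrid[-n // 2 : n // 2, -n // 2 : n // 2]
    return (x**2 + y**2 <= (n // 2) ** 2).astype(float)


def patch_slices(center, n):
    """Index the n x n spectral window centered at (row, col)."""
    cy, cx = center
    return slice(cy - n // 2, cy + n // 2), slice(cx - n // 2, cx + n // 2)


def simulate_captures(obj, n, centers, pupil):
    """Low-resolution intensity images, one per spectral window."""
    spectrum = np.fft.fftshift(np.fft.fft2(obj))
    images = []
    for center in centers:
        patch = spectrum[patch_slices(center, n)] * pupil
        field = np.fft.ifft2(np.fft.ifftshift(patch))
        images.append(np.abs(field) ** 2)  # the sensor keeps intensity only
    return images


def reconstruct_spectrum(images, size, n, centers, pupil, iterations=20):
    """Alternating projections: keep the estimated phase, enforce measured amplitude."""
    estimate = np.zeros((size, size), dtype=complex)
    for _ in range(iterations):
        for image, center in zip(images, centers):
            region = patch_slices(center, n)
            field = np.fft.ifft2(np.fft.ifftshift(estimate[region] * pupil))
            field = np.sqrt(image) * np.exp(1j * np.angle(field))
            updated = np.fft.fftshift(np.fft.fft2(field))
            # Overwrite only the part of the window that the pupil passes.
            estimate[region] = estimate[region] * (1 - pupil) + updated * pupil
    return estimate


# Hypothetical test object with both amplitude and phase structure.
N, n, step = 64, 32, 8
y, x = np.mgrid[0:N, 0:N]
amplitude = 1.0 + 0.5 * np.sin(2 * np.pi * x / 8) * np.cos(2 * np.pi * y / 5)
phase = 0.5 * np.sin(2 * np.pi * (x + y) / 16)
obj = amplitude * np.exp(1j * phase)

# 5 x 5 grid of overlapping spectral windows, visited from the center outward.
offsets = range(-2 * step, 2 * step + 1, step)
centers = sorted(
    ((N // 2 + dy, N // 2 + dx) for dy in offsets for dx in offsets),
    key=lambda c: (c[0] - N // 2) ** 2 + (c[1] - N // 2) ** 2,
)
pupil = circular_pupil(n)
images = simulate_captures(obj, n, centers, pupil)
spectrum_est = reconstruct_spectrum(images, N, n, centers, pupil)
recovered = np.fft.ifft2(np.fft.ifftshift(spectrum_est))
print(len(images), "captures of", images[0].shape, "->", recovered.shape)
```

The heavy overlap between neighboring windows (75 percent along each axis in this toy setup) is what supplies the redundancy the algorithm relies on. How closely `recovered` matches `obj` depends on that overlap, the number of iterations and the object itself, so the sketch makes no claim about reconstruction accuracy.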

Extending the technique to long-range imaging offered a few challenges, however. FP microscopy setups have tended to use light transmitted through samples from below, whereas a standoff imaging system would need to use a reflected field. And, of course, the separation between the imaging camera and the object to be imaged increases by orders of magnitude.

Moving camera

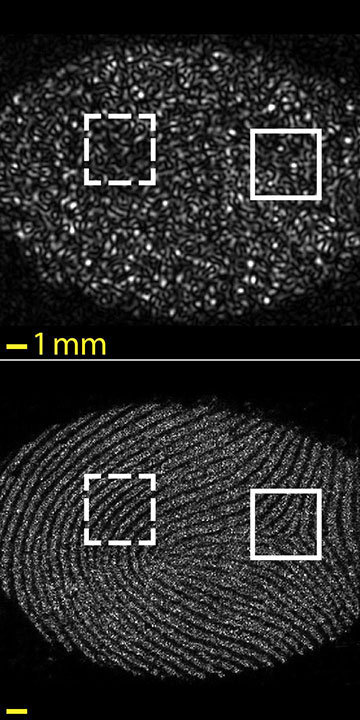

The Rice–Northwestern team’s synthetic-aperture technique for visible light was able to capture details as fine as the ridges on a fingerprint one meter away (bottom), by combining and processing multiple speckled reflected-light images (top) captured by a moving camera at various angles. [Image: Jason Holloway/Rice University]

In their experimental setup, the Rice–Northwestern researchers, led by OSA Member Ashok Veeraraghavan, illuminated an optically rough object 1 m distant using a 532-nm diode-laser source. A machine-vision camera with a CMOS image sensor, and a physical aperture size of 2.5 mm, captured repeated, overlapping images as the camera moved along a path parallel to the object, in much the way that an SAR-equipped airplane, moving above Earth’s surface, would track and image a target on the ground. The resulting synthetic aperture size was 15.1 mm, roughly six times the size of the lens aperture.

The image acquisition left the team with a series of overlapping low-res, speckled reflected images. Using FP computational techniques to recover the phase information and reduce speckle, they found they could combine and reconstruct these low-res samples into a much higher-res image—achieving a sixfold improvement in resolution, in keeping with the increase in synthetic-aperture size. The technique allowed the recovery of crisp, detailed images of a fingerprint’s ridges from a meter away.
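
The reported numbers can be checked with simple arithmetic. The sketch below plugs the experiment's wavelength, distance and aperture sizes into the Rayleigh criterion; using that criterion is an assumption made here for illustration, and the team may define resolution differently.

```python
# Rough diffraction-limited resolution for the reported experimental parameters.
WAVELENGTH_M = 532e-9           # laser wavelength from the experiment
DISTANCE_M = 1.0                # camera-to-object distance from the experiment
LENS_APERTURE_M = 2.5e-3        # physical aperture from the experiment
SYNTHETIC_APERTURE_M = 15.1e-3  # synthetic aperture from the experiment


def rayleigh_resolution_m(aperture_m: float) -> float:
    """ASSUMPTION: Rayleigh criterion, 1.22 * wavelength * distance / aperture."""
    return 1.22 * WAVELENGTH_M * DISTANCE_M / aperture_m


lens_res_um = rayleigh_resolution_m(LENS_APERTURE_M) * 1e6
synthetic_res_um = rayleigh_resolution_m(SYNTHETIC_APERTURE_M) * 1e6
gain = SYNTHETIC_APERTURE_M / LENS_APERTURE_M

print(f"lens alone:         {lens_res_um:.1f} micrometers")
print(f"synthetic aperture: {synthetic_res_um:.1f} micrometers")
print(f"aperture ratio:     {gain:.2f}x")
```

Under this assumption the lens alone resolves features of roughly 260 micrometers at 1 m, while the synthetic aperture brings that down to about 43 micrometers, which matches the roughly sixfold gain described above.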

The team notes that the SAVI system still has a number of challenges to meet before it can be scaled up to a production implementation. At this point it depends on coherent light, and atmospheric turbulence could prove a significant impediment to imaging at truly long distances. Still, the researchers believe that further research can overcome these problems, raising the prospect of a “full-scale imaging platform in the near future.”