Ommatius ouachitensis (robber fly). [Getty Images]

There is an increasing demand for robust, rapid-response computer vision systems. But perceptions of the natural world can be overwhelming in their complexity. Given that the computational costs of machine learning grow faster than the volume of data being processed, how can we scale machine learning for large volumes of data, including patterned or delayed information embedded as “noise”?

Evolution has clearly given us, as human beings, the ability to efficiently navigate an ever-changing world, interpret our experience and make decisions. That is an astonishing capability, given the immense volumes of data that we process, its moderate likelihood of being distorted, its fickle temporal availability and its variable information content. Flies and other insects, facing their own version of this problem, have evolved optical and neural mechanisms that rapidly and efficiently filter visual information to find what is important amid variable environmental conditions. In thinking about the data-processing demands of machine vision, we can probably learn a lot from insects.

In this feature article, we look at some of the ways insect visual systems have solved the information problem—and how those solutions could inform computer vision architectures. After summarizing some essential aspects of insect vision and their intricate optical and neural components, we propose an insect-inspired design framework that involves optical encoding, sparse sampling with spatial compression, and shallow hardware postprocessing. Such a framework, we believe, can produce machine vision systems that can reliably extract higher-level signals in the face of noise—and can do so with lower computational costs than systems based on deep learning.

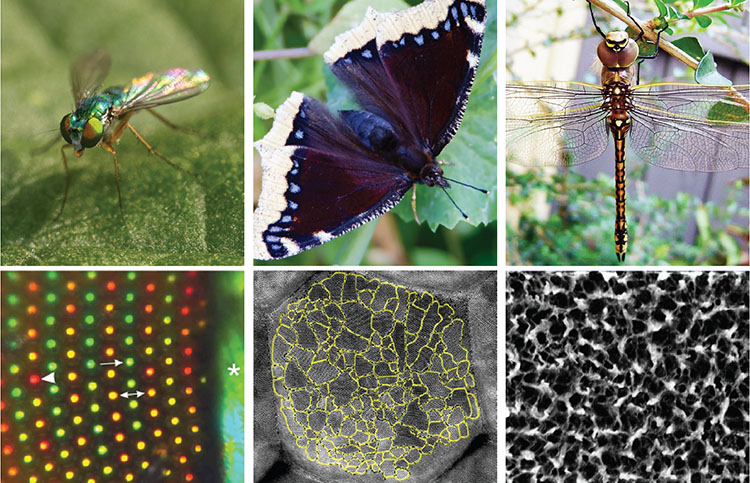

Insect eyes showing multiscale, meso-ordered optics. Left: A green long-legged fly (top) and its characteristic facet pattern with alternating rows of corneal interference reflectors (bottom). Center: The Mourning Cloak, a diurnal butterfly (top) and the polycrystalline domains of corneal nanostructures on one of its facet lenses (bottom). Right: A Hemianax papuensis dragonfly (top) with fractal nanostructures (bottom) on its wings. [D.G. Stavenga et al. J. Comp. Physiol. A 203, 23 (2017) / K. Lee et al. Sci. Rep. 6, 28342 (2016) / E.P. Ivanova et al. PLOS One 8, e67893 (2013); CC-BY 4.0]

From bio-inspiration to “biospeculation”

Fast-flying insects such as flies offer a particularly illuminating place to start. As anyone who has attempted to swat one can attest, flies have record-speed reflexes, as measured by their light-startle response. They physically react to a flash within milliseconds, a response time shorter than the frame interval of a typical camera. A speedy fly can travel about 10 m/s, covering a thousand times its body length in only a second: the equivalent of a human-sized Superman flying at roughly five times the speed of sound. The fly manages a range of impressive, high-speed maneuvers in response to visual stimuli, and the mechanisms it has evolved for doing so may well offer a key for robust high-speed analyses in optical image processing and future camera designs.
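
The body-length comparison can be checked with a few lines of arithmetic. This is a minimal sketch; the 1-cm fly body length, the 1.8-m human-sized Superman and the 343-m/s speed of sound (dry air near 20 °C) are assumptions, not figures from the article.

```python
# Back-of-the-envelope check of the fly-versus-Superman comparison.
FLY_SPEED_M_S = 10.0        # flight speed quoted in the text
FLY_LENGTH_M = 0.01         # assumption: 1-cm body length
SUPERMAN_HEIGHT_M = 1.8     # assumption: human-sized body length
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air near 20 °C


def body_lengths_per_second(speed_m_s: float, length_m: float) -> float:
    """Speed expressed in body lengths per second."""
    return speed_m_s / length_m


def equivalent_mach(bl_per_s: float, length_m: float) -> float:
    """Mach number of a body of length_m moving at bl_per_s body lengths per second."""
    return bl_per_s * length_m / SPEED_OF_SOUND_M_S


bl = body_lengths_per_second(FLY_SPEED_M_S, FLY_LENGTH_M)
mach = equivalent_mach(bl, SUPERMAN_HEIGHT_M)
print(f"{bl:.0f} body lengths per second, about Mach {mach:.1f}")
```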

Moths offer another inspiring case. These insects can fly and find their way in cluttered environments at extremely low light levels, and they have sensitive color vision. Their special eye structures and visual capacities may well provide a model for low-light camera systems.

These insect examples for imaging technology, however, raise other questions. How can computer vision systems actually achieve the robust, high-speed signal processing of flying insects? How do insects find each other in noisy environments? How do they efficiently sample data with so few receptors and quickly process the information with their small brains?

Extensive studies on insects’ visual sense suggest many things that would be desirable to reverse-engineer. Today’s high-field-of-view optics are often heavy or bulky; lidar scanning systems have a limited angular acuity; computer vision approaches can be complex and energy intensive. Most unmanned aircraft systems (UAS) currently offload computing to ground-based computers or use GPS or other networked, distributed data for navigation. The fly’s obstacle-avoidance and landing abilities would be useful in UAS technology. Insects’ capacity to locate each other, again despite having few sensors, could inspire the development of transceivers for free-space communication systems. Arthropod-inspired designs have already been successfully applied in wide-field-of-view and 3D cameras.

We should be cautious about inferring too much function from form. Nature does not globally optimize, but rather evolves through adjacent possible steps. Though we might associate form and function, insect morphology may not always produce the specific functions we impute to it. Current UAS integrated vision sensor technologies do not mimic biological systems directly, but instead advance through improvements based on a subtle understanding of insects’ systems.

Research in this domain thus involves considerable “biospeculation,” through which one can move beyond bio-inspiration to inverse-engineer and assert the possible functionality apparently possessed by insects. Biospeculation deals with functions that, while they may exist, would be hard to validate—yet, nonetheless, they serve the conceptual development of technological applications.

Insect eyes, insect brains

Extensive studies on arthropod eyes, especially in recent decades, have yielded detailed insight into their inner workings. Insects have compound eyes composed of numerous anatomically identical building blocks, the ommatidia, each one capped by a facet lens. This structure allows an easy estimation of the number of “pixels” of an insect eye. In the tiny fruit fly Drosophila, about 800 ommatidia make up an eye; in the larger dragonflies, this number can go up to 20,000. In a 2009 review of the fruit fly’s imaging system, the neurobiologist Alexander Borst asked rhetorically: “Who would buy a digital camera with a fisheye lens and a 0.7 kilopixel chip, representing a whole hemisphere by a mere 26×26 pixels?” Flies quite sensibly do.
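
Borst’s numbers are easy to reproduce. The sketch below assumes, as his comparison does, an idealized square pixel array spread uniformly over a hemisphere; real ommatidial lattices are hexagonal and their sampling varies across the eye, so the angular figure is only a rough average.

```python
import math

OMMATIDIA_PER_EYE = 800  # Drosophila figure quoted in the text
SIDE = 26                # Borst's square-array equivalent
HEMISPHERE_DEG = 180.0   # angle spanned by one side of the array (idealized)

pixels = SIDE * SIDE                             # 676 pixels
kilopixels = pixels / 1000                       # about 0.7 kilopixel
equivalent_side = math.sqrt(OMMATIDIA_PER_EYE)   # side of a square array with 800 pixels
deg_per_pixel = HEMISPHERE_DEG / SIDE            # coarse average sampling interval

print(pixels, round(kilopixels, 1), round(equivalent_side, 1), round(deg_per_pixel, 1))
```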

Fly eyes have eight photoreceptors per ommatidium, but in most other insects, such as bees, butterflies and dragonflies, an ommatidium contains nine photoreceptor cells, each with a characteristic spectral and polarization sensitivity. Each ommatidium, in effect one “pixel,” captures light from a limited angular range, generally on the order of one degree half-width.

The facet lenses focus incident light onto the photoreceptors, where it is absorbed by their visual pigment molecules, the rhodopsins. These molecules trigger a molecular phototransduction chain, which depolarizes the photoreceptor’s membrane potential. This is then fed forward to the higher neuronal ganglia, which together form the visual brain.

Elements of insect eyes

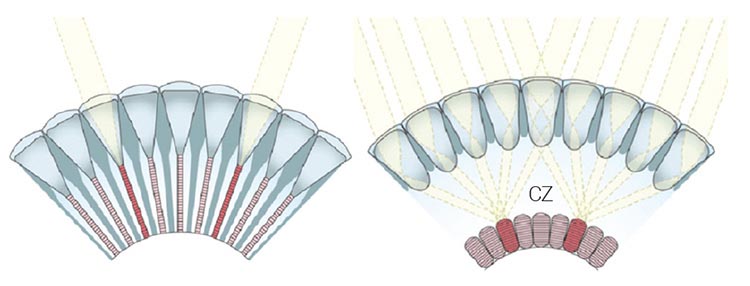

Left: In apposition eyes, ommatidia are optically isolated. The rhabdomeres—visual pigment-containing organelles of the photoreceptor cells—together form a fused rhabdom (red), which receives light through a single facet lens. Right: In superposition eyes, several lenses focus light onto a rhabdom across the clear zone (CZ).

The eye of the aquatic insect Notonecta glauca includes zones of preferential polarization absorption, dictated by variations in microvilli orientations of adjacent R7 and R8 polarization-sensitive photoreceptors across different zones in the compound eye. [E.J. Warrant, Phil. Trans. R. Soc. B 372, 20160063 (2017) / Adapted from T. Heinloth et al. Front. Cell. Neurosci. 12, 50 (2018), CC-BY 4.0]

Vision is central to a fly’s life; approximately 70% of its brain is dedicated to processing incoming visual data. Generally, flying insects have brains with 100,000 to 500,000 neurons and a mass of less than 1 mg (estimated from a volume of 0.08 mm³, assuming a dry weight of 0.25 mg). With an estimated 50-µW power demand, the brain consumes 10% of the animal’s total metabolic power. With that basic equipment, insects achieve image-processing speeds as high as 200 fps, about an order of magnitude faster than humans’ response to visual stimuli.

Clustered sensors and typologies

The construction of the eyes, the polarization and spectral properties of the photoreceptors, and the organization of neuronal processing strongly correlate with an organism’s lifestyle and habitat. In the apposition eyes of diurnal insects, like bees, the photoreceptors in an ommatidium strictly receive light only via their overlying facet lens. By contrast, in the optical-superposition eyes of nocturnal insects, like moths, a photoreceptor gathers light from up to hundreds of facets.

Furthermore, in many cases, eyes are regionalized. Male fly eyes have a specific area, the “love spot,” dedicated to detecting potential mates. Honeybee drones have specialized the main dorsal part of their eyes for spotting a honeybee queen as a contrasting dot in the sky. Ant and cricket eyes have a dorsal rim, dedicated to analyzing the polarization pattern of sky light. By contrast, the common backswimmer, Notonecta glauca, has polarization vision in the ventral part of its eyes.

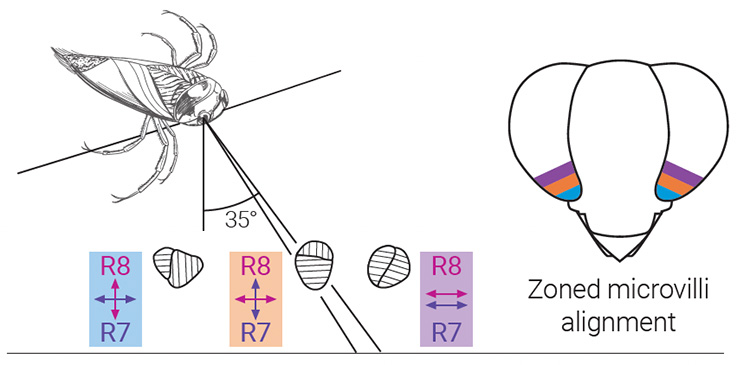

Corneal nanostructures in insects

Left: Daytime predator and carrion-seeking insects have random, shallow protrusions with spectral gratings. Right: Hovering butterflies and moths have ordered, raised corneal nanostructures.

Some butterfly and moth compound eyes have lenses coated with additional raised nanostructures. Those of the Mourning Cloak butterfly (shown here) are polycrystalline with random rotational order, and defects between crystals at grain boundaries. [W.C. Smith and J.F. Butler, J. Insect Physiol. 37, 287 (1991) / D.G. Stavenga et al. Proc. R. Soc. B 273, 661 (2005) / K. Lee et al. Sci. Rep. 6, 28342 (2016), CC-BY 4.0]

The facet lenses of insect eyes are often covered with intricate nanostructures that vary from earwigs to bees to butterflies and moths. These nanostructures may offer antireflective and glare-reducing surfaces or provide anti-wetting hydrophobic surfaces to keep lenses clean.

Transmission electron microscopy reveals distinct nanostructure patterns in the facet lenses of daytime predatory insects and of hovering insects. In the daytime predators, these are mostly random, flat patterns, but the facet lenses of horseflies and deer flies, scorpionflies, dragonflies, and long-legged flies carry 1D interferometric Fabry-Pérot spectral filters. Meanwhile, in moths and butterflies, polycrystalline lattices made up of corneal nanonipples exhibit more periodic patterns. Preliminary phylogenetic analyses and optical modeling of these structures suggest that daytime and nighttime insect structures differ in their optical function. Patterns on the insect eyes can exhibit multiple scales of order and photonic-crystal-like arrays.
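
To illustrate how a stack of thin layers acts as an interference spectral filter, the sketch below computes normal-incidence reflectance with the standard characteristic-matrix (transfer-matrix) method for lossless layers. The refractive indices, number of layers and 550-nm design wavelength are hypothetical round numbers, not measured values for any insect cornea.

```python
import math

# Hypothetical stack parameters chosen for illustration only.
N_HIGH, N_LOW = 1.7, 1.5    # alternating refractive indices (assumed)
N_AIR, N_CORNEA = 1.0, 1.5  # incident medium and substrate (assumed)
DESIGN_NM = 550.0           # wavelength at which each layer is a quarter wave
PAIRS = 10                  # number of high/low pairs (assumed)


def layer_matrix(n, thickness_nm, wavelength_nm):
    """Characteristic matrix of one lossless layer at normal incidence."""
    delta = 2 * math.pi * n * thickness_nm / wavelength_nm  # phase thickness
    c, s = math.cos(delta), math.sin(delta)
    return [[c, 1j * s / n], [1j * n * s, c]]


def matmul2(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]],
            [a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]]]


def reflectance(layers, wavelength_nm, n_in=N_AIR, n_sub=N_CORNEA):
    """Reflectance of (index, thickness) layers listed from the incident side to the substrate."""
    m = [[1, 0], [0, 1]]
    for n, d in layers:
        m = matmul2(m, layer_matrix(n, d, wavelength_nm))
    b = m[0][0] + m[0][1] * n_sub
    c = m[1][0] + m[1][1] * n_sub
    r = (n_in * b - c) / (n_in * b + c)  # amplitude reflection coefficient
    return abs(r) ** 2


quarter_wave_pair = [(N_HIGH, DESIGN_NM / (4 * N_HIGH)), (N_LOW, DESIGN_NM / (4 * N_LOW))]
stack = quarter_wave_pair * PAIRS
for wl in (450.0, 550.0, 700.0):
    print(wl, round(reflectance(stack, wl), 3))
```

Changing `DESIGN_NM` shifts the high-reflectance band; light in that band is partly reflected before it reaches the photoreceptors, which is the sense in which such a stack filters the spectrum.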

Moving from lens to brain, several broad neural-circuit models of insect perception involving optical flow or polarization detection have been advanced. Reichardt detectors are neural-circuit models of motion detection: the signal from one photoreceptor is delayed and multiplied with the undelayed signal from a neighboring photoreceptor, so the output is largest when an object passes the two inputs with a matching time delay. These models include a low-signal-to-noise adaptation that provides an event signature (that is, a nonzero signal when an object passes with a time delay across adjacent inputs) and a high-signal-to-noise adaptation, providing more complex, differential-movement information. Higher-level memory, cognition, decision-making and sensorimotor responses build on these outputs in more complex neural systems that include circular networks.
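
The delay-and-correlate scheme can be sketched in a few lines. This is the classic opponent Hassenstein-Reichardt correlator with a first-order low-pass filter as its delay, a textbook simplification rather than the event- and motion-signal variants shown in the figure; the time constant and the drifting-grating stimulus are hypothetical.

```python
import math


def low_pass(signal, tau, dt=1.0):
    """First-order low-pass filter, used here as the detector's delay stage."""
    alpha = dt / (tau + dt)
    out, y = [], 0.0
    for x in signal:
        y += alpha * (x - y)
        out.append(y)
    return out


def reichardt(left, right, tau=5.0):
    """Opponent Hassenstein-Reichardt correlator for two neighboring inputs.

    Each arm multiplies one input's delayed (low-pass) signal with the other's
    undelayed signal; subtracting the mirror arm makes the output signed:
    positive for motion from `left` toward `right`, negative for the reverse.
    """
    dl, dr = low_pass(left, tau), low_pass(right, tau)
    return [a * b - c * d for a, b, c, d in zip(dl, right, dr, left)]


n, period, lag = 2000, 40, math.pi / 4
w = 2 * math.pi / period
left = [1 + math.sin(w * t) for t in range(n)]
right = [1 + math.sin(w * t - lag) for t in range(n)]  # right input lags: left-to-right motion
settle = 200                                           # skip the filter's start-up transient
rightward = sum(reichardt(left, right)[settle:]) / (n - settle)
leftward = sum(reichardt(right, left)[settle:]) / (n - settle)
print(round(rightward, 3), round(leftward, 3))
```

Swapping the two inputs flips the sign of the mean output, which is what makes the opponent arrangement direction selective.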

Small-brain feed-forward neural circuits

Data flow from inputs at the top to correlation at the bottom, where inputs are signals from different photoreceptors.

Proposed Reichardt detectors, hypothetical neural circuits postulated for insect brain motion tracking. It is thought that processing switches from (left) event signals to (right) motion signals with an increasing signal-to-noise ratio.

Neural circuits associated with Tabanus bromius horseflies mix polarization and intensity signals, processing input from paired polarization-sensitive photoreceptors (R7 and R8) with orthogonally oriented (horizontal and vertical) microvilli, together with unpolarized signals from other photoreceptors (R1 to R6). [T. Haslwanter, Wikimedia Commons, CC-BY-SA 3.0 / A. Meglič et al. Proc. Natl. Acad. Sci. USA 116, 21843 (2019), CC-BY-NC-ND 4.0]

Another broad neural-circuit model, studied in the horsefly Tabanus bromius, rests on combined polarization, spectral and intensity information. Specifically, the difference between the signals of photoreceptors with orthogonally oriented microvilli is mixed with the difference between signal intensities from nonpolarized receptors. This mixture provides spatially encoded polarization information in a single output signal.
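
A minimal sketch of this kind of mixing, assuming the common cosine idealization of a photoreceptor’s polarization sensitivity and a plain weighted sum. The polarization sensitivity (`ps=6`) and the mixing weight (`w=0.5`) are hypothetical, and the functions are illustrative rather than the published horsefly model.

```python
import math


def receptor(intensity, dolp, aop_rad, microvilli_rad, ps=6.0):
    """Idealized photoreceptor response to partially linearly polarized light.

    intensity: total light intensity; dolp: degree of linear polarization (0..1);
    aop_rad: angle of polarization; microvilli_rad: microvilli orientation;
    ps: polarization sensitivity (max/min response ratio), a hypothetical value.
    """
    k = (ps - 1.0) / (ps + 1.0)  # modulation depth implied by ps
    return intensity * (1.0 + k * dolp * math.cos(2.0 * (aop_rad - microvilli_rad)))


def mixed_output(intensity, dolp, aop_rad, i_a, i_b, w=0.5):
    """Single output mixing a polarization-opponent signal with an intensity contrast.

    R7 is modeled with horizontal microvilli and R8 with vertical ones; i_a and i_b
    are responses of two polarization-insensitive receptors; w is a hypothetical weight.
    """
    r7 = receptor(intensity, dolp, aop_rad, 0.0)
    r8 = receptor(intensity, dolp, aop_rad, math.pi / 2)
    return (r7 - r8) + w * (i_a - i_b)


print(mixed_output(1.0, 0.0, 0.3, 1.0, 1.0))          # unpolarized light, no contrast
print(mixed_output(1.0, 1.0, 0.0, 1.0, 1.0))          # horizontally polarized light
print(mixed_output(1.0, 1.0, math.pi / 2, 1.0, 1.0))  # vertically polarized light
```

In this sketch, polarization and intensity cues are summed into one number, so the sign and size of the output depend on both.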

From fly vision to UAS vision

It’s interesting to note that, while there’s been substantial progress in implementing computer vision on small UAS for autonomous navigation and obstacle negotiation, the results of the algorithm-centered pipelines of UAS vision systems remain generally inferior to the evolved abilities of flying insects.

Conventional UAS pipelines process images from one or more cameras, and often the video data are composed of high-pixel-count, low-frame-rate image sequences. Subsequent frame-by-frame image processing predicts 3D movement in the environment by detecting and tracking features. Such a framework generally demands additional filtering to remove outliers and generate reliable 3D models. These real-time calculations—requiring throughputs of trillions of operations per second (TeraOPS)—are viable only with GPUs or specialized hardware designed for parallelized calculations.

In addition, such feature-detection algorithms often miss small, few-pixel-wide or pixel-sized objects, complicating aerial negotiation around cables, bare tree branches and netting. In photon-starved environments, a typical camera’s setting is limited to either a short integration time, resulting in noisy pixels, or a long integration time, resulting in motion blur, both of which adversely affect the performance of feature-detection algorithms. Dirt or condensation on camera lenses results in image degradation from blur or scattering that further compromises algorithm performance. And when a feature-detection algorithm fails for these or other reasons, the entire computer vision pipeline fails, rendering the UAS unsafe to operate.
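
The integration-time dilemma follows from two simple relations: shot-noise-limited SNR grows as the square root of the collected photons, while motion blur grows linearly with exposure. The sketch below ignores read noise, dark current and quantization, and its photon rate, angular speed and pixel field of view are hypothetical.

```python
import math


def shot_noise_snr(photon_rate_per_s, t_int_s):
    """Shot-noise-limited SNR of one pixel: N / sqrt(N) = sqrt(N) for N collected photons."""
    return math.sqrt(photon_rate_per_s * t_int_s)


def blur_pixels(angular_speed_deg_s, t_int_s, ifov_deg):
    """Smear, in pixels, of a scene sweeping past at a constant angular speed."""
    return angular_speed_deg_s * t_int_s / ifov_deg


# Hypothetical dim scene and maneuver.
PHOTON_RATE = 2000.0   # photons per second reaching one pixel (assumed)
ANGULAR_SPEED = 200.0  # degrees per second of apparent scene motion (assumed)
IFOV = 0.1             # degrees per pixel (assumed)

for t_ms in (1, 4, 16):
    t = t_ms / 1000
    print(t_ms, "ms:", round(shot_noise_snr(PHOTON_RATE, t), 2), "SNR,",
          round(blur_pixels(ANGULAR_SPEED, t, IFOV), 1), "px blur")
```

Quadrupling the integration time doubles the SNR but also quadruples the blur, so no single exposure setting fixes both problems.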

The power of optical preprocessing

Schematic view of a dense, fully connected multilayer neural network used for image processing in which inputs are transformed to outputs via a learned model.

Preprocessing with optical encoders can provide spatial signal compression to minimize the model size, either for detecting features, for example for image classification (center), or for filtering noise, for example for image reconstruction (bottom). In both cases, the optical preprocessing results in a smaller model with reduced computational load.

We have wondered if some key components of the fly’s snapshot reflexes might lie not only in its neural processing but also in its optics, and how those components might be applied to UAS vision systems that can operate with low size, weight and power (SWaP). In advanced integrated vision sensors, modules leverage optics that pair with vision chips and tailored processing units. The processor can be programmed with firmware to operate and acquire visual information in tightly integrated devices that are optimized for high performance and small size. In such a framework, optics can encode information in a way that reduces the computational load of image processing. The general scheme involves optical preprocessing, sparse sampling and high-speed, shallow optimization, mimicking the neural-circuit models of insect vision in quick, feed-forward calculations.

The virtues of “small brains”

Rather than use high-resolution images and multiple layers (with relatively long optimization times), as in conventional UAS vision pipelines, integrated vision sensors modeled on insect vision, enabled by optical preprocessing, can focus on low-pixel-density images, coarse image reconstruction and quick, back-end calculations. This kind of system can be described as one having optically encoded signals that are decoded by a “small brain.” With machine-learned neural-network (NN) models, small brains (whether they are initialized as small or become small via brain pruning) can have faster inference speeds after training, lower storage requirements, minimized in-memory computations and reduced data-processing power costs. The disadvantage is that the small-brain NN system’s learned functions are highly dependent on training, meaning the outcomes are data- and task-driven (and thus generally less adaptable to truly novel situations).
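
The article does not specify a pruning method; one common way to make a trained network smaller is magnitude pruning, in which the weights with the smallest absolute values are set to zero. The sketch below shows only that step on a made-up weight matrix; in practice pruning is usually followed by retraining, which is omitted here.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of a weight matrix with the smallest-magnitude fraction set to zero.

    weights: list of rows (lists of floats); sparsity: fraction in [0, 1] to remove.
    Exactly int(sparsity * size) entries are zeroed; ties are broken by position.
    """
    rows, cols = len(weights), len(weights[0])
    ranked = sorted((abs(weights[i][j]), i, j) for i in range(rows) for j in range(cols))
    k = int(sparsity * rows * cols)
    pruned = [row[:] for row in weights]
    for _, i, j in ranked[:k]:
        pruned[i][j] = 0.0
    return pruned


def count_macs(weights):
    """Multiply-accumulates a sparsity-aware layer would still need (one per nonzero weight)."""
    return sum(1 for row in weights for w in row if w != 0.0)


W = [[0.9, -0.05, 0.3],
     [0.01, -0.7, 0.2]]
small = magnitude_prune(W, 0.5)
print(small, count_macs(W), "->", count_macs(small))
```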

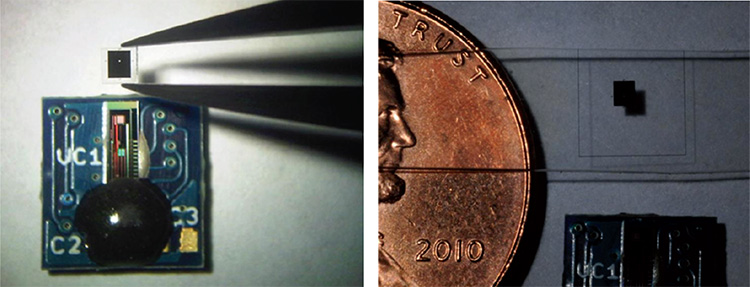

Such an approach has already been demonstrated using the simplest of all optical encoders—pinhole optical encoders. These diffractive encoders, which achieve a field of view of almost 180 degrees, offer high signal compression and coarse image reconstruction—potentially significant advantages for applications that prioritize high-speed reconstruction and lightweight hardware. Although the level of image coarseness from pinhole encoders prevents them from picking out details, they are capable of registering small obstacles in real time in a way that onboard high-definition cameras cannot.

Simple optical preprocessing with pinhole diffraction provides a 180-degree field of view. The printed pinhole (shown between forceps) is mounted on a Centeye 125-mg TinyTam 16×16 sensor. A US penny is provided for scale. [Courtesy of Centeye]

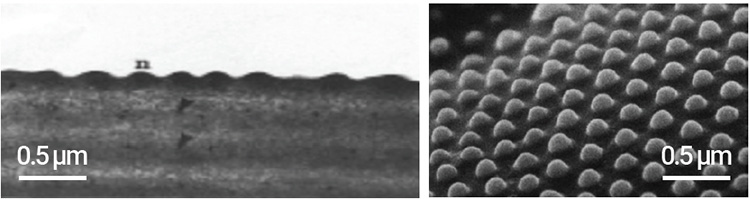

In future integrated vision sensors, preprocessing from corneal nanostructures may serve in ways that go beyond antiglare optical functions—a design approach that is commonly ignored by researchers implementing insect-vision-inspired UAS. A pipeline involving corneal nanostructures would be in the spirit of lensless diffusers and other encoded computational-imaging approaches employed in computer vision today (see “Losing the Lens,” OPN, July/August 2021). In the specific pipelines that involve sparse sampling and shallow, feed-forward processing, an insect-inspired model could offload significant computation costs to parallel optical preprocessing by corneal encoders.

In sum, the advantages of small-brain electronic decoding are speed and robustness, plus, in an NN framework, lower training-data requirements. For certain technologies such as UAS, speed and robustness may be prioritized over high-resolution image reconstruction. Certainly, the fly’s evolutionary commitment to this approach has been successful.

A single-layer neural network (SNN) with additional optical preprocessing (in the form of a vortex lens encoder) enabled the robust classification of MNIST handwritten digits. At low noise levels (high SNR), classification accuracies are comparable to those of deep-learning convolutional neural networks (CNNs). At high noise levels (low SNR), the SNN with vortex encoders significantly outperforms the CNNs. Insets show noisy images without encoding. [B. Muminov and L.T. Vuong, Proc. SPIE 11388 (2020), doi: 10.1117/12.2558983]

In work over the past several years, we have attempted to quantify some of those benefits. In our experiments, vortex encoders were used in front of a lens, and linear intensity pixels were captured in the Fourier plane, which spatially compresses the intensity pattern. This pattern was then fed to a shallow single- and dual-layer NN—composed of linear and nonlinear activation functions—which was found to stably achieve image contrast.
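
The encoding step described above can be sketched numerically. This toy model is not the authors’ code: it assumes an ideal lens whose Fourier-plane field is modeled by a unitary 2D discrete Fourier transform, a vortex phase mask of topological charge 1, and sum-pooling into coarse pixels as a stand-in for spatial compression. The readout weights are placeholders that would have to be trained. Because the vortex mask changes only the phase, the total pooled intensity equals the image energy (Parseval’s theorem), a handy sanity check.

```python
import cmath
import math


def vortex_mask(n, charge):
    """Phase-only vortex mask exp(i * charge * phi) centered on an n x n grid."""
    c = (n - 1) / 2.0
    return [[cmath.exp(1j * charge * math.atan2(y - c, x - c)) for x in range(n)]
            for y in range(n)]


def dft2(field):
    """Unitary 2D discrete Fourier transform by direct summation (fine for tiny grids)."""
    n = len(field)
    out = []
    for u in range(n):
        row = []
        for v in range(n):
            acc = 0j
            for y in range(n):
                for x in range(n):
                    acc += field[y][x] * cmath.exp(-2j * math.pi * (u * y + v * x) / n)
            row.append(acc / n)  # dividing by n makes the transform unitary on an n x n grid
        out.append(row)
    return out


def encode(image, charge=1, pool=2):
    """Vortex mask -> Fourier-plane intensity -> sum-pooled coarse pixels (compression).

    Pooling is done in the DFT's native (unshifted) frequency layout.
    """
    n = len(image)
    mask = vortex_mask(n, charge)
    field = [[image[y][x] * mask[y][x] for x in range(n)] for y in range(n)]
    intensity = [[abs(f) ** 2 for f in row] for row in dft2(field)]
    m = n // pool
    return [sum(intensity[by * pool + dy][bx * pool + dx]
                for dy in range(pool) for dx in range(pool))
            for by in range(m) for bx in range(m)]


def linear_readout(features, weights, biases):
    """Single-layer readout: one score per class; weights would be learned in practice."""
    return [sum(w * f for w, f in zip(w_row, features)) + b
            for w_row, b in zip(weights, biases)]


# An 8 x 8 toy image (a short horizontal bar) compressed to 16 sensor values.
img = [[1.0 if 2 <= x <= 5 and y == 3 else 0.0 for x in range(8)] for y in range(8)]
feats = encode(img, charge=1, pool=2)
print(len(feats), round(sum(feats), 6))
```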

We found that in our small-brain setup, when noise was added to the image and to the optically encoded sensor-plane measurements, the image contrast remained, although parts of images disappeared. By contrast, with a more complex, deep-learning convolutional NN under similar noise conditions, the reconstructed images became almost unrecognizable. In other words, the small-brain model trades lower-fidelity images in the absence of noise for robustness and reliable performance in the presence of noise.

Meanwhile, nonlinearities in the form of logarithmic responses can give a sensor the ability to operate over a wide dynamic range of lighting conditions. An insect flying through a forest in daylight may simultaneously observe a bright sunlit patch of ground and a nearby deep-shadowed area with a radiance three orders of magnitude lower. The logarithmic response at a front-end sensor means it can easily capture pixels embodying environmental information at a range of ambient light levels.
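
The benefit of a logarithmic front end shows up as soon as the response is digitized. This sketch compares hypothetical 8-bit linear and logarithmic pixels on a scene spanning three orders of magnitude, assuming a log-pixel floor of 0.1 (arbitrary radiance units) and ignoring noise.

```python
import math


def quantize(x, bits=8):
    """Map a normalized response in [0, 1] to an integer digital code."""
    return round(x * (2 ** bits - 1))


def linear_pixel(radiance, full_scale):
    """Linear pixel normalized to full_scale; saturates above it."""
    return min(radiance / full_scale, 1.0)


def log_pixel(radiance, floor, full_scale):
    """Logarithmic pixel: equal output steps per decade between floor and full_scale."""
    r = min(max(radiance, floor), full_scale)
    return math.log10(r / floor) / math.log10(full_scale / floor)


# Hypothetical radiances: sunlit patch, deep shadow three decades dimmer,
# and a 10% brighter detail inside the shadow.
SUNLIT, SHADOW, SHADOW_DETAIL = 1000.0, 1.0, 1.1

lin_codes = [quantize(linear_pixel(v, SUNLIT)) for v in (SUNLIT, SHADOW, SHADOW_DETAIL)]
log_codes = [quantize(log_pixel(v, 0.1, SUNLIT)) for v in (SUNLIT, SHADOW, SHADOW_DETAIL)]
print("linear codes:", lin_codes)
print("log codes:   ", log_codes)
```

The linear pixel assigns the same code to the shadow and to the brighter detail inside it, whereas the log pixel keeps them distinct while still encoding the sunlit patch.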

Toward insect-vision-inspired flight control

How far can we take the insect vision model? Whether low-resolution vision systems can guide a flying machine has been studied extensively. Early experiments, published in 2000, used a single, downward-aimed optical flow sensor to provide a fixed-wing aircraft with autonomous altitude hold while flying forward. The biologically inspired optical flow algorithm relied on input from 18 rectangular logarithmic-response pixels and analog image-processing circuitry, with further processing performed by an 8-bit microcontroller running at about 1.5 million operations per second (MOPS); the sensor acquired imagery at a 1.4-kHz frame rate. Later implementations, involving an 88-pixel analog neuromorphic-vision sensor chip operated by a 10-MOPS microcontroller, achieved a high degree of reliability, including holding altitude over snow on a cloudy day.
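
For a downward-looking sensor in level flight over flat ground, the optical flow directly below the aircraft is ω = v/h, so a measured flow and a known airspeed give the height. The sketch below uses a generic least-squares gradient estimate of 1D image shift as a stand-in, since the article does not detail the algorithm used in 2000. The 18 pixels and 1.4-kHz frame rate follow the text; the ground texture, the 10-m/s speed and the 0.01-rad pixel spacing are hypothetical.

```python
import math


def flow_1d(prev, curr):
    """Least-squares gradient estimate of 1D image shift, in pixels per frame.

    Valid for small shifts; uses central-difference spatial gradients and
    frame-difference temporal gradients (brightness-constancy assumption).
    """
    num = den = 0.0
    for i in range(1, len(prev) - 1):
        ix = (prev[i + 1] - prev[i - 1]) / 2.0
        it = curr[i] - prev[i]
        num += ix * it
        den += ix * ix
    return -num / den if den > 0 else 0.0


def height_from_flow(speed_m_s, flow_px_per_frame, ifov_rad, frame_rate_hz):
    """Height over flat ground from translational optical flow directly below: h = v / omega."""
    omega = flow_px_per_frame * ifov_rad * frame_rate_hz  # angular rate, rad/s
    return speed_m_s / omega


# 18 pixels viewing a ground texture that shifts 0.1 pixel between frames.
N_PIXELS, SHIFT = 18, 0.1
prev = [math.sin(0.5 * i) for i in range(N_PIXELS)]
curr = [math.sin(0.5 * (i - SHIFT)) for i in range(N_PIXELS)]
est = flow_1d(prev, curr)
print(round(est, 3), "px/frame")
print(round(height_from_flow(10.0, est, 0.01, 1400.0), 2), "m")  # hypothetical speed and optics
```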

Like a compound eye, sensor arrays may support obstacle avoidance using optical flow algorithms based on fruit flies. In experiments reported in 2002, three 88-pixel optical flow sensors aimed in the forward-left, forward and forward-right directions were able to trigger sharp execution of fixed-sized turns based on the difference in optical flow between the sensors. In 2012, vision-based hover-in-place via omnidirectional optical flow sensors was demonstrated with an 18-cm-wide coaxial helicopter. In 2010, a ring of eight vision chips acquiring a total of 512 rectangular pixels (half horizontal and half vertical) grabbed eight optical flow measurements around the yaw-plane that were used to estimate position based on a model of global optical flow processing neurons found in the blowfly. A few years later, a 250-pixel setup enabled similar behavior—in an implementation weighing only 3 grams that included the eight vision chips, optics, interconnects and an 8-bit microcontroller running at 60 MOPS.
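
The turn rule can be illustrated with the translational-flow relation: a stationary point at distance d and bearing θ from the flight direction moves across the eye at v·sin θ / d, so the side with more flow holds the nearer obstacle. This sketch uses only a left/right pair of sensors; the threshold, the 30-degree turn and the scene geometry are hypothetical.

```python
import math


def translational_flow(speed_m_s, distance_m, bearing_rad):
    """Optical-flow magnitude (rad/s) of a stationary point at a bearing from the flight direction."""
    return speed_m_s * math.sin(bearing_rad) / distance_m


def turn_command(flow_left, flow_right, threshold, turn_deg=30.0):
    """Fixed-size turn away from the side with more optical flow (the nearer obstacle).

    Returns a heading change in degrees: positive = turn right, negative = turn left,
    0.0 = keep going. threshold and turn_deg are hypothetical tuning values.
    """
    diff = flow_left - flow_right
    if diff > threshold:
        return turn_deg
    if diff < -threshold:
        return -turn_deg
    return 0.0


# Wall 2 m away on the forward-left, open space 10 m away on the forward-right.
bearing = math.radians(45)
fl = translational_flow(5.0, 2.0, bearing)
fr = translational_flow(5.0, 10.0, bearing)
print(round(fl, 2), round(fr, 2), turn_command(fl, fr, threshold=0.5))
```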

As these examples demonstrate, useful vision-based flight control can be performed with integrated visual circuits involving sensors with hundreds or thousands instead of millions of pixels—and at data throughputs many orders of magnitude smaller than the TeraOPS levels of conventional UAS vision systems that require sophisticated GPUs. In the work we briefly surveyed above, no GPS, motion capture, external computation or position systems were used. We speculate that with the added front-end processing provided by optical encoders—again, biospeculative optical preprocessing achieved by insect eyes—more advanced behaviors may be supported by these low-SWaP systems.
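
The gap between these systems and conventional pipelines can be put in numbers using the figures quoted above. The 1-megapixel, 30-fps comparison camera is a hypothetical reference, and operation counts are only a rough proxy for computational cost.

```python
import math

TERAOPS = 1e12  # lower bound of "trillions of operations per second"
systems = {
    "2000 altitude hold (~1.5 MOPS)": 1.5e6,
    "later 88-pixel sensor (10 MOPS)": 10e6,
    "3-gram yaw-plane system (60 MOPS)": 60e6,
}
for name, ops in systems.items():
    gap = math.log10(TERAOPS / ops)  # orders of magnitude below 1 TeraOPS
    print(f"{name}: {gap:.1f} orders of magnitude below 1 TeraOPS")

# Raw pixel sample rate of the 2000 sensor versus a hypothetical 1-megapixel, 30-fps camera.
sensor_rate = 18 * 1400       # 18 pixels at 1.4 kHz
camera_rate = 1_000_000 * 30  # assumed conventional camera
print(sensor_rate, "vs", camera_rate, "pixel samples per second")
```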

When less is more

The psychologist William James wrote that “the art of being wise is the art of knowing what to overlook.” Insect vision embodies this philosophy. To close this article, we offer some examples of what the fly has “learned to overlook”—with lessons for those designing machine vision systems:

Rely less on more distortable information. Insects see in the UV, which provides greater image contrast and is less vulnerable to environmental and sensor thermal noise than the IR.

Capture more multidimensional information with fewer pixels. Insects sample sparsely and leverage polarization, which enables richer higher-level inferences.

Encode sensor data to capture more information. The seemingly random domains of corneal nanostructures could spatially disperse data and provide the capacity to collect, filter, process and map more visual data.

Reduce computational complexity. Insects leverage shallow computation and feed-forward algorithms to make rapid decisions.

Do not assume more is better. Coarse, high-frame-rate image processing offers robustness in variable natural environments.

Luat T. Vuong is with the University of California Riverside, Riverside, CA, USA. Doekele G. Stavenga is with the University of Groningen, Netherlands. Geoffrey L. Barrows is with Centeye in Washington, DC, USA.

References and Resources

- G.L. Barrows and C. Neely. “Mixed-mode VLSI optic flow sensors for in-flight control of a micro air vehicle,” in Critical Technologies for the Future of Computing, SPIE Proc. 4109, 52 (2000).
- G.L. Barrows et al. “Biologically inspired visual sensing and flight control,” Aeronaut. J. 107, 159 (2003).
- D.G. Stavenga et al. “Light on the moth-eye corneal nipple array of butterflies,” Proc. R. Soc. B 273, 661 (2005).
- A. Borst. “Drosophila’s View on Insect Vision,” Curr. Biol. 19, R36 (2009).
- H. Haberkern and V. Jayaraman. “Studying small brains to understand the building blocks of cognition,” Curr. Opin. Neurobiol. 37, 59 (2016).
- D.G. Stavenga et al. “Photoreceptor spectral tuning by colorful, multilayered facet lenses in long-legged fly eyes (Dolichopodidae),” J. Comp. Physiol. A 203, 23 (2017).
- E.J. Warrant. “The remarkable visual capacities of nocturnal insects: vision at the limits with small eyes and tiny brains,” Phil. Trans. R. Soc. B 372, 20160063 (2017).
- B. Muminov and L.T. Vuong. “Fourier optical preprocessing in lieu of deep learning,” Optica 7, 1079 (2020).
- C.J. van der Kooi et al. “Evolution of insect color vision: From spectral sensitivity to visual ecology,” Annu. Rev. Entomol. 66, 435 (2021).
- T. Wang et al. “Image sensing with multilayer nonlinear optical neural networks,” Nat. Photonics 17, 408 (2023).

For complete references and resources, visit: optica-opn.org/link/1123-insect-vision.