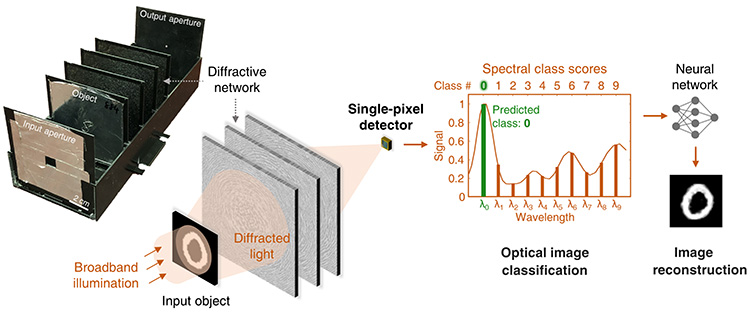

A diffractive optical network encodes the spatial information of objects into the spectrum of the diffracted light, so that input objects can be classified, and their images reconstructed, from the output spectrum detected by a single pixel.

Machine vision systems powered by artificial intelligence present exciting opportunities in autonomous vehicles, robotics, biomedicine, defense/security and other areas. Conventional systems often use lens-based cameras with high-pixel-count image sensors to digitize a scene, which is then passed to a digital processor. Because the full frame must be digitized and transferred for every inference, this workflow limits throughput. Furthermore, high-pixel-count image sensors are not readily available in some parts of the electromagnetic spectrum, such as the infrared and THz bands.

We recently reported a single-pixel machine vision framework,1 based on a series of diffractive surfaces designed using deep learning. The network of these spatially engineered surfaces performs a desired machine-learning task as the input light diffracts through it.2 Broadband light illuminates the unknown object to be classified, and the diffractive surfaces encode the spatial information of the object features into the power spectrum of the diffracted light. A single-pixel detector then collects the light at the output of the diffractive network.
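To make the spectral-encoding idea concrete, here is a minimal numerical sketch, not the implementation reported in ref. 1. It assumes scalar angular-spectrum propagation, thin phase-only layers described by a height map, a dispersion-free refractive index, and a small square aperture standing in for the single-pixel detector. The names `propagate` and `output_spectrum`, the grid size, pitch, layer spacing, wavelengths and random (untrained) height maps are all illustrative assumptions.

```python
import numpy as np

def propagate(field, wavelength, pitch, distance):
    """Angular-spectrum free-space propagation of a square complex field."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pitch)                  # spatial frequencies (1/m)
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2      # > 0 for propagating waves
    kz = 2.0 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Evanescent components (arg <= 0) are simply dropped in this sketch.
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def output_spectrum(obj, heights, wavelengths, pitch, spacing,
                    n_index=1.7, aperture=2):
    """Power collected by a small central aperture at each wavelength."""
    c = obj.shape[0] // 2
    powers = []
    for lam in wavelengths:
        field = propagate(obj.astype(complex), lam, pitch, spacing)
        for h in heights:
            # A thin layer of height h delays the wave by a wavelength-dependent
            # phase; n_index is an assumed, dispersion-free material index.
            field = field * np.exp(1j * 2.0 * np.pi * (n_index - 1.0) * h / lam)
            field = propagate(field, lam, pitch, spacing)
        window = field[c - aperture:c + aperture, c - aperture:c + aperture]
        powers.append(np.sum(np.abs(window) ** 2))
    return np.array(powers)

# Illustrative values only (hypothetical, not taken from the article).
rng = np.random.default_rng(0)
n, pitch, spacing = 64, 0.5e-3, 30e-3                # grid, pixel pitch (m), gap (m)
wavelengths = np.linspace(1.0e-3, 1.45e-3, 10)       # ten wavelengths (m)
obj = np.zeros((n, n))
obj[24:40, 30:34] = 1.0                              # a simple bar-shaped object
heights = rng.uniform(0.0, 1.0e-3, size=(3, n, n))   # three untrained layers
spectrum = output_spectrum(obj, heights, wavelengths, pitch, spacing)
```

With random height maps the resulting spectrum carries no class information; in the reported framework the layers are optimized with deep learning so that the power at each assigned wavelength becomes meaningful.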

In this system, different object types or data classes are uniquely assigned to different wavelengths. Unknown input objects are automatically classified all-optically, using the output spectrum detected through a single pixel: the predicted class is the one whose assigned wavelength carries the maximum optical signal among the predefined wavelengths that represent object types.
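The decision rule described here amounts to an argmax over the power measured at the class wavelengths. The sketch below illustrates it under stated assumptions: the function name `classify_from_spectrum`, the wavelength grid, the class wavelengths and the synthetic measured spectrum are all hypothetical and chosen only for illustration.

```python
import numpy as np

def classify_from_spectrum(wavelength_grid, measured_power, class_wavelengths):
    """Return the class whose assigned wavelength carries the most power."""
    grid = np.asarray(wavelength_grid, dtype=float)
    power = np.asarray(measured_power, dtype=float)
    targets = np.asarray(class_wavelengths, dtype=float)
    # For each class wavelength, pick the nearest sample of the measured spectrum.
    nearest = np.abs(grid[:, None] - targets[None, :]).argmin(axis=0)
    class_power = power[nearest]
    return int(np.argmax(class_power)), class_power

# Synthetic example (hypothetical numbers): ten class wavelengths in mm and
# a measured spectrum that peaks at the wavelength assigned to class 3.
grid = np.linspace(1.0, 1.5, 501)
class_wavelengths = np.linspace(1.05, 1.45, 10)
measured = 0.01 + np.exp(-((grid - class_wavelengths[3]) / 0.01) ** 2)
digit, class_power = classify_from_spectrum(grid, measured, class_wavelengths)
```

Only the comparison at the end needs electronics; the encoding of the object into the spectrum happens in the diffractive layers themselves.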

We demonstrated this framework at THz wavelengths by classifying images of handwritten digits using a single-pixel detector and three diffractive layers that were jointly optimized using deep learning.1 Despite using a single pixel, we achieved all-optical classification of handwritten digits with more than 95% accuracy, based on the maximum spectral power at 10 distinct wavelengths, each assigned to one digit.

An experimental proof of concept using 3D-printed diffractive layers and a plasmonic nanoantenna-based THz time-domain spectroscopy setup showed close agreement with our numerical results.1 This demonstrated the efficacy of these networks for all-optical classification of previously unseen input objects, without the need for an image sensor array, a digital processor or external power, except for the illumination light.

In addition to performing all-optical image classification, we also trained a shallow electronic artificial neural network with two hidden layers to rapidly reconstruct the images of unknown input objects using only the detected power at the 10 distinct wavelengths assigned to represent the 10 digits. Since each reconstructed image includes approximately 800 pixels, this reconstruction demonstrates a task-specific image decompression, following an all-optical classification event through a single pixel.1
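The shape of such a decoder can be sketched as follows. This is an untrained forward pass under stated assumptions, not the network from ref. 1: the hidden-layer widths, ReLU activations, input normalization and the 28 × 28 output (784 pixels, consistent with "approximately 800") are illustrative choices, and `init_decoder` and `decode` are hypothetical names.

```python
import numpy as np

def init_decoder(rng, n_in=10, hidden=(64, 128), n_out=784):
    """Random weights for a 10 -> hidden -> hidden -> 784 fully connected net."""
    sizes = (n_in, *hidden, n_out)
    return [
        (rng.normal(0.0, np.sqrt(2.0 / a), size=(a, b)), np.zeros(b))
        for a, b in zip(sizes[:-1], sizes[1:])
    ]

def decode(params, spectral_power, side=28):
    """Map 10 spectral power values to a side x side image."""
    x = np.asarray(spectral_power, dtype=float)
    x = x / (x.sum() + 1e-12)               # normalization is an assumption
    for w, b in params[:-1]:
        x = np.maximum(x @ w + b, 0.0)      # two hidden layers with ReLU
    w, b = params[-1]
    return (x @ w + b).reshape(side, side)  # linear output layer

rng = np.random.default_rng(0)
params = init_decoder(rng)
spectral_power = rng.uniform(0.0, 1.0, size=10)  # stand-in for detected power
image = decode(params, spectral_power)
```

In this sketch 10 input numbers expand to 784 output pixels, which is the sense in which the reconstruction acts as a task-specific decompression; a trained decoder would learn its weights from pairs of detected spectra and ground-truth images.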

This framework, in our view, could provide transformative advantages in frame rate, memory requirement and power consumption, which are especially important for mobile computing applications. We believe that spectral encoding of spatial information using deep-learning-designed diffractive networks could enable low-latency, low-power and high-frame-rate machine vision systems in different parts of the electromagnetic spectrum in a resource-efficient manner.

Researchers

Jingxi Li, Deniz Mengu, Nezih T. Yardimci, Yi Luo, Xurong Li, Muhammed Veli, Yair Rivenson, Mona Jarrahi and Aydogan Ozcan, University of California, Los Angeles, CA, USA

References

1. J. Li et al. Sci. Adv. 7, eabd7690 (2021).

2. X. Lin et al. Science 361, 1004 (2018).