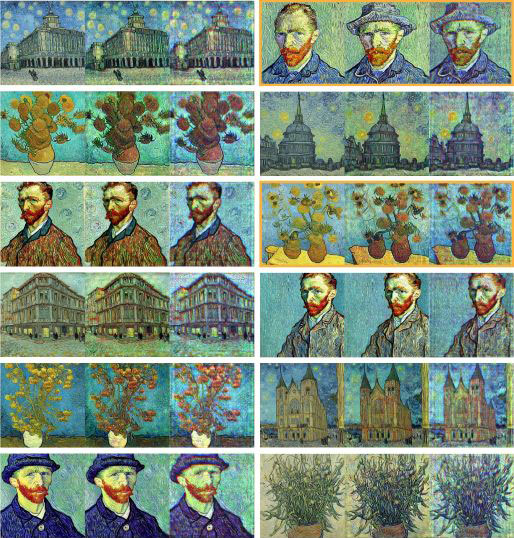

An optical generative model can create diverse versions of colorful artworks in a single processing step. [Image: Ozcan Lab/UCLA]

Researchers at the University of California, Los Angeles (UCLA), USA, have devised an optical computing strategy that creates novel images and videos using much less energy than conventional models for generative artificial intelligence (AI) (Nature, doi: 10.1038/s41586-025-09446-5). Delivering a comparable performance to image generators that rely on digital electronics, the light-based scheme offers an energy-efficient approach for deploying powerful AI models in real-world applications.

Generative AI at scale

The UCLA researchers were inspired by current diffusion models for generative AI, which work by adding random noise to a set of real data and then removing the noise to produce new content that resembles the training dataset. Digital diffusion models operate iteratively, gradually reducing the noise over hundreds or thousands of processing steps, which consumes vast amounts of computational energy and limits their speed for generating visual content in real time.

In contrast, the model developed by the UCLA team requires only a single snapshot to generate a new image that follows the desired data distribution. To start with, a digital encoder is used to rapidly transform random noise patterns into two-dimensional phase structures that act as seeds for the generative process. One of these phase maps is then projected onto a spatial light modulator, which when illuminated with laser light produces a light field encoded with the phase information. Passing this optical field through a diffractive structure enables the phase pattern to be decoded, creating an entirely new image that is captured on a sensor array.

In one experiment, the optical network created original versions of colorful artworks inspired by Vincent van Gogh, producing outputs that were even more diverse than those generated by a digital diffusion model requiring 1,000 iterations.

To validate their approach, the researchers showed that the optical model could generate novel images from various datasets, including handwritten digits, butterflies and human faces, with a similar quality to traditional AI models. In one experiment, the optical network created original versions of colorful artworks inspired by Vincent van Gogh, producing outputs that were even more diverse than those generated by a digital diffusion model requiring 1,000 iterations.

“Our work shows that optics can be harnessed to perform generative AI tasks at scale,” said lead author Aydogan Ozcan. “Models like ours open the door to snapshot, energy-efficient AI systems that could transform everyday technologies.”

Toward an all-optical image generator

In a further advance, the researchers developed an iterative framework that repeats the decoding process up to five times. This iterative process yields higher-quality images with clearer backgrounds and in the future could enable the development of an all-optical image generator.

For this proof-of-concept study the researchers exploited a free-space optical architecture, but a much smaller solution could be created by implementing the same framework on a photonic chip. If that can be achieved, such optical generative models could prove particularly valuable for applications that require rapid processing of visual information, such as augmented and virtual reality.