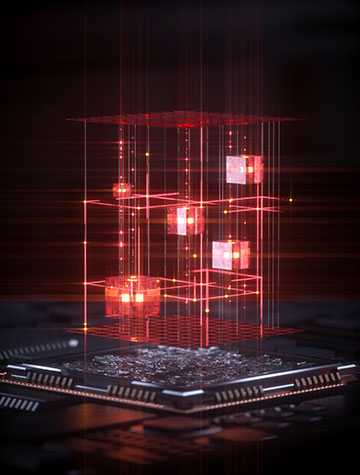

While humans and classical computers must perform tensor operations step by step, light can do them all at once. [Image: Photonics group / Aalto University]

Optical computing is an emerging platform for computing and accelerating the neural networks that drive artificial intelligence (AI). Its advantages over traditional electronic platforms include large bandwidth, high parallelism and low energy consumption.

However, a major drawback is that tensor processing—a key operation in neural network computations—typically requires multiple light propagations. Now, researchers in China and Finland have demonstrated an approach that enables fully parallel tensor processing through a single coherent light propagation (Nat. Photonics, doi: 10.1038/s41566-025-01799-7).

“This approach can be implemented on almost any optical platform,” said study author Zhipei Sun, Aalto University, Finland, in a press release accompanying the research. “In the future, we plan to integrate this computational framework directly onto photonic chips, enabling light-based processors to perform complex AI tasks with extremely low power consumption.”

At the speed of light


In traditional computing, tensor processing is most often implemented as matrix–matrix multiplication on graphics processing unit (GPU) tensor cores. The major challenges of this approach include large memory bandwidth demands, substantial power consumption and inefficient use of tensor core resources. In principle, optical implementations of neural networks can improve both the efficiency and the versatility of tensor processing.
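To make the workload concrete, here is a minimal plain-Python sketch of the matrix–matrix multiplication C = AB that tensor cores accelerate. It computes one output entry at a time and counts the multiply–accumulate (MAC) operations along the way. The function name `matmul_with_mac_count` is hypothetical, and real GPU kernels tile and parallelize this loop rather than running it serially.

```python
def matmul_with_mac_count(A, B):
    """Multiply an M x K matrix A by a K x N matrix B one entry at a time.

    Returns the product C and the number of multiply-accumulate (MAC)
    operations performed, which is always M * N * K.
    """
    M, K = len(A), len(A[0])
    if len(B) != K:
        raise ValueError("inner dimensions must match")
    N = len(B[0])
    C = [[0] * N for _ in range(M)]
    macs = 0
    for m in range(M):
        for n in range(N):
            for k in range(K):
                C[m][n] += A[m][k] * B[k][n]  # one multiply-accumulate
                macs += 1
    return C, macs


C, macs = matmul_with_mac_count([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(C, macs)  # [[19, 22], [43, 50]] 8
```

Because the MAC count grows as M·N·K, doubling every matrix dimension multiplies the work by eight. That scaling helps explain why memory traffic and power consumption become bottlenecks for large models on electronic hardware.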

“Our method performs the same kinds of operations that today’s GPUs handle, like convolutions and attention layers, but does them all at the speed of light,” said study author Yufeng Zhang, Aalto University. “Instead of relying on electronic circuits, we use the physical properties of light to perform many computations simultaneously.”

Sun, Zhang and their colleagues performed both theoretical simulations and experimental demonstrations of parallel optical matrix–matrix multiplication. To showcase the approach's functionality and compatibility, they built a proof-of-concept prototype from conventional optical components, including a 532-nm solid-state continuous-wave laser, amplitude and phase spatial light modulators, and cylindrical lens assemblies.
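The idea of obtaining every entry of a matrix product from one coherent pass can be illustrated with a toy numerical model. The sketch below is a hypothetical illustration of phase multiplexing in general, not a reconstruction of the published setup: each row of A is tagged with its own linear phase, the weighted contributions of all rows are superposed in a single complex field, and a discrete Fourier transform (the kind of transform a lens performs on coherent light) sends each row's result back to its own output position. The function name `phase_multiplexed_matmul` and the specific phase encoding are assumptions. A computer still has to loop over the sums, whereas in the optical picture they arise from interference during a single propagation.

```python
import cmath


def phase_multiplexed_matmul(A, B):
    """Toy model (hypothetical): compute C = A @ B by superposing all rows of A
    in one complex field and separating them with a discrete Fourier transform.

    A is M x K and B is K x N, given as lists of lists of real numbers.
    This illustrates phase multiplexing in general; it is NOT the published
    optical scheme.
    """
    M, K, N = len(A), len(A[0]), len(B[0])
    if len(B) != K:
        raise ValueError("inner dimensions must match")

    # Step 1: build one complex field U[t][n]. Each product A[m][k] * B[k][n]
    # is tagged with the linear phase exp(2*pi*i*m*t/M) of its row m, so the
    # contributions of all M rows overlap in the same field.
    field = [
        [
            sum(
                A[m][k] * B[k][n] * cmath.exp(2j * cmath.pi * m * t / M)
                for m in range(M)
                for k in range(K)
            )
            for n in range(N)
        ]
        for t in range(M)
    ]

    # Step 2: a discrete Fourier transform over t (the job a lens does
    # optically) maps each phase tag m back to its own output row.
    return [
        [
            (sum(field[t][n] * cmath.exp(-2j * cmath.pi * m * t / M)
                 for t in range(M)) / M).real
            for n in range(N)
        ]
        for m in range(M)
    ]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = phase_multiplexed_matmul(A, B)
print([[round(x, 6) for x in row] for row in C])  # [[19.0, 22.0], [43.0, 50.0]]
```

The recovery works because the phase tags are orthogonal under the Fourier transform: summing exp(2πi(m′ − m)t/M) over t gives M when m′ = m and zero otherwise. Comparing the result with an ordinary product is the same kind of check as validating optical output against GPU-based matrix–matrix multiplication.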

An optical neural network

Notably, the entire tensor operation is fully parallel: a single shot generates all output values simultaneously. The physical prototype's results agreed closely with standard GPU-based matrix–matrix multiplication across a range of input matrix sizes. Finally, the researchers developed a GPU-compatible optical neural network framework to demonstrate direct optical deployment of different GPU-based neural network architectures, including convolutional neural networks and vision transformer networks.

“This will create a new generation of optical computing systems, significantly accelerating complex AI tasks across a myriad of fields,” said Zhang.