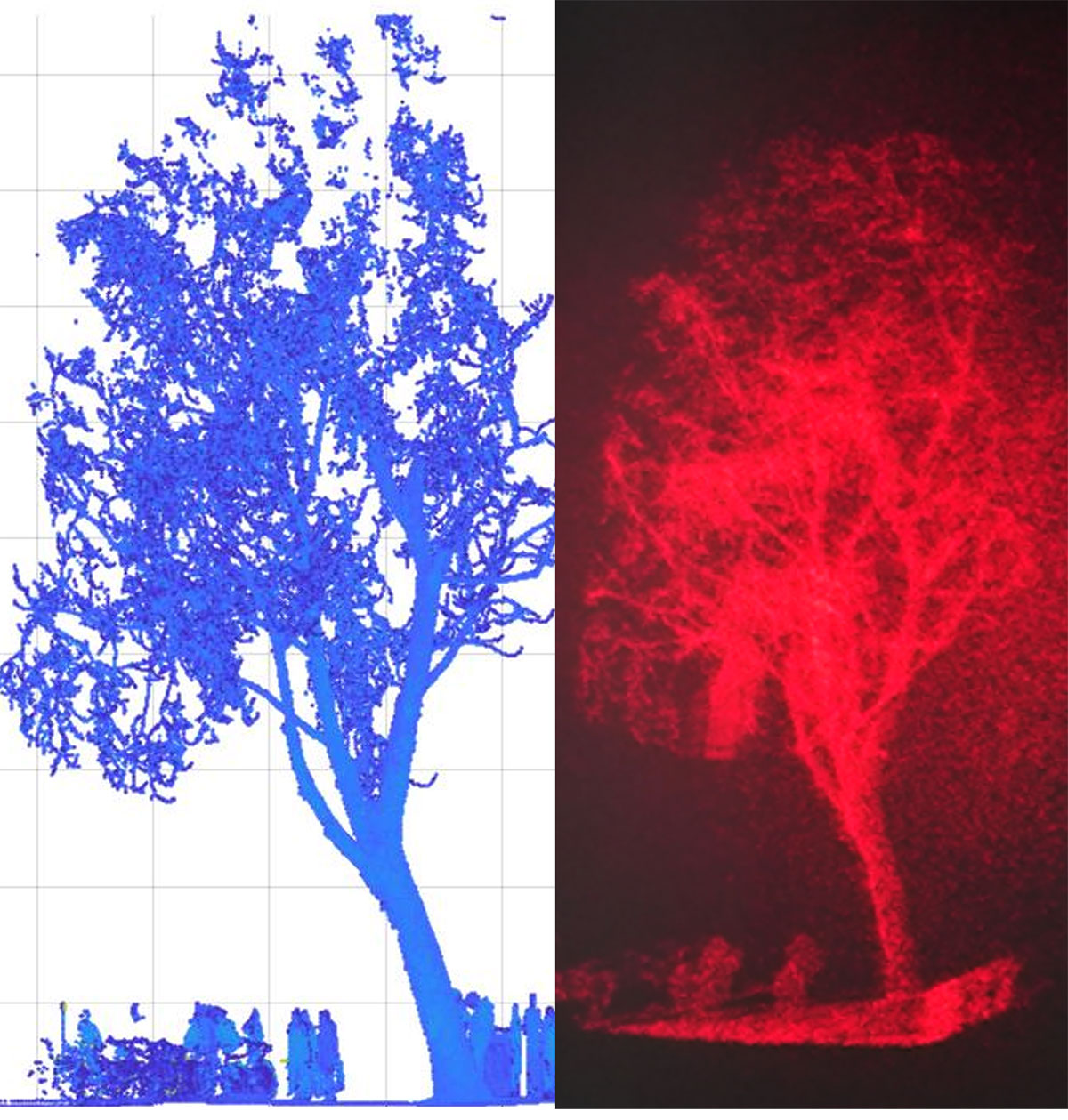

Researchers in the UK have developed a new head-up display for car windshields that could reduce accidents by representing objects on the road ahead, picked up by lidar sensing, as 3D holograms within the driver’s field of view. The image on the left shows the lidar point cloud; on the right is the reconstructed 3D view. [Image: Jana Skirnewskaja]

Displaying graphical information on windshields to aid drivers is nothing new. But such “head-up” devices tend to operate only in 2D and over small areas. That means people often have to switch focus between the display and the road ahead.

Now researchers in the UK reckon it should be possible for drivers to pick out hidden obstacles in front of them while remaining focused on the road (Adv. Optical Mater., doi: 10.1002/adom.202301772). They have shown how a combination of light detection and ranging (lidar) and computer-generated holography could place 3D representations of such objects at the right point in a person's field of view and with the correct apparent size—all in real time.

The quest for distraction-free AR

Such displays are examples of so-called augmented reality (AR). Rather than immersing the user in a completely artificial, computer-generated world, AR technology instead supplements sensory inputs from the real world with additional data. This approach has already been applied widely, in everything from education to surgery. But in the case of driving, it carries the risk that the overlaid information distracts the user from the main task in hand.

To get around that issue, a number of research groups have attempted to develop 3D head-up systems, some of which rely on holography. Conventional holograms are recorded by physically interfering an object beam with a reference beam. Those used in AR are instead generated computationally: the system takes light or other waves bounced off objects in the real world and calculates the interference pattern they would produce when combined with a reference beam. The trick is carrying out those calculations quickly and accurately enough to capture the ever-changing environment of a moving vehicle.
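As a rough illustration of that calculation, the Python sketch below computes the intensity a hologram plane would record when spherical waves from a few point sources interfere with a tilted plane reference wave. It is a textbook computer-generated-hologram calculation, not the researchers' code. The wavelength (the 632.8 nm helium-neon line), pixel pitch, reference angle, point positions and the helper names `object_field` and `hologram_intensity` are all assumptions made for illustration.

```python
import cmath
import math

WAVELENGTH = 632.8e-9          # assumed: He-Ne red line (m)
K = 2 * math.pi / WAVELENGTH   # wavenumber (rad/m)


def object_field(x, y, points):
    """Complex object wave at (x, y) on the hologram plane (z = 0).

    Each point (px, py, pz, amp) radiates a spherical wave amp * exp(i k r) / r.
    """
    total = 0j
    for px, py, pz, amp in points:
        r = math.sqrt((x - px) ** 2 + (y - py) ** 2 + pz ** 2)
        total += amp * cmath.exp(1j * K * r) / r
    return total


def hologram_intensity(n, pitch, points, ref_angle_rad, ref_amp=1.0):
    """Recorded intensity |O + R|**2 on an n x n grid with the given pixel pitch (m).

    R is a plane reference wave tilted by ref_angle_rad about the y axis.
    """
    pattern = []
    for j in range(n):
        y = (j - n / 2) * pitch
        row = []
        for i in range(n):
            x = (i - n / 2) * pitch
            ref = ref_amp * cmath.exp(1j * K * x * math.sin(ref_angle_rad))
            row.append(abs(object_field(x, y, points) + ref) ** 2)
        pattern.append(row)
    return pattern


# Two hypothetical points about 10 cm from the plane, weak enough to be comparable to R.
points = [(0.0, 0.0, 0.10, 0.05), (1e-4, 0.0, 0.12, 0.05)]
holo = hologram_intensity(n=32, pitch=8e-6, points=points, ref_angle_rad=math.radians(1.0))
```

The fringes in `holo` encode both the amplitude and the phase of the object wave; recomputing them fast enough for a moving scene is the hard part the article describes.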

Holograms via lidar

In the latest work, Jana Skirnewskaja and co-workers at Cambridge University, alongside colleagues from Oxford University and University College London, UK, have shown how such holograms can be created via lidar (which measures the distance to an object by bouncing laser light off it). Using clouds of well-chosen lidar data points, the researchers found that they could reconstruct 360-degree views of objects even when collecting relatively little data in single laser scans.
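Assuming a pulsed time-of-flight scanner (some terrestrial scanners instead measure the phase shift of a modulated beam), the range follows from halving the distance the pulse travels out and back, d = cΔt/2. A minimal sketch with a hypothetical helper `tof_range`, ignoring the slight slowing of light in air:

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s (exact by definition of the metre)


def tof_range(round_trip_s: float) -> float:
    """Distance (m) to a target from a pulse's round-trip time (s).

    The light travels out and back, so the one-way distance is c * t / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


print(f"{tof_range(200e-9):.2f} m")  # 29.98 m
```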


As Skirnewskaja explains, each point has its own spatial coordinates and intensity level. Given enough points, it is possible to reconstruct objects in 3D even if they are only partly visible to the moving vehicle.
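One way to picture such a point is as a small record holding a position and an intensity. The sketch below is illustrative rather than the team's data format: it converts a hypothetical scanner reading (range, azimuth, elevation) into Cartesian coordinates and measures how far a cloud spans along one axis, the kind of quantity that a fuller 3D view makes measurable. The angle convention and the names `LidarPoint`, `from_scan` and `extent` are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class LidarPoint:
    """One lidar return: Cartesian position (m) plus return intensity."""
    x: float
    y: float
    z: float
    intensity: float


def from_scan(range_m, azimuth_rad, elevation_rad, intensity):
    """Convert a (range, azimuth, elevation) reading into a LidarPoint.

    Assumed convention: scanner at the origin, azimuth measured in the
    horizontal x-y plane from +x, elevation measured up from that plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return LidarPoint(
        x=horizontal * math.cos(azimuth_rad),
        y=horizontal * math.sin(azimuth_rad),
        z=range_m * math.sin(elevation_rad),
        intensity=intensity,
    )


def extent(points, axis):
    """Span of a cloud along one axis ('x', 'y' or 'z'), e.g. an object's length."""
    values = [getattr(p, axis) for p in points]
    return max(values) - min(values)


cloud = [from_scan(10.0, 0.0, 0.0, 0.8), from_scan(4.0, 0.0, 0.0, 0.5)]
print(extent(cloud, "x"))  # 6.0
```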

The researchers used a terrestrial lidar scanner to record point clouds from a number of objects (including a tree, a truck and pedestrians standing next to bicycles) along Malet Street, a road near University College London. They then created each hologram in two steps. First, they calculated the superposition, within a virtual plane, of the light waves emanating from the points. Then they used the physics of diffraction to propagate that field from the virtual plane to the hologram plane.
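These two steps resemble the wavefront-recording-plane approach commonly used to speed up point-cloud holography, although the paper's exact algorithm may differ. The sketch below follows them literally on a tiny grid: step 1 sums spherical waves from each point onto a virtual plane, and step 2 carries that field to the hologram plane with a discrete Fresnel diffraction integral evaluated by brute-force summation. Keeping only the phase at the end assumes a phase-only display. The wavelength, pixel pitch, distances, sample points and function names are all assumptions.

```python
import cmath
import math

WAVELENGTH = 632.8e-9            # assumed He-Ne wavelength (m)
K = 2 * math.pi / WAVELENGTH     # wavenumber (rad/m)
PITCH = 8e-6                     # assumed pixel pitch of both planes (m)


def grid_coords(n):
    """Centred pixel coordinates (m) along one axis of an n-pixel plane."""
    return [(i - n / 2) * PITCH for i in range(n)]


def virtual_plane_field(points, n):
    """Step 1: superpose spherical waves from every point onto the virtual plane.

    Each point is (x, y, dz, amplitude), where dz is its distance from the plane.
    """
    coords = grid_coords(n)
    field = [[0j] * n for _ in range(n)]
    for px, py, dz, amp in points:
        for j, y in enumerate(coords):
            for i, x in enumerate(coords):
                r = math.sqrt((x - px) ** 2 + (y - py) ** 2 + dz ** 2)
                field[j][i] += amp * cmath.exp(1j * K * r) / r
    return field


def fresnel_propagate(field, distance):
    """Step 2: discrete Fresnel diffraction integral, evaluated by direct summation."""
    n = len(field)
    coords = grid_coords(n)
    prefactor = cmath.exp(1j * K * distance) / (1j * WAVELENGTH * distance) * PITCH ** 2
    out = [[0j] * n for _ in range(n)]
    for j2, y2 in enumerate(coords):
        for i2, x2 in enumerate(coords):
            acc = 0j
            for j1, y1 in enumerate(coords):
                for i1, x1 in enumerate(coords):
                    quad = (x2 - x1) ** 2 + (y2 - y1) ** 2
                    acc += field[j1][i1] * cmath.exp(1j * K * quad / (2 * distance))
            out[j2][i2] = prefactor * acc
    return out


def phase_hologram(points, n=8, distance=0.05):
    """Virtual-plane field, then propagation, then keep only the phase per pixel."""
    hologram_field = fresnel_propagate(virtual_plane_field(points, n), distance)
    return [[cmath.phase(v) for v in row] for row in hologram_field]


points = [(0.0, 0.0, 1e-3, 1.0), (2e-5, -1e-5, 1.5e-3, 0.5)]
holo = phase_hologram(points)
```

In a real wavefront-recording-plane implementation, the virtual plane sits close to the points so each point only touches a few nearby pixels, and the propagation step uses FFTs; that is where the speed-up comes from. This sketch skips both optimizations for readability.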

Reconstructing the scene

To reconstruct the observed scene from the hologram, Skirnewskaja and colleagues shone light from a helium-neon laser onto a liquid-crystal display, whose birefringent molecules align according to an arrangement of electric fields determined by the hologram’s interference pattern. They were able to place the reconstructed objects at the correct distance within the driver’s field of view using “virtual Fresnel lenses”: lines of computer code that mimic the properties of physical lenses, saving space within the vehicle because no real lens has to be fitted.
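A virtual lens of this kind can be sketched as the phase profile of a thin lens, φ(x, y) = −π(x² + y²)/(λf), wrapped into the range 0 to 2π so that a phase-only display can show it; the wrapping is what produces the concentric zones of a Fresnel lens. Adding this phase to a hologram shifts the distance at which the image is reconstructed. The 632.8 nm wavelength is the standard helium-neon line; the 8 µm pixel pitch, the focal length and the function names are assumptions, and the team's implementation may differ.

```python
import math

WAVELENGTH = 632.8e-9  # He-Ne laser wavelength (m)
PITCH = 8e-6           # assumed display pixel pitch (m)
TWO_PI = 2 * math.pi


def fresnel_lens_phase(n, focal_length):
    """Thin-lens phase -pi (x^2 + y^2) / (lambda f) on an n x n grid, wrapped to [0, 2*pi).

    A positive focal_length gives a converging lens; pixel (n//2, n//2) is the lens centre.
    """
    phase = []
    for j in range(n):
        y = (j - n // 2) * PITCH
        row = []
        for i in range(n):
            x = (i - n // 2) * PITCH
            row.append((-math.pi * (x * x + y * y) / (WAVELENGTH * focal_length)) % TWO_PI)
        phase.append(row)
    return phase


def add_lens(hologram_phase, lens_phase):
    """Combine a hologram's phase with the lens phase pixel by pixel, re-wrapping to [0, 2*pi)."""
    return [[(h + l) % TWO_PI for h, l in zip(hrow, lrow)]
            for hrow, lrow in zip(hologram_phase, lens_phase)]


lens = fresnel_lens_phase(16, focal_length=0.5)
shifted = add_lens([[0.0] * 16 for _ in range(16)], lens)
```

Changing `focal_length` moves the reconstructed image nearer or farther without any moving optics, which is the appeal of doing the lens in software.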

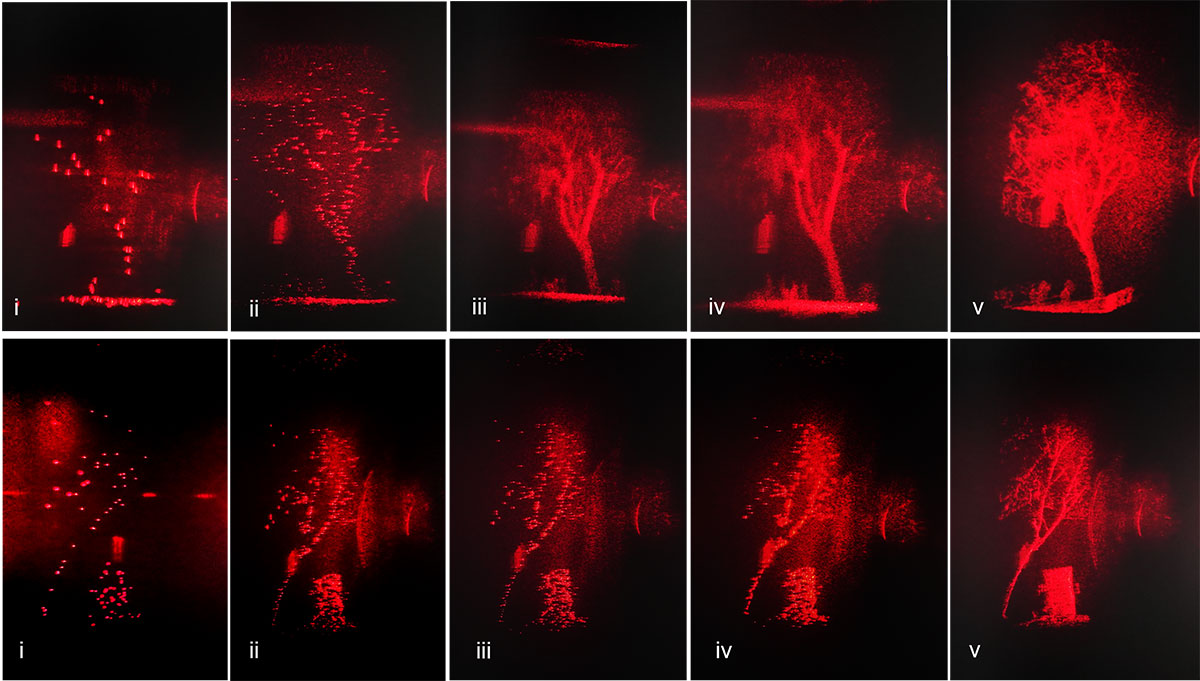

The researchers recorded the holograms using point clouds ranging in size from 10 points to 400,000. They processed the data on both standard central processing units (CPUs) and more powerful graphics processing units (GPUs), and found that the parallel processing made possible by GPUs let them produce higher-quality holographic images in relatively little extra time: processing 100 points took 12 seconds, whereas processing 1,000 points took just 26 seconds.
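The two quoted timings allow some back-of-the-envelope arithmetic, but not a firm conclusion about scaling: a power law t ∝ N^α and a fixed overhead plus a per-point cost both fit two measurements exactly. The snippet below computes both readings of the same numbers; neither is a claim about how the team's software actually behaves.

```python
import math

# Timings as quoted in the article (not re-measured here).
n_small, t_small = 100, 12.0     # points, seconds
n_large, t_large = 1_000, 26.0

# Reading 1: power law t = c * N**alpha, solved from the two measurements.
alpha = math.log(t_large / t_small) / math.log(n_large / n_small)

# Reading 2: straight line t = overhead + slope * N through the same two points.
slope = (t_large - t_small) / (n_large - n_small)   # seconds per extra point
overhead = t_small - slope * n_small                # seconds at N = 0

print(f"power law: t ~ N^{alpha:.2f}")                                # power law: t ~ N^0.34
print(f"linear: {overhead:.1f} s + {slope * 1000:.1f} ms per point")  # linear: 10.4 s + 15.6 ms per point
```

Either way, ten times as many points cost only about 2.2 times as long, which is the sense in which the extra detail came cheaply.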

A quick view first, more info later

The researchers believe their holographic system could enable both an initial, quick-response object view based on a small number of lidar points (left-hand examples) and a more thorough assessment some seconds later based on a larger number of points. [Image: Jana Skirnewskaja]

Given the need for speed in moving vehicles, the team suggests that a driver could first be alerted to the presence of potentially significant hidden objects by initially using lidar with only 25 points to create a silhouette of an object after about five seconds. A 1,000-point hologram could then be created 21 seconds later, yielding a 360° view of the object and allowing the driver to establish, for example, how long a given truck is.
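A staged alert like this might be organized by drawing progressively larger subsets from the same point cloud. The sketch below is hypothetical: the article says only that the points were “well-chosen,” so the even-stride sampling in `subsample` is an assumption, and the two ready times are simply the figures quoted above (about 5 s, then 21 s later).

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float, float]  # x, y, z, intensity


def subsample(cloud: Sequence[Point], k: int) -> List[Point]:
    """Pick k points spread evenly through the cloud (simple stride sampling)."""
    if k <= 0 or not cloud:
        return []
    if k >= len(cloud):
        return list(cloud)
    step = len(cloud) / k
    return [cloud[int(i * step)] for i in range(k)]


# Stages quoted in the article: a 25-point silhouette after about 5 s,
# then a 1,000-point hologram 21 s later (about 26 s after the start).
STAGES = [(25, 5.0), (1_000, 5.0 + 21.0)]


def progressive_plan(cloud: Sequence[Point]):
    """Return (ready_time_s, subset) pairs: coarse silhouette first, refined view second."""
    return [(ready, subsample(cloud, k)) for k, ready in STAGES]


cloud = [(float(i), 0.0, 0.0, 1.0) for i in range(5000)]
for ready, subset in progressive_plan(cloud):
    print(ready, len(subset))
```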

Skirnewskaja says that since carrying out the tests, she and her colleagues have refined the technology, showing how to project the hidden obstacles in full color with red-green-blue fiber lasers. The plan, she explains, is to test a display in a real car at some point in 2024, working with Google to tailor the system for people with different eye conditions. But she adds that further refinements will be needed before the displays are truly roadworthy, including combining the lidar scanner with cameras so as to pick out the writing on road signs as well as re-creating objects’ silhouettes.