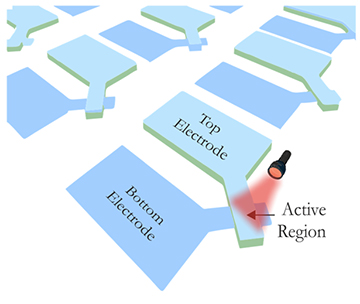

Researchers at the universities of Oxford and Exeter, UK, and IBM Research–Europe, Switzerland, have prototyped an optically controllable memristor device that they believe could be superior to standard memristors in AI tasks such as reinforcement learning. [Image: S.G. Sarwat et al. Nat. Commun. doi: 10.1038/s41467-022-29870-9 (2022); CC-BY 4.0]

In the quest to make machine-learning systems faster and more energy-efficient, one intriguing approach involves multilayer neural networks built from banks of so-called memristors. These are electronic devices that can both store a value, encoded as a change in conductance, and use that same value to carry out in-memory computations, potentially saving on computational overhead.

But such devices, and artificial neural networks made from them, still lie a fair distance from how biological neurons and brains actually work. That’s particularly true for multifactor mechanisms like reinforcement learning, the scheme by which humans (and machines) learn complex tasks through positive rewards and negative feedback.

Researchers in the United Kingdom and Switzerland have now created a proof-of-principle “optomemristor” that expands the functionality of electronic memristors by adding an optical signal to the mix (Nat. Commun., doi: 10.1038/s41467-022-29870-9). Doing so, the team reports, creates a device that’s closer in spirit to the workings of real biological neurons, and that could extend the capabilities for multifactor processing and reinforcement learning in memristor-based AI systems.

The approach “provides an additional control knob on these emerging devices, electronic memristors,” says Syed Ghazi Sarwat, the study’s lead author, who now works at IBM Research–Europe, Switzerland. “That additional knob is light: the optical stimuli.” As a demonstration of what such an extra control knob can enable, the team used its optomemristors to train an artificial rodent to navigate a maze. The team was also able to tackle a historically difficult problem in AI, the so-called Exclusive Or (XOR) logic gate, with a single artificial neuron.

Improving artificial neurons

One driver for memristor-based systems has been the need to reduce the energy requirements of deep-neural-net computing on CMOS-based computer systems. Such systems require that data be picked up from a separate storage location and shunted to a central processing unit (CPU) or graphics processing unit (GPU) for processing, a power-hungry step.


Memristor-based systems, by contrast, make it possible to execute computations where the data are stored, removing that extra computational overhead. Indeed, in 2020, a research team in China demonstrated memristor-based artificial-neural-net computing that reportedly had an energy efficiency two orders of magnitude greater than state-of-the-art GPUs.
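The in-memory trick rests on two basic circuit laws. In an idealized memristor crossbar, each device's conductance stores one matrix weight; applying voltages to the rows makes each device pass a current equal to conductance times voltage (Ohm's law), and the currents entering each column add up (Kirchhoff's current law), so the column currents form a matrix-vector product without the weights ever leaving the array. The Python sketch below assumes an ideal array (no wire resistance, sneak currents or device nonlinearity), and the function name is hypothetical; it is not the design used in the 2020 study or in the new work.

```python
def crossbar_mvm(conductances, voltages):
    """Column currents of an idealized memristor crossbar.

    conductances[i][j]: conductance of the device joining row i to column j.
    voltages[i]: voltage applied to row i (columns assumed held at 0 V).
    Each device contributes G * V (Ohm's law); currents sum per column
    (Kirchhoff's current law), giving a matrix-vector product.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need exactly one voltage per row")
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            currents[j] += conductances[i][j] * voltages[i]
    return currents


G_uS = [[1.0, 2.0], [3.0, 4.0]]  # stored weights as conductances, in microsiemens
V = [0.5, 1.0]                   # input vector as row voltages, in volts
print(crossbar_mvm(G_uS, V))     # → [3.5, 5.0] (microamperes)
```

In a real chip the multiply-accumulate happens physically and in parallel across the whole array; the nested loop here only stands in for that analog summation.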

Such systems using “in-memory computing” still involve networks comprising multiple layers of artificial neurons to handle complex learning and processing tasks. But in recent years, studies in neuroscience have also uncovered the rich learning potential embodied in single, individual biological neurons, which can integrate multiple signals and evolve physically in response to rewards and discouragements.

Building such multifactor capability directly into a neuromorphic device, the team behind the new work writes, would thus “be highly impactful.” And optics, it appears, could offer one way to do so.

Optical control knob

To test whether an optical control knob could add this multifactor capability, the team fabricated thin-film memristors in which a 15-nm-thick top electrode (silver or platinum) crosses a 60-nm-thick bottom silver electrode. Between the electrodes, at the crossing point, the team sandwiched a 36-nm-thick layer of insulating GeSe3.

In its role as a basic electronic memristor, the device’s resistance is controlled using an applied electric field, which causes conductive channels to form in the GeSe3 between the two electrodes. However, the team also found that it could tweak the resistance by shining light from a 637-nm, 1-mW laser on the device. The light, it seems, gives rise to a photocurrent within the device (much as in a solar cell) that affects its electrical properties.

Moreover, the researchers found that the design of the memristors, which, they write, “behave as resonating optical nano-cavities,” allowed the device’s wavelength response, absorption and other optical parameters to be tuned simply by changing the thicknesses of the various layers. And the combination of optical and electrical control, the team concludes, adds “a range of additional, advanced functionalities” related to switching dynamics and other features.

Maze runner

To test out these optically souped-up memristors, the team put them to work in several neuromorphic-computing tasks. One was a classic scenario taken from neurobiology: the training of a mouse to run through a maze and gain a tasty cheese reward at the end.

Reinforcement learning using optomemristors. Left: A representation of the biophysical mechanisms of the rodent’s navigation system. The directions are learned using reward-based three-factor plasticity in the optomemristive synapses. Right: Mapping the electrical signals to a global reward, and optical signals to local neural activity, allows an artificial neural network to learn to navigate the path to the cheese through the maze. [Image: S.G. Sarwat and T. Moraitis]

In the team’s artificial version, the optomemristors represent individual neuronal synapses encoding various potential decisions at each turn of the maze. The optical signal serves as an “eligibility flag,” which is tripped when the mouse makes a given turn in the maze, and which makes the neuron eligible for an update if it receives a subsequent electrical reward signal.

If the “rodent” finds the “cheese” in this artificial maze within a specific time window, an electrical reinforcement signal is sent out to all of the artificial neurons—but only those pre-activated by the light signal will be updated, thereby reinforcing the wiring that encodes the correct path to the cheese. (Lead author Sarwat likens this to the impact of the neurotransmitter dopamine, which provides a global reward signal to reinforce a specific behavior.)
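The eligibility-plus-reward scheme is a three-factor learning rule: a synapse changes only when a local flag (here, the optical signal) and a global signal (here, the electrical reward) coincide. The Python sketch below is a toy version built on our own assumptions: a hypothetical maze of four T-junctions, one pair of "synapses" per junction, epsilon-greedy turn choices, and a reward delivered only if the cheese is reached within a time window that allows no wrong turns. It illustrates the logic only and is not the network or device model used in the study.

```python
import random

# Hypothetical toy maze: a chain of T-junctions. At each junction the agent turns
# left (0) or right (1); a wrong turn leads to a dead end and costs one step.
CORRECT_TURNS = [1, 0, 0, 1]      # assumed route to the cheese
TIME_WINDOW = len(CORRECT_TURNS)  # reward only if the cheese is reached in this many steps
LEARNING_RATE = 0.5
EXPLORE = 0.2                     # probability of taking a random turn


def choose_turn(w, rng):
    """Epsilon-greedy choice between the two turns, breaking ties at random."""
    if rng.random() < EXPLORE:
        return rng.randrange(2)
    best = max(w)
    return rng.choice([a for a in (0, 1) if w[a] == best])


def run_trial(weights, rng):
    """Run the maze once; return the eligibility flags and the global reward."""
    eligibility = [[0, 0] for _ in CORRECT_TURNS]  # local "optical" flags, one per synapse
    steps, junction = 0, 0
    while junction < len(CORRECT_TURNS) and steps < TIME_WINDOW:
        turn = choose_turn(weights[junction], rng)
        eligibility[junction][turn] = 1  # flag the synapse that drove this turn
        steps += 1
        if turn == CORRECT_TURNS[junction]:
            junction += 1  # advance to the next junction
        # otherwise: dead end; the step is lost and the agent stays put
    found_cheese = junction == len(CORRECT_TURNS)
    reward = 1.0 if found_cheese else 0.0  # global "electrical" reinforcement signal
    return eligibility, reward


def train(n_trials=500, seed=0):
    rng = random.Random(seed)
    weights = [[0.0, 0.0] for _ in CORRECT_TURNS]  # synaptic weights (device conductances)
    for _ in range(n_trials):
        eligibility, reward = run_trial(weights, rng)
        # Three-factor update: learning rate x global reward x local eligibility flag.
        for j, flags in enumerate(eligibility):
            for a in (0, 1):
                weights[j][a] += LEARNING_RATE * reward * flags[a]
    return weights


weights = train()
learned_route = [max((0, 1), key=lambda a: w[a]) for w in weights]
print(learned_route)  # → [1, 0, 0, 1]
```

Because this time window allows no wrong turns, only correctly used synapses are ever flagged on a rewarded trial, so the wrong-turn weights stay at zero. Widening `TIME_WINDOW` would let some mistakes be reinforced too, which is closer to the credit-assignment problem a real animal faces.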

A single-neuron XOR gate

In another display of the device’s capabilities, the team used a single optomemristor neuron to implement an XOR logic gate, which outputs 1 when exactly one of its two inputs is 1. That’s a problem of considerable resonance in the history of AI, because XOR is not linearly separable: no single straight line in the plane of the two inputs can divide the cases that should output 1 from those that should output 0. It was the inability of early artificial neurons of the late 1950s, so-called perceptrons, to solve the XOR problem that tripped up the artificial-intelligence field, leading to the “AI winter” that lasted from the 1970s to the 1990s. The AI winter only turned to spring again with the development of multilayer, deep neural networks, in which a hidden layer splits XOR into simpler, linearly separable pieces that an output neuron then combines.
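The perceptron's limitation is easy to check numerically. A perceptron fires when a weighted sum of its inputs plus a bias exceeds zero, so its decision boundary is a straight line, and no such line separates XOR's outputs. The Python sketch below applies the classic perceptron learning rule to AND, which is linearly separable, and to XOR; the function names are illustrative.

```python
# The four input patterns of a two-input Boolean gate.
INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]


def predict(w, x):
    """Linear threshold unit: fire (1) if w1*x1 + w2*x2 + bias > 0."""
    s = w[0] * x[0] + w[1] * x[1] + w[2]
    return 1 if s > 0 else 0


def accuracy(w, targets):
    """Fraction of the four patterns classified correctly."""
    return sum(predict(w, x) == t for x, t in zip(INPUTS, targets)) / len(INPUTS)


def train_perceptron(targets, epochs=100, lr=1.0):
    """Classic perceptron learning rule; returns final weights and best accuracy seen."""
    w = [0.0, 0.0, 0.0]  # [w1, w2, bias]
    best = 0.0
    for _ in range(epochs):
        for x, t in zip(INPUTS, targets):
            err = t - predict(w, x)
            w[0] += lr * err * x[0]
            w[1] += lr * err * x[1]
            w[2] += lr * err  # the bias sees a constant input of 1
        best = max(best, accuracy(w, targets))
    return w, best


AND = [0, 0, 0, 1]
XOR = [0, 1, 1, 0]
_, best_and = train_perceptron(AND)
_, best_xor = train_perceptron(XOR)
print("AND best accuracy:", best_and)  # → 1.0
print("XOR best accuracy:", best_xor)  # never 1.0; at most 0.75
```

The AND run converges within a few epochs, while the XOR run can never classify all four patterns correctly, however long it trains: three out of four is the best any straight line can do.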

According to the team behind the new work, however, the ability of its optomemristors to integrate both electrical and optical signals means that they can implement an XOR gate in a single artificial neuron, rather than requiring a multilayer artificial neural net. It’s recently been shown that individual biological neurons, too, can implement XOR, through nonlinear operations at the neuronal structures called dendrites—so-called “dendritic computing.”
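One simple way a single unit can escape the straight-line limit is to give it a non-monotonic response: it fires for an intermediate total input but not for a larger one. The Python sketch below assumes such a "bump" activation with illustrative thresholds of 0.5 and 1.5 and hypothetical function names; it shows the principle only and is not a model of how the optomemristor combines its electrical and optical inputs.

```python
def bump(s, low=0.5, high=1.5):
    """Non-monotonic activation: output 1 only when low < s < high."""
    return 1 if low < s < high else 0


def single_neuron_xor(x1, x2, w1=1.0, w2=1.0):
    """One neuron: weighted sum of two binary inputs passed through the bump."""
    return bump(w1 * x1 + w2 * x2)


for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, "->", single_neuron_xor(x1, x2))
# prints, one per line: 0 0 -> 0, 0 1 -> 1, 1 0 -> 1, 1 1 -> 0
```

With both inputs on, the summed input of 2 overshoots the bump, so the neuron stays silent; that single non-monotonic step does the work a hidden layer does in a multilayer network.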

A better model of real neurons?

Sarwat—who performed part of this work while a grad student at the University of Oxford, UK—stresses that it is “a proof of principle demonstration of what can be done by adding more functionality into a single device … It’s not system-level work; we are very far from that.” Nonetheless, he has a few ideas of what such devices might someday enable.


One interesting possibility, he notes, is that the optomemristors, by providing a better model of how single neurons and reward systems actually operate in the brain, could offer a platform for implementing some computational models of neuroscience. That, he suggests, could help enable a better understanding of how the brain really works.

“To be able to get closer to how the brain functions, one needs better models of the neurons,” Sarwat says. “One can take a brute-force approach, which is what is happening right now. Or one can devise hardware that is very near to how a biological neuron would function.”

Sarwat also sees potential for these devices to advance solutions to complex problems in machine learning and reinforcement learning in particular. Here, however, he stresses that the team has a lot of work to do in scaling the devices before getting to a system that’s ready for prime time. He notes, for example, that the size of the memristors, at “a few tens of nanometers,” is far below the diffraction limit of light, and that combining the optical system and the electronic one on a single chip is “another challenge we are looking into.”
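For a rough sense of the mismatch, assume the Abbe estimate for the smallest spot a lens can focus light to, with the 637-nm laser wavelength used in this work and a numerical aperture of about 1 (roughly the ceiling for focusing in air):

```latex
d_{\min} \approx \frac{\lambda}{2\,\mathrm{NA}}
         = \frac{637\ \text{nm}}{2 \times 1.0}
         \approx 320\ \text{nm}
```

Under those assumptions the focused spot is on the order of ten times wider than a device a few tens of nanometers across, so a conventionally focused beam would tend to illuminate neighboring devices in a dense array as well.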

Still, he believes the work is well worth doing. “What we have shown here are two examples” of how the optomemristors could work in AI systems, Sarwat told OPN. “But there are multiple machine-learning algorithms that can be made more efficient. And we’re looking into it.”