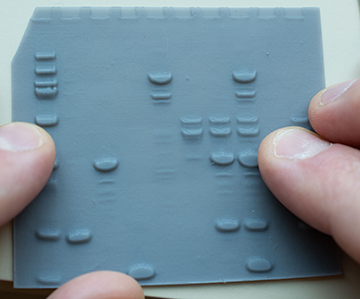

A 3D-printed lithophane of a protein-separation gel. Scientists who are blind can interpret the object by touch, while sighted persons see an ordinary visual image when it is backlit. [Image: E. Shaw]

In the pursuit of science, the need to interact and engage with graphs, images and other visual data puts blind and low-vision persons at a distinct disadvantage. Now, a team led by Bryan Shaw, a chemistry professor at Baylor University, USA, has combined light-driven 3D printing and optical principles to come up with what his team describes as a “universally visualizable data format” accessible to both blind and sighted scientists (Sci. Adv., doi: 10.1126/sciadv.abq2640).

The team’s approach relies on an art form, lithophane, that has been around for at least 200 years. And, because of that art form’s special properties, the technique can turn modern data—ranging from protein-separation gel tracks in the chemistry lab to high-resolution photomicrographs of scales on an insect’s wing—into graphics that scientists who are blind can read by touch, but that glow with quasi-video resolution when illuminated from behind.

The work, Shaw noted in a press release accompanying the research, shows that these thin, translucent lithophanes, reimagined as a vehicle for scientific information, “can make all of this imagery accessible to everyone regardless of eyesight. As we like to say, ‘data for all.’”

An old technique

A front-lit lithophane (left) shows a pattern of etchings in the thin porcelain or plastic surface. When backlit (right), differential scattering and transport of light through thick and thin areas allow a detailed image to emerge. [Image: E. Wetzig/Wikimedia Commons, CC-SA 3.0]

Lithophane became popular in western Europe in the 1820s, though its roots may stretch back nearly a thousand years to China. In a classic lithophane, a piece of thin, translucent porcelain or plastic is carefully inscribed with a 3D pattern or picture. When illuminated from the front, in ambient light, the pattern is only vaguely suggested. But when backlit, the differential scattering and transport of light through relatively thin or thicker parts of the porcelain or plastic turn the lithophane into a glowing, photorealistic grayscale image.

In recent years, the availability of commercial 3D printers has led to something of a revival for the lithophane technique. The internet even offers freely available software that can convert a grayscale image into a so-called heightmap—a sort of topographic map of the image brightness—that can then be fed into a computer numerical control (CNC) milling machine or a 3D printer to create a functional lithophane.
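As a rough illustration of the heightmap idea, the sketch below maps each pixel of an 8-bit grayscale image to a material thickness, so that dark pixels become thick (and block more backlight) while bright pixels become thin. It is not the software the article refers to: the function name `brightness_to_thickness` and the 0.5 mm and 2.5 mm limits are hypothetical values chosen for the example, and a real tool would go on to turn the heightmap into a printable mesh.

```python
def brightness_to_thickness(image, min_mm=0.5, max_mm=2.5):
    """Map 8-bit grayscale pixels (0 = black, 255 = white) to thickness in mm.

    Thickness limits are illustrative assumptions, not values from the study.
    """
    if not 0 < min_mm < max_mm:
        raise ValueError("expected 0 < min_mm < max_mm")
    span = max_mm - min_mm
    # Darker pixels get more material, so less light passes when backlit.
    return [[max_mm - (pixel / 255) * span for pixel in row] for row in image]


# A single row of pixels running from black through mid-gray to white.
gel_band = [[0, 128, 255]]
heightmap = brightness_to_thickness(gel_band)
```

Here the black pixel receives the full 2.5 mm thickness and the white pixel the 0.5 mm minimum, with mid-gray falling in between. The same relief that controls brightness under backlighting is what a reader's fingertips would trace.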

Bringing lithophanes to science

Shaw’s team thought the combination of the lithophane technique and 3D printing might allow creation of scientific images or data objects that could be decoded simultaneously by both blind and sighted persons. So the researchers designed a set of experiments to put that idea to the test.

The effort builds on a long-standing interest in the lab in improving the accessibility of science for visually impaired persons—particularly children and young adults. These efforts have sometimes taken unusual forms. In a study published last year, for example, Shaw’s team used injection molding to create bite-sized models of protein molecules—analogous to “gummy bears”—that students could put in their mouths to interpret the protein structure by mouth feel. Because of the surprisingly fine tactile sensing of the human mouth, students in the experiment—whether blind or sighted—were able to recognize the correct structure solely by mouth feel with an average accuracy of more than 85%.

While injection molding was used to create the gummy-bear protein molecules, the team on the lithophane project turned to a form of 3D printing: stereolithography. In this approach, a UV laser or patterned light is applied in a preprogrammed 2D design to a vat of photopolymer resin. The light causes the molecules in the resin to cross-link, forming polymers and leading to a thin, solidified layer matching the desired pattern. Multiple such 2D rounds build up the full 3D solid, layer by layer.

Shaw’s team executed its lithophane study using a commercially available 3D printer from the company Formlabs, costing less than US$5,000. In this case, stereolithography “works great because it gives us reproducible high-res prints in different types of materials,” Shaw told OPN. “And anybody can do it.”

A test with real data

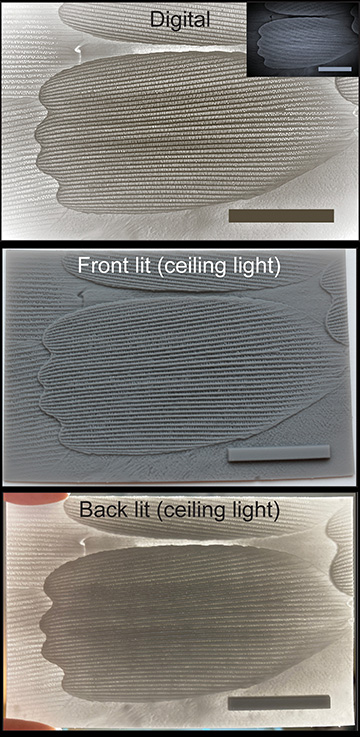

One of the scientific images used in the experiment was a scanning electron micrograph (top) of a chitin scale from a butterfly’s wing. The lithophane offers a tactile rendering (middle) that re-emerges as a monochrome image when backlit (bottom). Scale bar: 40 µm. [Image: J.C. Koone et al., Sci. Adv., doi: 10.1126/sciadv.abq2640 (2022); CC-NC 4.0]

To test the use of lithophanes as a vehicle for scientific information for both blind and sighted persons, the team began by assembling digital images of five sample data types: SDS–polyacrylamide gel electrophoresis data tracks, common in biochemistry studies; an electron micrograph of a chitin scale from a butterfly; a mass spectrum of a protein molecule; a UV–visible spectrum of another protein; and a textbook illustration of a protein-topology diagram.

The team took the five data images and fed them into an open-source image-to-lithophane routine, and then used the resulting stereolithography files to print 3D lithophanes of the data. In these tests, each lithophane was approximately the size of a typical ID card or credit card.

With lithophanes of the scientific data literally in hand, a cohort of 106 sighted persons with blindfolds and five blind persons (four of them with a Ph.D. in chemistry) were tasked with interpreting the data by touch. Meanwhile, a separate cohort of 106 sighted persons attempted to interpret the lithophanes in back-lit form, as monochrome visible images.

The team found that the average test accuracy for blind individuals, interpreting the data by touch, was nearly 97%—compared with an accuracy of just over 92% for sighted individuals interpreting the back-lit lithophanes visually. In tactile tests by blindfolded, sighted individuals, test accuracy was around 80%.

Opportunities beyond science

The team also benchmarked its technique against another common method for converting visual images into tactile renderings—“Picture in a Flash,” which uses specialized paper that selectively swells in printed areas when exposed to heat. The researchers found that in rendering the scientific data samples, the swelled-paper method lost considerable detail, which was retained by the high-resolution stereolithography used for the lithophanes.

Not surprisingly given the emphasis of Shaw’s lab, most of the data types the team tested have a strong chemistry/biochemistry vibe. However, Shaw thinks the technique should be exportable to any subject, ranging even beyond science into art, history and other areas where images and graphics are used. One potential disadvantage of the lithophanes is that they are suitable only for monochrome data. But the team believes that some kinds of colored data, such as heatmaps and 2D color plots, could also translate into combination tactile/visible lithophanes, if the colored data can be converted into a monochromatic grayscale image of increasing brightness.
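One way such a conversion could work, sketched here under stated assumptions rather than taken from the study, is to invert a known colormap: each colored pixel is matched to the nearest color on the map, and its position along the map becomes the gray level. Simply averaging the color channels would not do, since many colormaps do not increase steadily in brightness. The function names and the blue-green-red colormap below are hypothetical.

```python
def sample_colormap(anchors, steps=256):
    """Linearly interpolate between RGB anchor colors, ordered low to high."""
    if len(anchors) < 2 or steps < 2:
        raise ValueError("need at least two anchors and two steps")
    segments = len(anchors) - 1
    samples = []
    for i in range(steps):
        position = i / (steps - 1) * segments
        k = min(int(position), segments - 1)
        t = position - k
        low, high = anchors[k], anchors[k + 1]
        samples.append(tuple(low[c] + t * (high[c] - low[c]) for c in range(3)))
    return samples


def color_to_gray(pixel, samples):
    """Return the 0-255 gray level of the colormap sample nearest an RGB pixel."""
    def distance(i):
        return sum((pixel[c] - samples[i][c]) ** 2 for c in range(3))

    best = min(range(len(samples)), key=distance)
    return round(255 * best / (len(samples) - 1))


# Hypothetical heatmap colormap: blue (low) through green to red (high).
samples = sample_colormap([(0, 0, 255), (0, 255, 0), (255, 0, 0)])
low = color_to_gray((0, 0, 255), samples)
mid = color_to_gray((0, 255, 0), samples)
high = color_to_gray((255, 0, 0), samples)
```

With this mapping, low data values come out dark and high values bright, so the grayscale result increases in brightness with the underlying data. The approach assumes the colormap used to draw the original figure is known.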

Bringing collaborators together

To Shaw, one potentially rewarding aspect of his team’s use of lithophanes for data is the chance to bring sighted and blind collaborators closer together in the day-to-day practice of science.

“The neat thing about the tactile graphics that light up with picture-perfect resolution is that everything I can see with my eyes, another person who is blind can feel with their fingers,” Shaw observed in a press release accompanying the research. “So it makes all of the high-resolution imagery and data accessible and shareable, regardless of eyesight. We can sit around with anyone, blind or sighted, and talk about the exact same piece of data or image.”