![]()

An image of a tree based on lidar point-cloud data (left), and the same image converted to a hologram (right). [Image: Jana Skirnewskaja]

A team of researchers in the United Kingdom has developed a prototype head-up display (HUD) for automobiles that would pipe in point-cloud data from the car’s lidar system, convert the data to ultra-high-definition 3D holographic images, and beam the images directly to the driver’s eye box, creating an augmented-reality (AR) experience (Opt. Express, doi: 10.1364/OE.420740). The researchers believe that, by selectively embedding AR elements into the driver’s field of view, the panoramic holographic projection scheme they’ve developed could ultimately enhance auto safety, by alerting drivers to otherwise hidden obstacles in the road ahead.

From ghost images to full AR

Head-up displays in automobiles—in which the driver can access vehicle control information without taking her eyes off the road—are actually not a new thing. The first such in-auto system showed up in the Oldsmobile Cutlass Supreme, by General Motors, in 1988. That system was a simple monochrome display that was reflected off of the car’s windshield. Since then, advances in technology have added color and boosted image quality for this automotive add-on. Yet even today, such displays mainly provide “ghost” images hovering immediately off the windshield, and showing information such as speed, fuel levels and the song currently streaming through the car’s speakers.

Creating augmented reality for HUDs is a tougher proposition. For AR, elements of a continually changing external environment need to project into the driver’s eye box, the roughly 15×15-cm area immediately in front of the driver’s eyes that allows a full view of the scene. The technology needs to be multifocal, providing a realistic view of objects at various depths and distances—a far cry from the static positioning of speed information on a windshield. And, of course, the system needs to actually be useful, providing information (for example) about possible road hazards without overwhelming the driver with unnecessary AR clutter.

Marrying lidar and holograms

As a result, many see holographic displays, which can project panoramic 3D images of scene elements at various distances to the eye box for the lens of the driver’s eye to process, as the future of automotive HUDs. The research team behind the new work, including scientists from the University of Cambridge, the University of Oxford and University College London, has now figured out a way to tie such a panoramic holographic display system to data from lidar, the laser-ranging technology increasingly being developed for autonomous vehicles.

The system begins by reading lidar point-cloud data—the millions of laser-pulse returns used to build up a 3D model of the scene ahead—into a custom-written computer algorithm designed to pick out specific target objects in the scene. The lidar data corresponding to the target objects are then fed into another algorithm to build computer-generated diffraction patterns that can be used to create holographic images.
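To make the two-step pipeline concrete, here is a minimal sketch in Python. It is not the team's algorithm, which the article does not detail. It substitutes a simple bounding-box filter for the target-picking step and a textbook point-source superposition for the hologram calculation. The function names, the 8-µm pixel pitch and the tiny grid size are all assumptions chosen for illustration; only the 633-nm wavelength comes from the setup described in this article.

```python
import cmath
import math

WAVELENGTH_M = 633e-9  # helium-neon laser wavelength quoted in the article


def select_target(points, x_range, y_range, z_range):
    """Keep only points inside an axis-aligned box.

    Hypothetical stand-in for the target-picking step; the real
    algorithm is not described in the article.
    """
    return [
        (x, y, z)
        for (x, y, z) in points
        if x_range[0] <= x <= x_range[1]
        and y_range[0] <= y <= y_range[1]
        and z_range[0] <= z <= z_range[1]
    ]


def phase_hologram(points, n_pixels, pitch_m, wavelength_m=WAVELENGTH_M):
    """Compute a phase-only diffraction pattern for a set of 3D points.

    Each point is treated as a source of a spherical wave. The waves are
    summed at every pixel of a square grid in the z = 0 plane, and only
    the phase of the sum is kept (wrapped to the range 0..2*pi), as a
    phase-modulating device would need.
    """
    k = 2.0 * math.pi / wavelength_m  # wavenumber
    half = (n_pixels - 1) / 2.0       # centers the grid on the optical axis
    grid = []
    for row in range(n_pixels):
        v = (row - half) * pitch_m
        line = []
        for col in range(n_pixels):
            u = (col - half) * pitch_m
            field = 0j
            for (x, y, z) in points:
                r = math.sqrt((u - x) ** 2 + (v - y) ** 2 + z ** 2)
                field += cmath.exp(1j * k * r) / r
            line.append(cmath.phase(field) % (2.0 * math.pi))
        grid.append(line)
    return grid


# Toy scene: three lidar returns, coordinates in meters (made-up values).
cloud = [(0.0, 0.0, 0.5), (5.0, 0.0, 0.5), (0.0, 0.0, 10.0)]
target = select_target(cloud, (-1, 1), (-1, 1), (0, 1))
pattern = phase_hologram(target, n_pixels=8, pitch_m=8e-6)
```

The direct sum costs one operation per point per pixel, so it is only practical for toy inputs like this one. Real point clouds and ultra-high-definition modulators would call for a much faster method.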

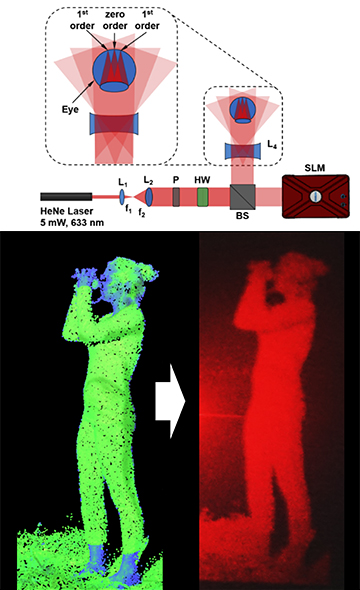

The research team’s setup (top) used a phase-modulating spatial light modulator to convert diffraction data from a lidar point-cloud model (bottom left) into a 3D hologram (right) that could be sent to the driver’s eye. [Image: J. Skirnewskaja et al., Opt. Express 29, 13681 (2021) / University of Cambridge]

The setup to build those 3D images of objects from the diffraction data, and then project the images to the user’s eye box, starts with a 633-nm, 5-mW helium–neon laser. The laser’s beam is sent through a series of lenses, a polarizer, a half-wave plate and a non-polarizing beam splitter to an ultra-high-definition, phase-modulating spatial light modulator (SLM). The SLM modulates the laser light with the diffraction data extracted from the lidar point-cloud objects to create computer-generated holograms of the objects, which are then projected out of the SLM and routed by the beam splitter toward the user’s eye.

Alerting drivers to hidden hazards

The system—not yet tested in an actual automobile—was used with point-cloud data from a lidar scan of Malet Street, a busy London thoroughfare. The team was able to demonstrate the reconstruction and projection of 2D images on a screen, and of 3D in-eye images, using the eye’s lens as the projector. The team says that the setup allows virtual holographic objects to be aligned with objects in the real-world view, and at different depths, thereby creating the potential for AR in an automotive head-up display.

While the notion of AR in a vehicle is cool in itself, the researchers believe that their prototype system, if fully developed, could enhance auto safety. For example, armed with sufficiently dense lidar data and drawing the right elements from it, the system could provide images that alert the driver to street signs or potential hazards or obstacles in the road ahead that might otherwise be hidden from view.

Right now, the team is working on miniaturizing the optical components to fit into a vehicle, and then taking the imaging system on an actual road test. The researchers also hope to build new computer routines to provide multiple layers of different objects that can be arranged at different places in the driver’s vision space. The ultimate goal, according to Jana Skirnewskaja—a Cambridge Ph.D. candidate and the study’s first author—is to provide panoramic, real-time holographic projections that “act to alert the driver but are not a distraction.”