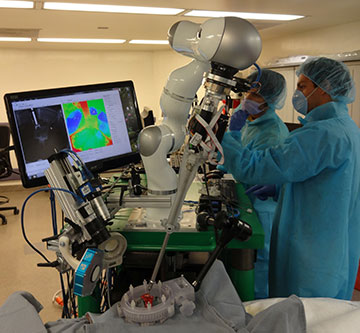

First author Azad Shademan and coauthor Ryan Decker, of the Children’s National Health System, during supervised autonomous in vivo bowel anastomosis performed by the Smart Tissue Autonomous Robot (STAR). [Image: Axel Krieger]

In a triumph of cutting-edge optics, machine vision and other technologies, a team from the Children’s National Health System and Johns Hopkins University, USA, has built a robot reportedly capable of performing autonomous surgery on soft tissue (Sci. Transl. Med., doi: 10.1126/scitranslmed.aad9398). The machine, christened the Smart Tissue Autonomous Robot (STAR), undertook complex surgery to repair intestinal tissues in living pigs—with results that scored better on multiple metrics than similar operations by expert human surgeons.

Soft-tissue challenges

Robot-assisted surgery, with robots under human manual control, has found increasing use in a variety of settings. But fully autonomous robotic surgery, particularly for soft tissues, has proved a far tougher problem. That’s because soft tissues tend to deform in real time as surgery proceeds, in ways that have represented a challenge for sensors and data processing in dynamic surgical environments. And programming the human surgeon’s hand-eye coordination—as well as implementing the dexterous knot-tying and other motions that are common currency in the operating room—constitutes a significant engineering challenge.

To meet these challenges, and create a machine capable of autonomous surgery on soft tissues, the research team, led by Peter Kim of the Children’s National Health System, began with an existing, commercially available robot platform—and tricked it out with some impressive sensor technology. The core of the STAR system is a robotic arm, from the KUKA Robotics Corporation, that has seven degrees of freedom in movement. The team added a sophisticated articulating suturing tool that essentially provided another degree of freedom, fitting out the robot to perform suturing operations in cramped spaces—as well as force sensors for optimizing suturing tension as the surgery proceeds in real time.

3-D visual tracking

The most sensitive suturing engine in the world, however, would be useless if the robot couldn’t see what it was doing. For that side of the equation, the team’s STAR system combined two optical systems. The first was a near-infrared (NIR) camera, leveraging night-vision technology, that was capable of capturing 2-D images at 2048×1088-pixel resolution and at 30 frames per second (fps).

The second was a Raytrix R12 plenoptic, or light-field, camera, adapted specifically for the STAR system and capable of capturing virtual 3-D images at a 10-fps rate and a 2008×1508 resolution. (Light-field cameras commonly use an array of microlenses to capture detailed information about the complete 4-D light field, which can then be computationally processed for detailed 3-D imaging.)

Fixing the “garden hose”

With the platform fully developed, the team programmed into the robot a consensus of the best surgical practices for the operation in question: anastomosis, or suturing to repair a rupture in intestinal tissue—“like trying to put together a garden hose that has been cut,” in the words of team member Ryan Decker. They then set the robot to work. For the study, STAR operated in a “supervised autonomous” mode, with the robot conducting the surgery, overseen by the team members.

The surgery, performed on pigs, began by marking the intestinal tissue with biocompatible NIR-fluorescent markers at multiple points; an LED ring light mounted on the robot was used to excite the markers to fluorescence. The image of the fluorescent markers captured by the 2-D NIR camera was then projected onto a 3-D point cloud derived from data from the plenoptic camera, allowing a suture plan to be computed and mapped to visual data.
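As a rough illustration of that projection step, the sketch below lifts 2-D marker pixels into 3-D by projecting every point of the cloud into the NIR image and picking the point that lands closest to each marker. It is not the team's implementation: the pinhole camera model, the intrinsic matrix `K`, the function name `lift_markers_to_3d`, the sample values and the assumption that the point cloud is already expressed in the NIR camera's coordinate frame are all hypothetical.

```python
import numpy as np

def lift_markers_to_3d(markers_px, cloud_xyz, K):
    """Return, for each 2-D marker pixel, the cloud point whose projection is closest to it.

    markers_px: (M, 2) pixel coordinates of markers in the NIR image.
    cloud_xyz:  (N, 3) points, assumed to be in the NIR camera frame (metres).
    K:          (3, 3) assumed pinhole intrinsic matrix of the NIR camera.
    """
    # Keep only points in front of the camera.
    cloud = cloud_xyz[cloud_xyz[:, 2] > 0]
    # Pinhole projection: u = fx * x / z + cx, v = fy * y / z + cy.
    uvw = cloud @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]
    # Pixel distance from every marker to every projected point, shape (M, N).
    dist = np.linalg.norm(markers_px[:, None, :] - uv[None, :, :], axis=2)
    return cloud[dist.argmin(axis=1)]

# Hypothetical intrinsics for a 2048x1088 image and a three-point cloud.
K = np.array([[1000.0, 0.0, 1024.0],
              [0.0, 1000.0, 544.0],
              [0.0, 0.0, 1.0]])
cloud = np.array([[0.00, 0.00, 0.20],
                  [0.01, 0.00, 0.20],
                  [0.00, 0.01, 0.21]])
markers = np.array([[1074.0, 544.0]])  # pixel where the second point projects
marker_xyz = lift_markers_to_3d(markers, cloud, K)
```

A brute-force nearest-pixel search like this is only practical for small clouds; a real system would need calibrated extrinsics between the two cameras and a faster lookup.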

As the surgery proceeded in real time, the faster frame rate of the NIR camera, tracking the fluorescent markers, was used to signal dynamic changes. Those changes were then registered to the changing point cloud repeatedly computed at a somewhat slower frame rate by the plenoptic camera, to provide sufficient 3-D depth for STAR to work with.
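The frame-rate mismatch can be made concrete with a little arithmetic. With the NIR camera at 30 fps and the plenoptic camera at 10 fps, roughly three NIR frames arrive for every new point cloud. The sketch below pairs each NIR frame with the most recent point cloud; the function name is hypothetical, and it assumes both streams start together and that each cloud is available the instant it is captured, which a real pipeline would not guarantee.

```python
def latest_cloud_index(nir_frame: int, nir_fps: int = 30, cloud_fps: int = 10) -> int:
    """Index of the most recent point-cloud frame when NIR frame `nir_frame` arrives.

    Integer arithmetic avoids floating-point rounding at frame boundaries.
    """
    return (nir_frame * cloud_fps) // nir_fps

# Pair the first seven NIR frames with point-cloud frames.
pairing = [latest_cloud_index(i) for i in range(7)]
# pairing == [0, 0, 0, 1, 1, 1, 2]
```

Between cloud updates, the 2-D marker positions keep changing while the 3-D data they are registered to stays fixed, which is why the faster camera is the one used to signal motion.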

Superior to human surgeons?

The result was a continually changing 3-D view that the robot could use to respond to position changes in the soft tissue as the suturing module undertook its intricate procedure. That enhanced vision, which team leader Kim says allows the robot to see “more than what a human being could see,” helped produce results superior to those of human surgeons, according to the researchers.

STAR was unquestionably slower than a talented human surgeon doing the same procedure. In metrics such as consistency and average spacing of sutures, the pressure tolerance of the sutures, and the number of mistakes requiring real-time correction, however, the autonomous robot clearly outstripped the results of both expert surgeons and previous robot-assisted surgery. All of the animals survived the surgery without complications.

The team has, according to Kim, filed applications for “six or seven patents” around the technology. And, while he doesn’t envision completely autonomous, unsupervised robotic surgery any time soon, he does see a bright future for STAR as a supervised surgical tool. One of the big benefits, he believes, is that it provides potentially global access to the “best surgeon” and best surgical techniques for a given procedure. “If you’re able to program something like that into a machine,” says Kim, “it could potentially be available to anyone anywhere in the world.”