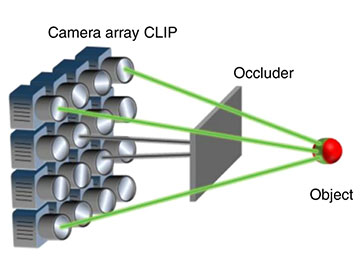

CLIP (compact light-field photography) can see through severe occlusions. Each camera only records partial information of the scene. [Image: X. Feng et al., Nat. Commun. doi: 10.1038/s41467-022-31087-9 (2022); CC BY 4.0]

Researchers in China and the United States have created a new type of 3D camera system inspired by the phenomenal sensory abilities of flies and bats. The framework behind the system—called compact light-field photography (CLIP)—employs a camera array that mimics a fly’s compound eye, along with echolocation-like flash lidar (Nat. Commun., doi: 10.1038/s41467-022-31087-9).

CLIP enables snapshot 3D imaging with an extended depth range, and it can detect objects around corners or otherwise hidden behind obstructions. The technology may benefit applications where high-speed 3D imaging is desired, including vision for autonomous vehicles and robotics.

The dimensionality gap

Xiaohua Feng at Zhejiang Laboratory, China, and his colleagues Yayao Ma and Liang Gao at the University of California, Los Angeles, USA, aimed to close the so-called dimensionality gap that exists between a 3D scene and the sensors that record it. Because camera sensors are arranged in 2D, they capture only a 2D projection of the scene from a given perspective. To recover depth, more measurements must be made along an extra axis of light.

Multiview measurements add an angular axis of light by incorporating an array of cameras, similar to the compound eye that allows a fly to observe the same object from multiple lines of sight. Another method, time-of-flight sensing, includes a temporal axis by using photon travel time to calculate distances, analogous to bat echolocation with light instead of sound.
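The time-of-flight principle can be illustrated with a short calculation. This is a minimal sketch, assuming the light travels to the object and straight back along the same path; the function name `tof_distance` is hypothetical and not taken from the paper.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum


def tof_distance(round_trip_seconds: float) -> float:
    """Distance to a reflector, given the round-trip photon travel time."""
    # The pulse covers the distance twice (out and back), hence the factor 1/2.
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


# A pulse that returns after 20 nanoseconds was reflected roughly 3 m away.
distance_m = tof_distance(20e-9)
```

Because light covers about 30 cm per nanosecond, resolving depth at the centimetre scale calls for timing on the order of tens of picoseconds, which is why ultrafast sensors are needed.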

Multiview time-of-flight measurements combine these two approaches, but previous systems have produced huge amounts of data that proved cumbersome to work with, even with a low spatial resolution. In addition, the scanning process was often time consuming.

Avoiding the data deluge

To address these obstacles, the researchers developed CLIP, which works with sensors of arbitrary formats and records a high-dimensional image using less data than the number of pixels in a regular 2D image.

The CLIP system included a streak camera as an ultrafast sensor to capture 3D time-of-flight data and a femtosecond laser on a motorized assembly to illuminate the scene. The streak camera was spatially multiplexed by seven customized lenses to mimic a camera array.

Instead of acquiring spatial information pixel by pixel, as in conventional photography methods, the CLIP system senses the whole scene in each pixel measurement with different sensitivity patterns and distributes the full spatial-measurement process across different viewing angles. The final picture is then computationally assembled from the measurements distributed across those angles, while retaining full-fledged light-field processing capabilities.
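The idea of whole-scene measurements split across views can be sketched in a toy model. Everything below is an assumption made for illustration rather than the authors' method: the scene is a flat, sparse list of 64 pixels, the sensitivity patterns are random, parallax between views is ignored, and the reconstruction uses a generic iterative soft-thresholding (ISTA) solver.

```python
import random

random.seed(0)

N_PIXELS = 64              # unknowns in the flattened toy scene
N_VIEWS = 7                # number of views in the hypothetical array
PER_VIEW = 5               # measurements recorded in each view
M = N_VIEWS * PER_VIEW     # 35 measurements in total, fewer than N_PIXELS

# Sparse test scene: three bright points on a dark background.
scene = [0.0] * N_PIXELS
for index, value in ((5, 1.0), (20, 0.7), (41, 0.4)):
    scene[index] = value

# Each row is one sensitivity pattern that weights every pixel of the scene.
patterns = [[random.gauss(0.0, 1.0) / M ** 0.5 for _ in range(N_PIXELS)]
            for _ in range(M)]
# Assign a small block of patterns to each view.
views = [patterns[v * PER_VIEW:(v + 1) * PER_VIEW] for v in range(N_VIEWS)]


def measure(rows, x):
    """One number per pattern: a weighted sum over the whole scene."""
    return [sum(w * p for w, p in zip(row, x)) for row in rows]


def back_project(rows, residual):
    """Transpose of `measure`: spread each residual back over the pixels."""
    return [sum(row[j] * r for row, r in zip(rows, residual))
            for j in range(len(rows[0]))]


def soft_threshold(v, t):
    """Shrink v toward zero by t, which promotes sparse solutions."""
    return max(abs(v) - t, 0.0) * (1.0 if v > 0 else -1.0)


def ista(rows, y, lam, n_iter=300):
    # The squared Frobenius norm is a conservative bound on the Lipschitz constant.
    lipschitz = sum(w * w for row in rows for w in row)
    x = [0.0] * len(rows[0])
    for _ in range(n_iter):
        residual = [a - b for a, b in zip(measure(rows, x), y)]
        grad = back_project(rows, residual)
        x = [soft_threshold(xi - g / lipschitz, lam / lipschitz)
             for xi, g in zip(x, grad)]
    return x


# Every view contributes its own few measurements; together they form y.
y = [value for rows in views for value in measure(rows, scene)]

lam = 0.01 * max(abs(g) for g in back_project(patterns, y))
estimate = ista(patterns, y, lam)
```

The point of the sketch is the bookkeeping: 35 numbers, five per view, stand in for a 64-pixel scene, and a solver that favors sparse scenes assembles an estimate from all views jointly. How closely `estimate` matches `scene` depends on the patterns, the sparsity of the scene and the number of iterations.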

Seeing through blind spots

3D imaging of a 2×2 grid pattern moving across the CLIP camera’s field-of-view behind a rectangular occlusion. [Image: Intelligent Optics Laboratory, L. Gao/UCLA]

In a proof-of-concept demonstration, Feng and his colleagues set up three different scenes with objects that appeared completely blocked by occlusions from a front-facing view. The CLIP system could successfully recover the occluded objects with clear separation between the objects and occluder in all of the scenes.

“The high-dimensional information recorded by the CLIP camera system can enable a 3D camera to see through occlusions and extend the imaging range of time-of-flight sensors such as LIDAR cameras,” said Feng. “For example, by leveraging light field information to synthesize a large imaging aperture, it can spot a pedestrian blocked by pillars of a car or track people moving across a crowd.”