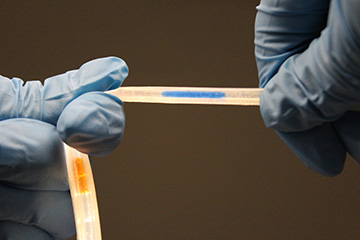

The stretchable sensor is infused with colorful dyes that act as spatial locators. [Image: Cornell University]

Distributed fiber-optic sensor systems on the market today are powerful tools for monitoring changes in strain, temperature and pressure in stiff infrastructure such as roads and bridges. Stretchable fiber optics for applications like soft robotics, however, have proved more elusive.

Researchers at Cornell University, USA, led by Robert Shepherd, have now developed multifunctional, pliable optomechanical sensors capable of differentiating between various mechanical deformations (Science, doi: 10.1126/science.aba5504). The team demonstrated its stretchable skin by mounting the sensors on the fingers of a 3D-printed glove and tracking the glove’s movements, including both force and bending, in real time.

With further optimization, the team believes this approach could find use in soft robotics, physical therapy, sports medicine and even AR/VR applications.

Stretching functionality further

This latest work is not Shepherd’s first rodeo with stretchable sensors. It builds on a 2016 project by his team in which a photodiode measured the intensity of light sent through a waveguide to detect when the material deformed. With the new work, Shepherd, co-lead author and Ph.D. student Hedan Bai, and their colleagues wanted to push a single stretchable sensor to detect more than just light intensity.

“Soft matter can be deformed in a very complicated, combinational way, where a lot of deformations happen at the same time,” explains Bai. “Many previous stretchable sensors are not able to differentiate the many deformation types with one output and therefore need extended system integration or machine-learning algorithms.”

Color and intensity

To beef up the functionality of their soft sensor, the researchers took a cue from nature—specifically, how unique receptors in mammalian skin respond to different kinds of stimuli, and how those signals are shared in the nervous system to create a full picture of what is touching the skin.

The Cornell team’s skin-like sensor takes advantage of light’s ability to carry rich information, including a broad spectrum of wavelengths, intensity, polarization and direction, so that more information can be extracted from a single optical transmission line. The sensor, dubbed a stretchable lightguide for multimodal sensing (SLIMS), infers deformation from the way geometric changes in the lightguide alter the light traveling through it.

The elastomeric tube has a dual-core structure: one core is transparent and the other is infused with dyes. The transparent core reports changes in intensity, and the dyed core adds color information; together they give a complete picture of how, where and by how much the material is deforming, all without a machine-learning algorithm.
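To make the dual-core idea concrete, here is a minimal Python sketch. It is not the Cornell team's method; it assumes a single deformation at a time, three dyed zones with made-up RGB attenuation signatures (`DYE_SIGNATURES`, hypothetical), and a transparent core whose fractional intensity loss stands in for deformation magnitude. The function `read_sensor` is illustrative only: it picks the dye zone whose signature best matches the observed color change.

```python
import math

# Hypothetical calibration: how strongly deforming each dyed zone reduces the
# R, G and B channels (arbitrary units). A real device would measure these.
DYE_SIGNATURES = {
    "zone_1": (0.9, 0.1, 0.1),
    "zone_2": (0.1, 0.9, 0.1),
    "zone_3": (0.1, 0.1, 0.9),
}


def relative_drop(rest, now):
    """Fractional loss in each RGB channel relative to the undeformed reading."""
    return [(r - n) / r for r, n in zip(rest, now)]


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero length)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def read_sensor(clear_rest, clear_now, dyed_rest, dyed_now):
    """Return (magnitude, location) for a single deformation.

    magnitude: fractional intensity loss in the transparent core.
    location: dye zone whose signature best matches the dyed core's color change.
    """
    magnitude = (clear_rest - clear_now) / clear_rest
    drop = relative_drop(dyed_rest, dyed_now)
    location = max(DYE_SIGNATURES, key=lambda z: cosine(drop, DYE_SIGNATURES[z]))
    return magnitude, location
```

For example, `read_sensor(1000, 800, (500, 500, 500), (480, 300, 470))` reports a 20 percent intensity loss located at `zone_2`, because the green channel dropped most. Matching on the direction of the color change (cosine similarity) rather than its size keeps location and magnitude separate, which mirrors the division of labor between the two cores described above.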

Fits like a glove

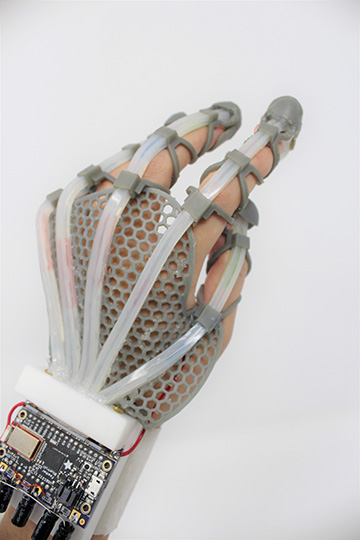

Researchers designed a 3D-printed glove lined with stretchable fiber-optic sensors that use light to detect a range of deformations. [Image: Cornell University]

Putting the design’s multifunctionality on display, Bai and her colleagues constructed a 3D-printed glove with a sensor running down the length of each finger. A white LED shines from the fingertips of the glove, and an RGB sensor chip at the wrist measures the output of each finger’s sensor.

As the glove moves, the dyes light up and act as spatial encoders. The data are sent wirelessly via Bluetooth to a computer program Bai designed, which uses a mathematical model to decompose the glove’s complex deformation in real time. “With a single SLIMS sensor,” Bai says, “we can reconstruct the motion and forces of each of the three finger joints.”
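The article does not spell out Bai's model, so the following is only a hedged sketch of one way such a decomposition could work. It assumes a Beer-Lambert-style forward model in which each of three dyed segments (one per joint) attenuates the R, G and B channels in proportion to its deformation, with a hypothetical calibration matrix `ABSORBANCE`. Inverting that linear model recovers one deformation value per joint; `simulate_reading` and `decode_joints` are illustrative names, and unlike the real system this sketch does not separate force from bending.

```python
import math

# Hypothetical calibration matrix: absorbance per unit deformation of each dyed
# segment (columns: joints 1-3) in each sensor channel (rows: R, G, B).
ABSORBANCE = [
    [0.9, 0.1, 0.2],
    [0.2, 0.8, 0.1],
    [0.1, 0.2, 0.7],
]


def simulate_reading(reference, joints, absorbance=ABSORBANCE):
    """Forward model: I_c = I0_c * exp(-sum_j A[c][j] * x_j)."""
    return [
        i0 * math.exp(-sum(a * x for a, x in zip(row, joints)))
        for i0, row in zip(reference, absorbance)
    ]


def attenuation(reference, measured):
    """Per-channel absorbance A_c = -ln(I_c / I0_c)."""
    return [-math.log(i / i0) for i0, i in zip(reference, measured)]


def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("calibration matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def decode_joints(reference, measured, absorbance=ABSORBANCE):
    """Invert the forward model to estimate the deformation at each joint."""
    return solve_linear(absorbance, attenuation(reference, measured))
```

Feeding `decode_joints` the output of `simulate_reading([1000.0] * 3, [0.5, 0.0, 0.2])` returns values matching `[0.5, 0.0, 0.2]` to within floating-point rounding. Taking the logarithm turns the multiplicative attenuation into a linear system; a 3-by-3 system keeps the sketch simple, and with more unknowns than color channels the inversion would be underdetermined and would need extra measurements or constraints.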

Moving to market

The implications of these results, Bai says, are very exciting. “Right now, sensing is done mostly by vision. We hardly ever measure touch in real life. This skin is a way to allow ourselves and machines to measure tactile interactions in a way that we now use the cameras in our phones.”

She envisions using this approach to enrich AR/VR experiences, prosthetics, surgical tools and biomechanical analysis. Indeed, the Cornell team is already pursuing commercialization through Shepherd’s company, the Organic Robotics Corporation, which plans to release wearable products as soon as 2021. Bai has also received interest from the baseball industry in using the technology for player tracking, a field that has benefited from motion-tracking technology in the past but until now has been unable to capture force interactions.

The research was supported by the National Science Foundation (NSF), the Air Force Office of Scientific Research, Cornell Technology Acceleration and Maturation, the U.S. Department of Agriculture's National Institute of Food and Agriculture, and the Office of Naval Research.