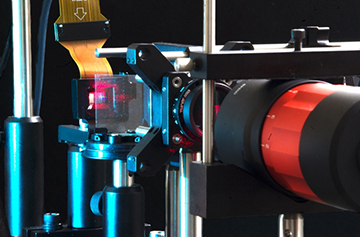

Researchers at Stanford University have developed a holographic display prototype tuned by an artificial neural network, and used it to compare images from coherent, partially coherent and incoherent light sources. [Image: Stanford Computational Imaging Lab]

Virtual-reality and augmented-reality (VR/AR) headsets are becoming increasingly popular among gamers and other users. But their displays are currently limited to two dimensions, projecting a 2D image onto each eye. Holographic displays, on the other hand, offer a route to 3D—but they remain costly and are hampered by speckle.

Researchers in the United States have now demonstrated the basis for a more practical kind of holography that they say could be used in headsets and, potentially, in head-up displays in vehicles. Combining artificial intelligence with partially coherent light sources, the team has shown how to generate holographic images that are sharp, bright and speckle-free (Sci. Adv., doi: 10.1126/sciadv.abg5040).

Ironing out the speckle

To date, almost all holographic displays have relied on coherent light from a laser. But aside from generally being quite expensive, these systems suffer from speckle—the random fluctuations in intensity resulting from the interference of light waves with the same frequency but varying amplitude and phase. This impairs image quality and can potentially harm a person’s eyes.

One way to overcome this problem is to superimpose different speckle patterns through temporal or spatial multiplexing. But this approach generally relies on moving mechanical parts and complex optics, making it unsuited to wearable computing systems such as AR/VR headsets.
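The benefit of superimposing patterns can be illustrated numerically. The sketch below assumes idealized, fully developed speckle (a circular complex Gaussian field) and statistically independent patterns; the function names are illustrative and not taken from the study. Under those assumptions, averaging N patterns lowers the speckle contrast (standard deviation divided by mean intensity) by roughly a factor of the square root of N.

```python
import numpy as np


def speckle_intensity(shape, rng):
    """One idealized speckle pattern: intensity of a circular complex Gaussian field."""
    field = rng.normal(size=shape) + 1j * rng.normal(size=shape)
    return np.abs(field) ** 2


def speckle_contrast(intensity):
    """Speckle contrast, defined as standard deviation over mean intensity."""
    return intensity.std() / intensity.mean()


def averaged_contrast(n_patterns, shape=(256, 256), seed=0):
    """Contrast after averaging n_patterns independent speckle patterns."""
    rng = np.random.default_rng(seed)
    patterns = [speckle_intensity(shape, rng) for _ in range(n_patterns)]
    return speckle_contrast(np.mean(patterns, axis=0))


if __name__ == "__main__":
    for n in (1, 4, 16):
        print(n, round(averaged_contrast(n), 3), "expected about", round(1 / np.sqrt(n), 3))
```

A single pattern has a contrast close to 1, which is why a purely coherent image looks grainy; multiplexing trades that graininess for extra hardware or time.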

In the latest work, Gordon Wetzstein and colleagues at Stanford University, USA, instead exploit partially coherent light sources. To do so, they developed a new mathematical model that describes the propagation of waves from such sources, which can be both spatially incoherent (owing to a finite emission area) and temporally incoherent (due to quite broad emission spectra).
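One common way to model such a source, shown here as a minimal sketch and not as the authors' actual model, is to propagate each wavelength coherently and then add the resulting intensities rather than the fields. The angular spectrum method, the function names, the wavelength-independent modulator phase and all parameter values are assumptions made for illustration. Spatial incoherence could be treated in the same way by also summing over points in the emission area.

```python
import numpy as np


def angular_spectrum(field, wavelength, pitch, distance):
    """Coherent free-space propagation of a sampled field (angular spectrum method)."""
    n_y, n_x = field.shape
    fx = np.fft.fftfreq(n_x, d=pitch)
    fy = np.fft.fftfreq(n_y, d=pitch)
    fxx, fyy = np.meshgrid(fx, fy)
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    # Evanescent components (arg <= 0) are discarded.
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def partially_coherent_intensity(phase, wavelengths, weights, pitch, distance):
    """Weighted sum of intensities over the emission spectrum (temporal incoherence only).

    Assumption: the modulator applies the same phase at every wavelength.
    """
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()
    slm_field = np.exp(1j * phase)
    total = np.zeros(phase.shape)
    for wavelength, weight in zip(wavelengths, weights):
        propagated = angular_spectrum(slm_field, wavelength, pitch, distance)
        total += weight * np.abs(propagated) ** 2  # add intensities, not fields
    return total


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    phase = rng.uniform(-np.pi, np.pi, size=(64, 64))
    spectrum = np.linspace(510e-9, 530e-9, 5)  # hypothetical 20 nm wide spectrum
    image = partially_coherent_intensity(phase, spectrum, np.ones(5), 8e-6, 5e-3)
    print(image.shape, image.mean())
```

A laser corresponds to a single wavelength in this picture, while an LED or superluminescent LED corresponds to many, which is what washes out the speckle.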

Neural network

The researchers’ experiment involved directing the output from a number of different light sources at a spatial light modulator, and in each case using a camera to record the holographic image formed on the far side of the modulator. Repeating this process many times, they used a neural network to compare the image against an ideal target image, so that the network could tweak the spatial light modulator and progressively narrow the difference between the two images.
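The feedback loop described above can be sketched in simulation. The example below is an assumption-laden stand-in, not the team's method: a simulated coherent propagation replaces the physical camera, plain gradient descent with a backtracking step replaces the neural network, and every name and parameter value is hypothetical. It shows only the basic idea of repeatedly comparing the formed image with a target and adjusting the modulator phase to shrink the difference.

```python
import numpy as np


def transfer_function(shape, wavelength, pitch, distance):
    """Angular spectrum transfer function; assumes pitch > wavelength / 2 (no evanescent waves)."""
    fy = np.fft.fftfreq(shape[0], d=pitch)
    fx = np.fft.fftfreq(shape[1], d=pitch)
    fxx, fyy = np.meshgrid(fx, fy)
    kz = 2 * np.pi * np.sqrt(np.maximum(1 / wavelength**2 - fxx**2 - fyy**2, 0.0))
    return np.exp(1j * kz * distance)


def propagate(field, transfer):
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def loss_and_gradient(phase, target, transfer):
    """Squared error between simulated and target intensity, with its gradient in phase."""
    slm_field = np.exp(1j * phase)
    image_field = propagate(slm_field, transfer)
    residual = np.abs(image_field) ** 2 - target
    loss = np.sum(residual**2)
    # Back-propagate the error with the conjugate (adjoint) transfer function.
    back = propagate(residual * image_field, np.conj(transfer))
    gradient = 4 * np.imag(back * np.conj(slm_field))
    return loss, gradient


def optimize_phase(target, transfer, iterations=50, step=0.1, seed=0):
    """Gradient descent on the modulator phase; a step is kept only if the loss drops."""
    rng = np.random.default_rng(seed)
    phase = rng.uniform(-np.pi, np.pi, size=target.shape)
    loss, gradient = loss_and_gradient(phase, target, transfer)
    history = [loss]
    for _ in range(iterations):
        while step > 1e-12:
            trial = phase - step * gradient
            trial_loss, trial_gradient = loss_and_gradient(trial, target, transfer)
            if trial_loss < loss:
                phase, loss, gradient = trial, trial_loss, trial_gradient
                step *= 2.0  # try a bolder step next time
                break
            step *= 0.5  # step too large, shrink and retry
        history.append(loss)
    return phase, history


if __name__ == "__main__":
    shape = (64, 64)
    transfer = transfer_function(shape, 520e-9, 8e-6, 5e-3)
    target = np.zeros(shape)
    target[16:48, 24:40] = 1.0
    target /= target.mean()  # match the unit mean intensity of the simulated image
    _, history = optimize_phase(target, transfer)
    print("initial loss:", history[0], "final loss:", history[-1])
```

In a camera-in-the-loop setup, the simulated image in the loss would be replaced by a captured photograph, so that imperfections of the real optics are corrected as well.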

The team used three different types of light source to generate a variety of 2D images, including ones of a test chart used by the United States Air Force in the 1950s to measure resolution. The researchers found, as expected, that a laser produced the sharpest images—but also lots of speckle. Light-emitting diodes, in contrast, resulted in poor resolution but very little speckle.

They also tried what are known as superluminescent LEDs; these devices produce collimated light like a laser, but operate over a fairly broad spectrum like an LED. The superluminescent LEDs turned out to constitute a happy compromise between the other two sources, being able to display sharp images with significantly less speckle than a laser.

From 2D to (pseudo) 3D

What's more, Wetzstein and colleagues found they could generate a kind of 3D holographic image. They did this by using two cameras, one focused on nearby objects and the other on objects further away, capturing both focal planes simultaneously and using the imperfections in both images to adjust a single spatial light modulator. Here, too, they found that superluminescent LEDs could produce focused images that are both sharp and low in speckle.

True 3D holography, the team points out, will require doing away with the camera currently used to optimize the imaging, since otherwise it would not be possible to display images with continuous depth. Instead, the authors say, the wave propagation model itself would be represented by an artificial neural network, which would be initially trained using a camera and then left to tune the spatial light modulator.

According to the researchers, a miniaturized version of their current prototype could be used directly to make virtual reality headsets. But applications involving glasses-free displays, they suggest, will take a bit more work, since these would require larger-scale display panels. As Wetzstein points out, the size of pixels for holographic displays needs to be on the order of the wavelength of light. This would mean putting together many more pixels than they have to date—which, he says, “is too difficult and costly right now.”
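The link between pixel size and wavelength follows from the grating equation: a pixel pitch p can steer light of wavelength λ by at most a half-angle of arcsin(λ / 2p). The short sketch below works through that arithmetic; the pitches and the panel width are hypothetical values chosen for illustration, not figures from the study.

```python
import math


def max_half_angle_deg(wavelength, pitch):
    """Largest diffraction half-angle (degrees) for a given pixel pitch."""
    ratio = min(1.0, wavelength / (2.0 * pitch))
    return math.degrees(math.asin(ratio))


def pixels_across(panel_width, pitch):
    """Number of pixels needed to span a panel of the given width."""
    return round(panel_width / pitch)


if __name__ == "__main__":
    green = 520e-9  # metres
    for pitch in (8e-6, 1e-6, green):
        print(f"pitch {pitch:.2e} m: half-angle {max_half_angle_deg(green, pitch):.1f} degrees")
    print("pixels across a 30 cm panel at 1 micron pitch:", pixels_across(0.30, 1e-6))
```

A pitch equal to the wavelength gives a half-angle of 30 degrees, whereas an 8-micron pitch gives less than 2 degrees for green light. Wide viewing angles on a large panel therefore call for hundreds of thousands of pixels along each side.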