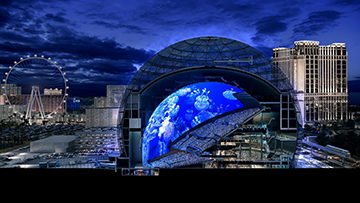

The Sphere, located in Las Vegas, USA. [Image: Sphere Entertainment]

On the night of 4 July, people in Las Vegas, USA, were met with a surprise. The Sphere entertainment venue—a giant orb about 366 feet tall—lit its 1.2 million LED light fixtures for the first time. Reportedly the biggest spherical structure in the world, the Sphere displayed animations showing fireworks, the American flag, the moon and even a blinking eye on its 580,000-square-foot exterior.

Prior to the grand opening of the Sphere’s indoor theater on 29 September—when rock band U2 will start its 25-show residency—OPN spoke with Stuart Elby, senior vice president for advanced engineering at MSG Ventures, a division of Sphere Entertainment that’s developing the 18,000-seat arena.

What is the genesis of the Sphere? What was the motivation behind building a venue of such unprecedented scale?

Stuart Elby: The Sphere is the brainchild of our chairman, Jim Dolan, who runs Madison Square Garden, the venue that most people associate with sports franchises like the New York Rangers and Knicks. The company also owns a lot of facilities including The Theater at Madison Square Garden, the Chicago Theater and Radio City Music Hall.

When Jim was thinking about what’s next for the company, he got to thinking about the history of live theater. And he thought, well, it really hasn’t changed since Ancient Greece. I mean, theaters did adopt new technologies—things like PA systems, microphones and LED screens. But they really haven’t changed dramatically, while other technologies surrounding us have.

On 4 July 2023, the Sphere displayed "hello world" as its exterior lit up. [Image: Sphere Entertainment]

So we asked ourselves, can we build something that leverages the latest technology? Maybe even invent new technology specifically for the theater? And then we said, well, we should also make it a sphere. Why? Why not? It would be different.

What are the advantages of having an entertainment venue that’s a sphere?

In a spherical structure that has a stage, you would have the stage on one side, making the structure more of a hemisphere. For our sphere, the stage starts at about five-eighths of the sphere from the equator, above the ground. And then the seats follow the curvature of the sphere going up. It’s a very steep rake as you go up the sphere. And it seats about 17,500 people in the permanent seating.

With this design, even when you’re in the upper gallery, you don’t feel that distant from the stage because you’re looking down. It’s not like a stadium, where the stage is on one end and you’re sitting at the other end, needing binoculars to see what’s happening on stage. With a steep rake, everyone feels a bit more connected.

Another thing is the Sphere has a 250-megapixel hemispherical LED screen. So the resolution of that thing is great, as your visual acuity is related to what you see per degree. And obviously, the farther away something is, the more you can fit to that degree. So the reason we came up with about 250 megapixels when designing the screen is, in terms of the seats’ distance to the screen, that number of pixels gives you more than 20/20 vision. So it looks like you’re seeing reality. It doesn’t look like you’re seeing the screen.
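The acuity argument can be made concrete with a rough calculation. The sketch below assumes the common rule of thumb that 20/20 vision resolves about one arcminute, or roughly 60 pixels per degree. The function names, the 5 mm pixel pitch and the viewing distances are illustrative assumptions, not Sphere specifications.

```python
import math

# Assumption: 20/20 vision resolves about 1 arcminute, i.e. ~60 pixels per degree.
PIXELS_PER_DEGREE_2020 = 60.0


def pixels_per_degree(pixel_pitch_m: float, viewing_distance_m: float) -> float:
    """Pixels that fit into one degree of visual angle at the given distance."""
    # Visual angle subtended by a single pixel, in degrees.
    pixel_angle_deg = math.degrees(
        2 * math.atan(pixel_pitch_m / (2 * viewing_distance_m))
    )
    return 1.0 / pixel_angle_deg


def beats_2020(pixel_pitch_m: float, viewing_distance_m: float) -> bool:
    """True when the screen packs more pixels per degree than 20/20 vision resolves."""
    return pixels_per_degree(pixel_pitch_m, viewing_distance_m) > PIXELS_PER_DEGREE_2020


if __name__ == "__main__":
    # Hypothetical numbers: a 5 mm pixel pitch seen from 10 m, 20 m and 40 m.
    for distance_m in (10, 20, 40):
        density = pixels_per_degree(0.005, distance_m)
        print(distance_m, round(density, 1), beats_2020(0.005, distance_m))
```

The farther the seat is from the screen, the more pixels fall within each degree, which is why a fixed pixel count can exceed what the eye resolves once the seating distance is known.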

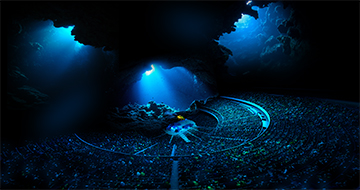

Rendering of an underwater video playing inside the Sphere. [Image: Sphere Entertainment]

And by putting the screen all the way over and around you, you feel like you’re inside of it. The theater kind of plays tricks with your mind, which actually works. Your brain tends to lock on to a horizon line, and that’s how you steady yourself—which is why some people don’t feel well on roller coasters. It’s hard to maintain the horizon line as you’re floating around. But because the Sphere is an artificial environment, we can put the horizon line wherever we want, therefore making you feel like you’re moving however we want you to. So that’s the science that went into the screen.

What about audio?

We needed immersive sound to go with the screen. For birds flying overhead, for example, you want the sound to be coming from those birds and not some speakers in front of you. So we had to come up with a way to put speakers everywhere and focus beams of audio that could follow what’s shown.

But you don’t want the speakers in the way of the screen. So we had to invent a way to do phased array beamforming of audio through a transparent LED screen with all the speakers behind the screen. Those are the fundamental things that we worked on: coming up with a way to make speakers invisible, focus sound, have three-dimensional audio objects that can track video objects and make them in a lifelike resolution.

Your title is senior vice president for advanced engineering at MSG Ventures. How is your role related to the Sphere?

“The artists that are coming to put on shows for the customers sometimes have wild ideas that would require a technology that doesn’t exist.” —Stuart Elby

It’s about the user experience. The artists that are coming to put on shows for the users, or customers, sometimes have wild ideas that would require a technology that doesn’t exist. They would come to my team to invent things that didn’t exist before. A counter to that would be stage lighting. We didn’t have to work on that—you can go and buy nice LED stage lights and mount them. That’s not new technology. But we had to invent the screen, we had to invent the audio system.

After the video and the audio, we decided we wanted the seats to have some motion. So that required a whole new sort of haptic system because we wanted the seats to have a wide range of responses, like the low-wave-like feeling, not just shaking.

Then to make it fully immersive, we had to create the smell and wind and temperature changes. If you go to theme parks like Disney World or Universal Studios, they put things in the back of the seats or close to you to just blow air on you. That’s not very realistic. I mean, it’s fun, but if you had to sit through that for an hour, it would be very annoying, right?

We wanted to create what wind really feels like. You know, it never just comes from one direction—it sort of buffets, lulls and calms. And it makes sounds. And likewise, with smell, we made it very realistic. So we had to invent a whole new type of platform to provide a 17,000–18,000 person audience with a real wind and smell. That’s what my team does. We have to invent the technology for the Sphere.

Inventing new technology sounds like a daunting task.

The Sphere displaying the surface of the moon. [Image: Sphere Entertainment]

I have really smart people that each have their own expertise. But at the same time, we’re not Bell Labs. We don’t have 4,000 Ph.D.s. So our method is more like a VC [venture capital] accelerator. We say, “This is what we need. Is there anyone in the world working on anything like this, even if it’s not for this purpose?”

Audio is a great example: we found a startup company in Berlin, Germany, that was using phased arrays called wave field synthesis, which is similar to optical constructive interference. The company was developing a system for train stations so that announcements didn’t sound unintelligible, and they installed those into some of the stations in Germany. The technology is great because if you’re standing anywhere on the platform, hundreds of feet away from where the speakers are on the wall, you hear the relevant information for that platform. And out of the same speaker, you hear something different if you’re on a different platform. And we said, “That’s the technology.”

But we had to turn the system into something concert grade. It has to be able to carry a full concert-grade orchestration system with all the bells and whistles you need for a concert hall. So we partnered with the company and we invested in them. And we basically codeveloped with them and created the audio system for the Sphere.

So that’s our approach. We did something similar on LEDs, wind and smell, where we found people that had similar fundamental technologies and then worked with them through investment and other mechanisms to get what we needed.

Your background is in telecommunications and data center design, which seems quite different from what you do now. How did you find yourself working on the Sphere?

“The underlying theme [of my career] has always been the optics, which is all physics and math.” —Stuart Elby

I got my undergraduate degree in optical engineering from the University of Rochester, and my first couple of jobs were optical engineering–related work in different industries. And then when I went to Columbia University for a Ph.D., I got into telecommunications because that was during the big bubble of communications, when a lot of fun stuff was going on. But really, the underlying theme has always been the optics, which is all physics and math. Then through my career, I also learned how to run a business and product development and all that.

I think that combination is what attracted MSG Ventures. I didn’t seek them out; they found me. I was sitting in Palo Alto, California, because at that time, I was working at Infinera, which is a photonics integrated-circuit company. Then I got a random phone call from an internal recruiter at MSG, and she was like, “Hi, do you know what MSG is?” So I said, “I grew up in New Jersey, so of course I know.” Then she said, “We’re going to invent a new type of theater. I saw you on LinkedIn. And I know you’ve never done anything like this, but it looks like you have the right background and might fit into our culture.”

So after a bunch of interviews and things, I got the job. I’m late in my career at this point, I’ve worked for a lot of years. So I just wanted to do something really exciting and new. I don’t need a 20-year career—I want a project that’s really exciting. And the Sphere was it. I’m doing all new stuff.

The Sphere against the backdrop of the Las Vegas skyline. [Image: Sphere Entertainment]

And because the chairman is the one who is pushing for it, this one was going to get done—unlike a lot of projects in industry that don’t get to the finish line because they run out of money or business plans change and they get canceled. So I said, okay, the project isn’t going to get diverted. And it’s gonna give me an opportunity to just learn, which I love. So I joined at the very end of 2018, and I’ve been having a great time.

Do you think your previous work in telecommunications has helped you in your current role?

It did. The Sphere is really just a giant data center that drives all this technology. When I first joined the company, I had one person reporting to me who previously worked at Cablevision in the cable industry. And since he is a wireless/wireline expert and I am somewhat of an expert, we had to write the architecture for the Sphere’s network. So that was my first task: developing, documenting and designing the architecture for the wireless and wireline network in the Sphere, which was right in my wheelhouse.

But then I had to pick up audio and camera design. Again, the issue was that the Sphere has many pixels. At this point, there are 12K studio cameras available, but most of them are still at 8K. But even then, 8K in studio language means 8K across 4K pixels, as they’re always for rectangular sensors. So 12K would be 12K by 6K. But we needed something equivalent to 16K by 16K.
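The gap between studio formats and the Sphere's requirement is easy to see as pixel counts. This small sketch assumes "K" is read as a round 1,000 pixels, matching the shorthand used in the interview; real cinema formats use slightly different exact dimensions, and the `megapixels` helper is an illustrative name.

```python
K = 1000  # assumption: "K" is treated as a round 1,000 pixels


def megapixels(width_k: float, height_k: float) -> float:
    """Total pixel count, in megapixels, of a width_k-by-height_k sensor."""
    return (width_k * K) * (height_k * K) / 1e6


# Formats named in the interview: (width in K, height in K)
FORMATS = {"8K by 4K": (8, 4), "12K by 6K": (12, 6), "16K by 16K": (16, 16)}

for name, (width_k, height_k) in FORMATS.items():
    print(f"{name}: {megapixels(width_k, height_k):.0f} MP")
```

Under that assumption, a 16K-by-16K image carries eight times the pixels of an 8K-by-4K studio frame and lands close to the roughly 250-megapixel screen mentioned earlier.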

I’ve never done camera design. But I have an optics background. So I inherited a couple of people who were camera experts, and we designed the first set of cameras, a whole bunch of really interesting camera arrays. The camera work to develop a single camera that could shoot at the Sphere’s required resolution was led by Deanan DaSilva, a genius when it comes to digital camera and sensor design. Now we have a camera that can shoot the full resolution over the full field. To make that happen, we had to go to a number of different places that had the pieces we needed and put them together.

“What I found once I started working in audio is that, fundamentally, it’s really just like optics.” —Stuart Elby

Audio was completely new to me. But what I found once I started working in audio—especially because we’re doing phased arrays—is that, fundamentally, it’s really just like optics. I mean, yes, there are differences between longitudinal waves and transverse waves, and the equations aren’t the same. But the concepts and the underlying physics are really exactly the same. You’re creating constructive and destructive interferences by having lots of little sources that you phase align very carefully. And in doing that, you can steer a beam, change the size of the beam, focus the beam and even create parallel beams, which are really cool.

Parallel beams? Can you elaborate?

They’re not really practical, but we did create parallel beams at a big studio in Germany. The size of the audio beam is basically defined by the size of the speaker. To picture it, imagine a speaker making a rectangular wave. When it leaves, it does diverge the same way a laser will kind of diverge, but very slowly. So at 300 yards away, you could hear almost nothing, and then you walk into the beam and suddenly hear a certain loudness. And I would walk right up to the speaker and it would be the same loudness as it was 300 yards away because all the energy just stays in this collimated beam. That’s not a lot of use, but that’s the level of control we can have.

Mostly what we’re doing is creating beams that can get into places that usually don’t have good sound in a venue, like underneath a balcony or corners. It’s going back to the roots: the physics and math of waves.
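The phase-alignment idea Elby describes can be illustrated with a textbook delay-and-sum model. The sketch below is a generic far-field model of a uniform line of speakers, not the Sphere's wave field synthesis system; the speed of sound, the speaker count, the spacing and all function names are assumptions chosen for illustration.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 °C


def steering_delays(num_speakers, spacing_m, angle_deg, c=SPEED_OF_SOUND_M_S):
    """Per-speaker delays (s) that steer a uniform line array to angle_deg from broadside."""
    step = spacing_m * math.sin(math.radians(angle_deg)) / c
    delays = [n * step for n in range(num_speakers)]
    offset = min(delays)  # shift so that no speaker needs a negative delay
    return [d - offset for d in delays]


def array_gain(delays, spacing_m, look_angle_deg, freq_hz, c=SPEED_OF_SOUND_M_S):
    """Normalized far-field response (0..1) of the delayed array toward look_angle_deg."""
    total = 0j
    for n, delay in enumerate(delays):
        # Speaker n is closer to a far listener in the look direction by this travel time.
        head_start = n * spacing_m * math.sin(math.radians(look_angle_deg)) / c
        total += cmath.exp(2j * math.pi * freq_hz * (head_start - delay))
    return abs(total) / len(delays)


if __name__ == "__main__":
    # Hypothetical array: 16 speakers, 5 cm apart, steered 30 degrees off broadside.
    delays = steering_delays(16, 0.05, 30.0)
    for look in (0.0, 15.0, 30.0, 45.0):
        print(look, round(array_gain(delays, 0.05, look, 2000.0), 3))
```

In the steered direction every contribution arrives in phase and adds constructively; elsewhere the contributions partly cancel, which is the same interference picture used for optical phased arrays.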

MSG developed a camera called Big Sky. Can you tell us what it does?

The Big Sky camera. [Image: Sphere Entertainment]

So we needed a sensor that could have as many or more pixels than the screen. Otherwise, you interpolate and lose resolution. So we had to design a sensor that would be ultrasensitive because, for example, the director may want to shoot a video in low light.

The camera has a 316-megapixel sensor, and it’s a three-by-three-inch square as opposed to a rectangle. We don’t fabricate it ourselves because we’re Madison Square Garden. Deanan, who was the lead designer for the Big Sky camera, went out and surveyed the field, and we found a company that could actually produce what we were looking to produce.

It took a long time to get it going because, I mean, a cinema camera is different from your phone camera. It’s not just the resolution but the full color depth: it has to be 10 or 12 bits deep. It also has to have the right color range. We’re showing things at 60 frames per second, but you want to go 120 frames per second. So the question becomes, can you get a sensor to do that? Then you need the camera body with the electronics to power the sensor, pull the data off and cool it, because the sensor is going to get hot. Cameras need to work in bad places; they’re not going to stay in an office.

Bad places?

To test our cameras, we took them to Antarctica for a solar eclipse about two years ago. The returned footage is amazing, a solar eclipse across Antarctica. There are effects and colors that you never get here in the atmosphere, just because the atmosphere in Antarctica is so different. So after withstanding those conditions, the cameras got taken into jungles and mountains because, for shooting films, the camera has to be weather resistant.

What about other components of the camera?

The Big Sky camera. [Image: Sphere Entertainment]

We’re going to make multiple lenses over time, but for the first one, we wanted a lens whose one shot would cover the entire screen. Think of it as a giant 180° or 185° fisheye lens.

But usually in a fisheye, the resolution starts to fall off as you get to the edge. We didn’t want this to happen with ours because of how the visual part of the Sphere is laid out. It covers about 165° and stops when it gets too close to the seats at the top. So the prime focal point when you’re sitting is not the apex of the Sphere; it’s going to be closer to the edge. So the edge has to be the highest resolution, whereas for a typical camera, the highest-resolution point would normally be the center of the lens. Therefore, a very sophisticated multielement lens had to be designed.

And then special coatings were needed because the big fisheye lens has to focus the image onto a three-by-three-inch plate. You get a lot of reflections and glare, so it requires new types of specialized coatings. So just for the camera, we had to work with different vendors because there wasn’t one vendor that could do everything for us. We partnered with a sensor company, a lens company, a coating company, a cooling-system company and a company that can make the housing—different partners to put it all together.

What about data storage?

The sensor is creating 60 gigabytes per second of data. When you’re in a remote location, what do you do with that? So we had to create a recording system, which basically pulls the data off the sensor and stores them in some sort of device that could then get mailed—not an email but real mail—back to the studio.

Right now, the maximum we can store reliably is 30 gigabytes per second. And that’s fine. That still gives us 60 frames per second. But if you’re shooting for five minutes and saving 30 gigabytes per second, that’s a ton of data, right? So that required a whole bunch of new intellectual properties and inventions. So it’s been quite an adventure to get Big Sky.
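The quoted data rates are consistent with simple arithmetic on the sensor figures given earlier. The sketch below assumes one 12-bit raw sample per pixel, decimal gigabytes and no compression or container overhead; these are illustrative assumptions rather than published Big Sky specifications, and the function names are hypothetical.

```python
def raw_data_rate_gb_per_s(pixels: float, bits_per_pixel: float, frames_per_s: float) -> float:
    """Uncompressed sensor output in gigabytes per second (1 GB = 1e9 bytes)."""
    bytes_per_frame = pixels * bits_per_pixel / 8
    return bytes_per_frame * frames_per_s / 1e9


def footage_size_tb(rate_gb_per_s: float, seconds: float) -> float:
    """Storage needed, in terabytes, to record at a sustained rate for a duration."""
    return rate_gb_per_s * seconds / 1000


if __name__ == "__main__":
    sensor_pixels = 316e6  # 316-megapixel sensor, from the interview
    for fps in (60, 120):
        print(fps, "fps:", round(raw_data_rate_gb_per_s(sensor_pixels, 12, fps), 2), "GB/s")
    # Five minutes recorded at the 30 GB/s storage limit mentioned above.
    print("5 min at 30 GB/s:", footage_size_tb(30, 5 * 60), "TB")
```

Under these assumptions, 120 frames per second works out to just under 60 gigabytes per second and 60 frames per second to just under 30, and a five-minute take at 30 gigabytes per second fills about 9 terabytes.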

What other applications is MSG Ventures looking into for all the new technologies that the company developed with the Sphere?

“We’ve generated a large patent portfolio, and I’m very excited to see what could happen with it.” —Stuart Elby

We’re an entertainment company by definition. So what we really care about is entertainment. But we’ve generated a large patent portfolio, and I’m very excited to see what could happen with it.

You know, when we create wind, we’re using the Venturi effect—basic physics to create wind without using fans. People use fans on Hollywood sets to make hurricane scenes. But those giant fans are super loud. I mean, you can’t record over them. And we would need a huge array of them, so fans were not ideal.

So we had to create something that was quieter. We’re using something similar to a Dyson fan—72 of them that are huge. Okay, but they still make noise. So we wondered, what can we put into the cone that’s shooting the air around to lessen the noise? We thought about active noise-canceling headsets, but the technology wouldn’t work across the space since there are too many variations. We would have to have something passive.

We connected with a couple of audio engineers at NASA who work on deadening the sound from jet engines. And we just batted ideas around and eventually came up with something novel that the engineers may use now as well. What we created has a very interesting physical structure, like that of a honeycomb. We basically created half-wave interference of various frequencies and dropped the noise level down by a good 12 dB, which is significant.
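To put the 12 dB figure in perspective, the sketch below converts a decibel change into power and sound-pressure ratios and shows the idealized cancellation that occurs when a wave meets an equal copy of itself that has travelled half a wavelength farther. It is generic acoustics with hypothetical function names, not a model of the honeycomb structure described above.

```python
import cmath
import math


def power_ratio(change_db: float) -> float:
    """Ratio of acoustic power after a level change given in decibels."""
    return 10 ** (change_db / 10)


def pressure_ratio(change_db: float) -> float:
    """Ratio of sound-pressure amplitude after a level change given in decibels."""
    return 10 ** (change_db / 20)


def two_path_amplitude(path_difference_wavelengths: float) -> float:
    """Relative amplitude (0..1) of a wave summed with an equal, path-delayed copy."""
    phase = 2 * math.pi * path_difference_wavelengths
    return abs(1 + cmath.exp(1j * phase)) / 2


if __name__ == "__main__":
    print("power ratio at -12 dB:", round(power_ratio(-12), 3))
    print("pressure ratio at -12 dB:", round(pressure_ratio(-12), 3))
    for difference in (0.0, 0.25, 0.5):
        print(difference, "wavelengths:", round(two_path_amplitude(difference), 3))
```

A 12 dB drop corresponds to roughly one-sixteenth of the acoustic power, or about a quarter of the pressure amplitude. Because a half-wave path difference only cancels perfectly at one frequency, a passive structure has to provide many path lengths to cover a range of frequencies.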

Speaking of all the physics and math, the Sphere’s website has a page on “The Science”. Why did the company think it was important to put the science out there for the public?

A rendering of the Sphere's cross section, showing the inside of the venue. [Image: Sphere Entertainment]

The page displays our vision of using the latest technology and underlying science to create a new type of entertainment venue. And our CEO and chairman [Jim Dolan] felt it was very important to express that. I mean, maybe 80% of people won’t care. They’re going to come see a good show. But there will be that 20% who are going to walk into the building and go, “wow,” and wonder how it got put together, because even the architecture of the Sphere is very, very novel.

There’s a building inside the exosphere that you see, and it’s a self-supporting sphere, which is cool. The theater inside is an unsupported sphere, similar to a keystone bridge. We built it by putting a huge skyscraper scaffolding up—a big central ring—and then put “pie” pieces against it. And when the pieces were all together, we took the scaffolding out. The whole thing only moved a fraction of a millimeter. And now it’s self-supporting.

We put the science equations on the web. And when you go into some of the bars and lounges that are part of the theater, those equations will be showing up on the walls. It’s very futuristic-looking—sort of high tech inside.

We’ve talked a lot about the technological advances. In your opinion, what would be the Sphere’s biggest technological achievement?

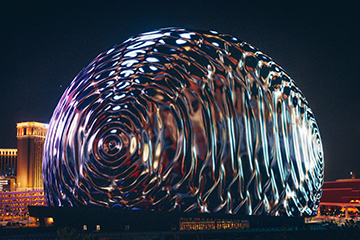

The Sphere's exterior displaying wave interference patterns. [Image: Sphere Entertainment]

I think the audio was probably the biggest single thing, and then allowing audio through the LED screen. The LED screen itself was just a lot of brute-force engineering. We didn’t invent a new type of LED—it was a matter of, “can you mount LEDs on a very perforated structure that has all the wires so that there’s enough air gap for sound to go through?” And the scale made it hard. I mean, for the resolution, we have to maintain one-millimeter tolerance across the entire screen from the left side to the right side of the Sphere. The screen is four acres, and holding a tolerance of a millimeter on that scale is tough.

But those are hard engineering problems, not inventing something novel. The audio was totally novel. And I think we’re going to see more and more companies doing wave field synthesis and beamforming. You’re starting to see it even at home. The latest sound bars for televisions do pretty cool things using reflections off ceilings and walls. We’re just doing it in a space way bigger than living rooms, with a lot more speakers.

The wind thing is very cool, too. Again, it’s derivative in that we had to think about how to come up with something like a Dyson fan and Venturi system for pushing compressed air out of little nozzles, which creates a vacuum.

We found something in the large shipping world. It turns out that when a big freighter ship for gas, oil or some other toxic chemical is done unloading, it still has fumes in the tank that could be dangerous or explosive. Someone invented these huge aluminum or steel rings that are basically Venturi systems, and they’re made to go on top of big shipping portals on top of the boats. And people use it to suck out gas as quickly as they can by putting compressed air through it. When we discovered it, we said, “that’s it.”

“So a lot of the process was to find useful technologies that are totally unrelated in terms of their use.” —Stuart Elby

Then we just had to take that system and invent a cone to put over it, so it would direct the air. We discovered that you can actually get the cones to entrain each other and create single sheets of air. But again, the foundational technology was designed to get rid of dangerous chemicals and smells out of ships. So a lot of the process was to find useful technologies that are totally unrelated in terms of their use.

And what about challenges? If you had to pick one, what was the biggest challenge that the team faced, and how did the team overcome it?

I think that every one of these technologies has its own challenges. And the hardest part of the project was that all these technologies had to be integrated to provide a show. That was the hardest part because it means a compromise. I can make wind that’s 140 miles an hour, but that’s going to mess up the lighting, right? There were lots of trade-offs.

I think the hardest part has been the overall systems integration of all the technologies to create something meaningful that can offer a great experience. It’s not just one technology. It’s how we brought them together.

After talking with you, I am very excited to check out the Sphere myself.

A photo of the Sphere taken at sunrise. [Image: Sphere Entertainment]

At this point in time, people have only seen the exosphere. And I would like to just comment on the fact that we created it using standard LEDs. But what’s really clever is that we created it with “hockey pucks” that each have 48 LEDs. The exosphere is made to be viewed from a quarter mile to half a mile away, to be seen around Las Vegas. When you get too close to it, it’s just a bunch of lights. We had to figure out how to take these pucks, each one with multiple LEDs, and resolve them as pixels. Then we put them all over a trellis structure and equipped it with full-video capability—which looks really cool.
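A rough estimate shows why the exosphere is meant to be seen from a distance. The sketch below assumes that the 1.2 million LED fixtures mentioned at the top of the article are the pucks, that they are spread evenly over the 580,000-square-foot exterior on a square grid, and that the eye resolves about one arcminute. None of these assumptions comes from the interview, and the function names are illustrative.

```python
import math

SQFT_TO_M2 = 0.3048 ** 2
MILE_M = 1609.344


def puck_spacing_m(area_sqft: float, puck_count: float) -> float:
    """Centre-to-centre spacing if pucks sat on a uniform square grid (an assumption)."""
    return math.sqrt(area_sqft * SQFT_TO_M2 / puck_count)


def angular_size_arcmin(size_m: float, distance_m: float) -> float:
    """Visual angle, in arcminutes, subtended by an object of size_m at distance_m."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m))) * 60


if __name__ == "__main__":
    pucks = 1.2e6
    print("total LEDs:", int(pucks * 48))
    spacing = puck_spacing_m(580_000, pucks)
    print("spacing (m):", round(spacing, 3))
    for miles in (0.25, 0.5):
        print(miles, "mile:", round(angular_size_arcmin(spacing, miles * MILE_M), 2), "arcmin")
```

With these inputs the pucks come out about 0.21 m apart, which subtends just under 2 arcminutes at a quarter mile and just under 1 arcminute at half a mile. That is in line with the stated viewing range, where individual pucks begin to blend into an image.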

Another issue is that the lights are outward facing, so they really needed to be plastic. They couldn’t be metal because we didn’t want the Sphere to become a magnet for lightning. The other thing was that LEDs burn out. We decided that one can’t use tools to change those lights because we didn’t want someone up 300 or 400 feet with a screwdriver or wrench and then dropping it. To avoid this danger, we made the pucks in a way that, when LEDs die, someone can go up, pull off a puck and put in a new one without tools.

This is really just incredibly intelligent electromechanical engineering, how these pucks clip on and off. And the mechanical ingenuity that went into making the whole Sphere—it’s great.